WebRTC can work Peer-to-Peer and Peer-to-Server, where the peer is usually a browser or a mobile application. In this article we describe the Server-to-Server mode of WebRTC: what it is for and how it works.

Scaling, Origin-Edge

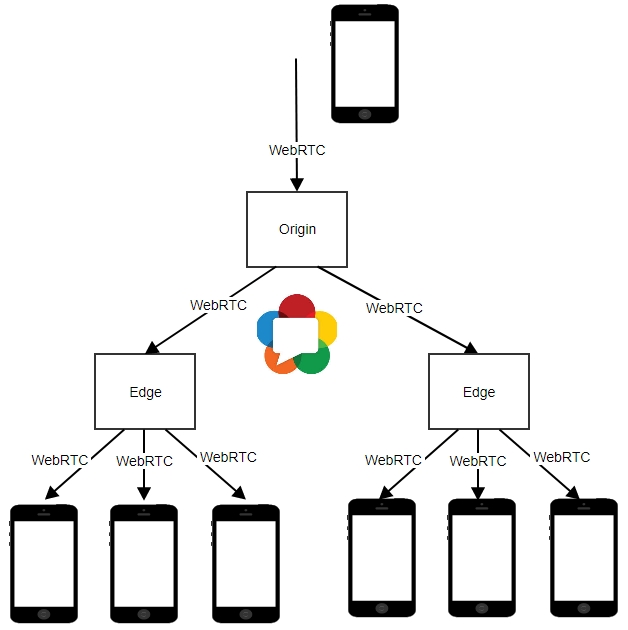

What are the possible uses of Server-to-Server WebRTC? The obvious answer is the Origin-Edge pattern, which is used to scale a broadcast to a large audience.

- A user sends a WebRTC video stream to the Origin WebRTC server from a browser or a mobile device.

- The Origin server sends the stream to multiple Edge servers.

- The Edge servers broadcast the stream to end users in browsers or mobile applications.
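The fan-out in the three steps above can be put into numbers. The following is a minimal sketch: the function name and the sample figures (10 Edge servers, 500 viewers each) are illustrative assumptions, not measurements.

```javascript
// Illustrative arithmetic only: the numbers below are assumptions, not benchmarks.
function originEdgeCapacity({ edges, viewersPerEdge }) {
  return {
    // The Origin sends one copy of the stream to each Edge server.
    originOutgoingStreams: edges,
    // Viewers are served by the Edge servers only.
    totalViewers: edges * viewersPerEdge,
  };
}

const plan = originEdgeCapacity({ edges: 10, viewersPerEdge: 500 });
console.log(plan); // { originOutgoingStreams: 10, totalViewers: 5000 }
```

The point of the pattern is visible in the result: the Origin handles as many outgoing streams as there are Edge servers, regardless of the audience size.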

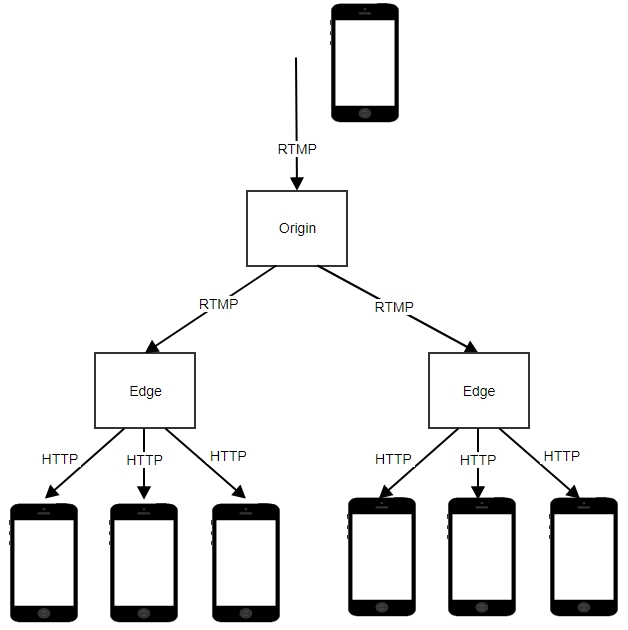

In a modern CDN, video is usually delivered as follows: the stream is published to the Origin server and sent on to the Edge servers via RTMP, while end users receive the video via HTTP.

The advantage of WebRTC over this approach is low latency that RTMP / HTTP delivery cannot match, especially when nodes are geographically distant from each other.

For us, however, Server-to-Server WebRTC did not start with scaling.

Stress-tests

Stress tests are widely used in one form or another: automatic or semi-automatic, synthetic or emulating user activity. We used and still use stress-testing to catch multithreading bugs, detect resource leaks, guide optimization and do many other things that conventional testing cannot achieve.

For the latest test we deployed GUI-less Linux servers in the cloud and ran scripts that started many Chrome browser processes on an X11 virtual desktop. This way we ran real WebRTC browsers that fetched and played WebRTC video streams from Web Call Server (WCS), creating a load very similar to the real load on the server. To the server, it looked as if a real user had opened a browser and retrieved the video stream, fully exercising the browser's WebRTC stack, including video decoding and rendering.

The bottleneck of this method was the performance of the test system. It is quite difficult to run many Chrome processes on one Linux system, even with tons of RAM and CPU. As a result, we had to deploy multiple servers and then control and manage them.

Another limitation was insufficient flexibility: we could not control the Chrome processes. There were literally two operations available:

- Run a process and open the URL.

- Kill the process.

Upon opening the URL, an HTML page was loaded and a connection to the server was established automatically using JavaScript, Websocket and WebRTC. This way we simulated the load caused by spectators.

We needed a flexible, high-performance stress-testing tool that would let us put a realistic load on the server and control the tests programmatically.
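The two operations above can be sketched as a small Node.js helper. This is not the script we actually used. The class name `ChromeViewers`, the binary name `google-chrome`, the display number `:99` and the profile path are all assumptions made for illustration.

```javascript
// Hypothetical helper: binary name, display number and profile path are assumptions.
const { spawn } = require('node:child_process');

class ChromeViewers {
  constructor({ binary = 'google-chrome', display = ':99', spawnFn = spawn } = {}) {
    this.binary = binary;
    this.display = display;     // X11 virtual desktop the browsers render to
    this.spawnFn = spawnFn;     // injectable, so the helper can be tried without Chrome
    this.processes = new Map(); // viewer id -> child process
    this.nextId = 1;
  }

  // Operation 1: run a process and open the URL.
  open(url) {
    const id = this.nextId++;
    const args = [
      `--user-data-dir=/tmp/chrome-viewer-${id}`, // separate profile per process
      '--no-first-run',
      url,
    ];
    const child = this.spawnFn(this.binary, args, {
      env: { ...process.env, DISPLAY: this.display },
      stdio: 'ignore',
    });
    this.processes.set(id, child);
    return id;
  }

  // Operation 2: kill the process.
  kill(id) {
    const child = this.processes.get(id);
    if (!child) return false;
    child.kill('SIGKILL');
    this.processes.delete(id);
    return true;
  }
}
```

Nothing beyond `open` and `kill` is possible here, which is exactly the lack of control described above.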

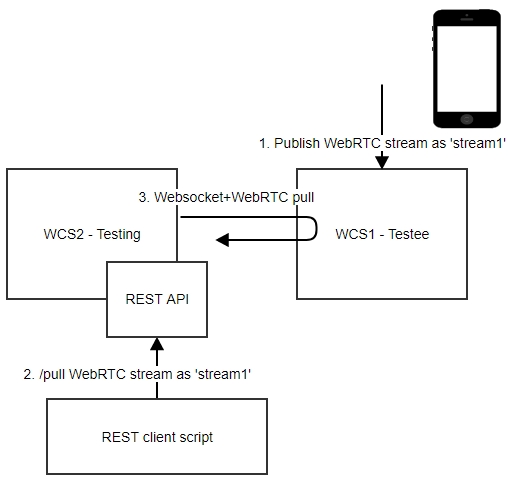

Testing Server-to-Server

We concluded that our own server nodes could generate the required load themselves, as long as we properly connected them to the tested servers.

We implemented this idea as WebRTC pulling: one WCS server can fetch a stream from another WCS server via WebRTC. To do this, we introduced an internal abstraction, WebRTCAgent: an object that is deployed on the testing node and fetches the WebRTC stream from the tested node by connecting to it via Websocket+WebRTC.

Then we exposed control over WebRTCAgent via REST. As a result, running a stress test came down to invoking /pull methods on the REST interface of the testing node.

Using Server-to-Server WebRTC, we managed to increase the overall performance of stress-testing sevenfold and significantly reduce resource usage compared to running Google Chrome processes.

So we managed to fetch WebRTC streams from other servers. The testing server connected to the tested one via Websocket and acted like a well-behaved browser: it established an ICE connection, performed the DTLS handshake and received SRTP streams. The result: true WebRTC pulling.

Little was needed to get a full-featured Origin-Edge model. Specifically, we needed to feed the pulled stream into the WCS server engine so that it looks like a stream from a web camera. The WCS server can already broadcast such streams via any of the supported protocols: WebRTC, RTMP, RTMFP, Websocket Canvas, Websocket MSE, RTSP, HLS.
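To illustrate how a stress test reduces to a series of /pull invocations, here is a minimal sketch that prepares the request bodies for many pull sessions of one stream. The field names follow the /rest-api/pull/startup example given later in this article. The function name and the `_pull_N` naming scheme for local streams are assumptions.

```javascript
// Builds `count` request bodies, each describing one pull session of the same
// remote stream. The local stream naming scheme is a hypothetical choice.
function buildPullRequests(originUri, remoteStreamName, count) {
  return Array.from({ length: count }, (_, i) => ({
    uri: originUri,
    remoteStreamName,
    localStreamName: `${remoteStreamName}_pull_${i + 1}`,
  }));
}

const bodies = buildPullRequests('wss://wcs1-origin.com:8443', 'stream1', 3);
console.log(bodies.map((b) => b.localStreamName));
// [ 'stream1_pull_1', 'stream1_pull_2', 'stream1_pull_3' ]
```

Each body would then be sent to the testing node, so the number of simulated spectators is simply the number of requests.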

Origin-Edge on WebRTC

It turned out that we were developing Server-to-Server WebRTC for stress-testing but ended up with an Origin-Edge implementation for scaling WebRTC broadcasts. Here is how it works:

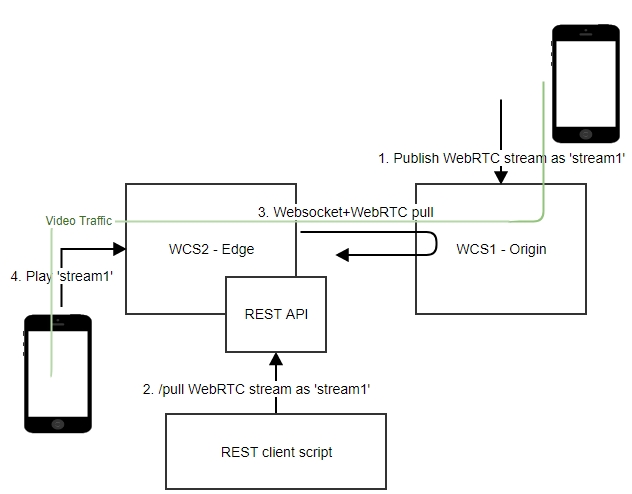

The green line marks the path of the video traffic.

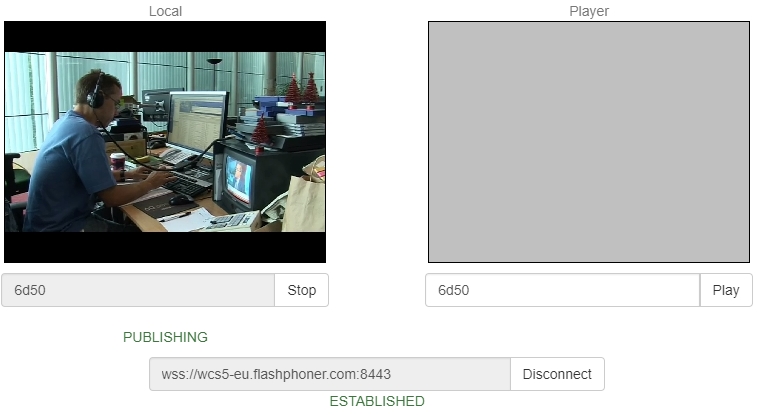

1. A user in a browser or a mobile application uses the web camera to send a WebRTC video stream named stream1 to WCS1, the Origin. Sending the video stream using the web example Two Way Streaming looks as follows:

And here is the JavaScript code that publishes the video stream using the Web API (Web SDK):

session.createStream({name:'stream1',display:document.getElementById('myVideoDiv')}).publish();

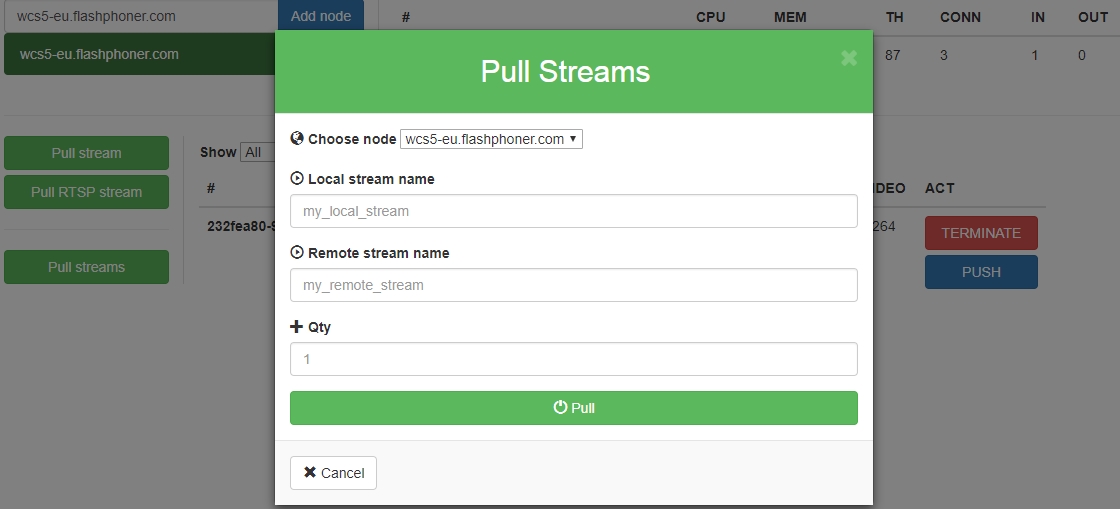

2. Then, using the REST/HTTP API, we ask WCS2, the Edge, to fetch the video stream from the Origin server.

/rest-api/pull/startup

{
"uri": "wss://wcs1-origin.com:8443",
"remoteStreamName": "stream1",
"localStreamName": "stream1_edge"
}

The WCS2 server connects to WCS1 via Websockets at wss://wcs1-origin.com:8443 and receives the stream named stream1 via WebRTC.

After that we can execute the REST command

/rest-api/pull/find_all

to find all existing pull connections, or the command

/rest-api/pull/terminate

to close the pull connection with the Origin WebRTC server.
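The three REST commands can be wrapped in a small client. This is a sketch under stated assumptions, not a reference client. It assumes the REST interface accepts HTTP POST with a JSON body. The Edge REST address in the usage comments is hypothetical, and so is the request body for terminate.

```javascript
// Assumption: the REST API is called with HTTP POST and a JSON body.
function pullRequest(baseUrl, method, body = {}) {
  return {
    url: `${baseUrl}/rest-api/pull/${method}`,
    options: {
      method: 'POST',
      headers: { 'Content-Type': 'application/json' },
      body: JSON.stringify(body),
    },
  };
}

// Sends the request; `fetchFn` defaults to the global fetch (browsers, Node.js 18+).
async function callPull(baseUrl, method, body, fetchFn = fetch) {
  const { url, options } = pullRequest(baseUrl, method, body);
  const response = await fetchFn(url, options);
  if (!response.ok) throw new Error(`${method} failed: HTTP ${response.status}`);
  const text = await response.text();
  return text ? JSON.parse(text) : null; // tolerate an empty response body
}

// Usage (not executed here; EDGE is a hypothetical REST address of WCS2):
// const EDGE = 'https://wcs2-edge.example:8444';
// await callPull(EDGE, 'startup', {
//   uri: 'wss://wcs1-origin.com:8443',
//   remoteStreamName: 'stream1',
//   localStreamName: 'stream1_edge',
// });
// const sessions = await callPull(EDGE, 'find_all');
// await callPull(EDGE, 'terminate', { uri: 'wss://wcs1-origin.com:8443' }); // body is an assumption
```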

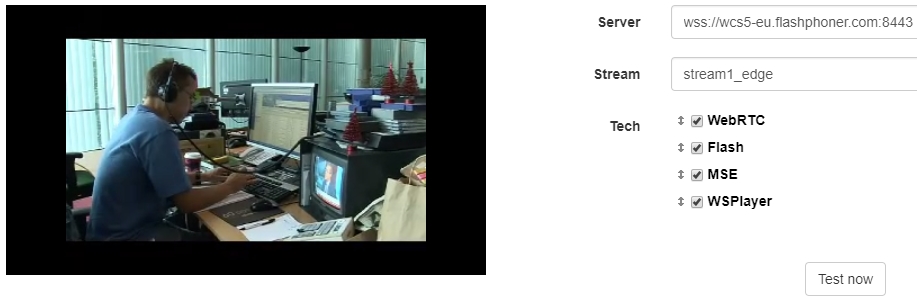

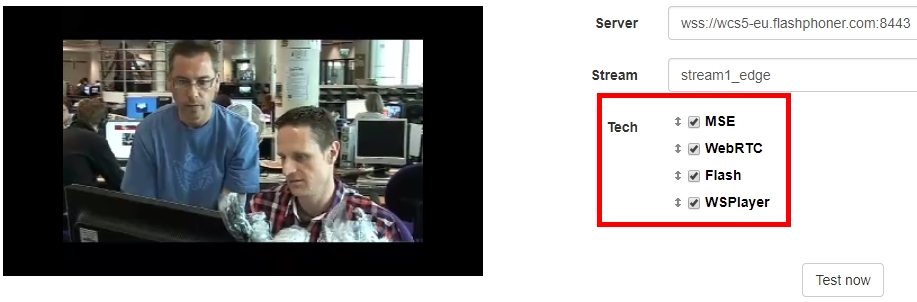

3. Finally, we fetch the stream from the Edge server via WebRTC in the player. Enter the name of the stream, stream1_edge, and play it.

The WCS server supports several ways to play the stream. To change the playback technology, simply drag MSE or WSPlayer up to play the stream using MSE (Media Source Extensions) or WSPlayer (a Websocket player for iOS Safari) instead of WebRTC.

Hence, the Origin-Edge scheme worked fine, and we got low-latency scaling of the WebRTC server.

Note that scaling worked before too, via RTMP: the WCS server can republish incoming WebRTC streams to one or more servers via RTMP. Against that background, Server-to-Server WebRTC support is a step towards lower overall latency of the entire system.
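In code, playback on the Edge side mirrors the publishing snippet shown in step 1. The sketch below assumes that `session` is an already established Web SDK session connected to WCS2 and that the stream object offers a `play()` call next to `publish()`. The helper name `playEdgeStream` is hypothetical.

```javascript
// Assumptions: `session` is an established Web SDK session on the Edge server,
// and streams created by it expose play() alongside publish().
function playEdgeStream(session, displayElement, name = 'stream1_edge') {
  const stream = session.createStream({ name, display: displayElement });
  stream.play();
  return stream;
}

// Usage in a page (not executed here):
// playEdgeStream(session, document.getElementById('myVideoDiv'));
```

Only the stream name and the server differ from publishing: the viewer asks the Edge for stream1_edge and never contacts the Origin.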

Back to stress-testing

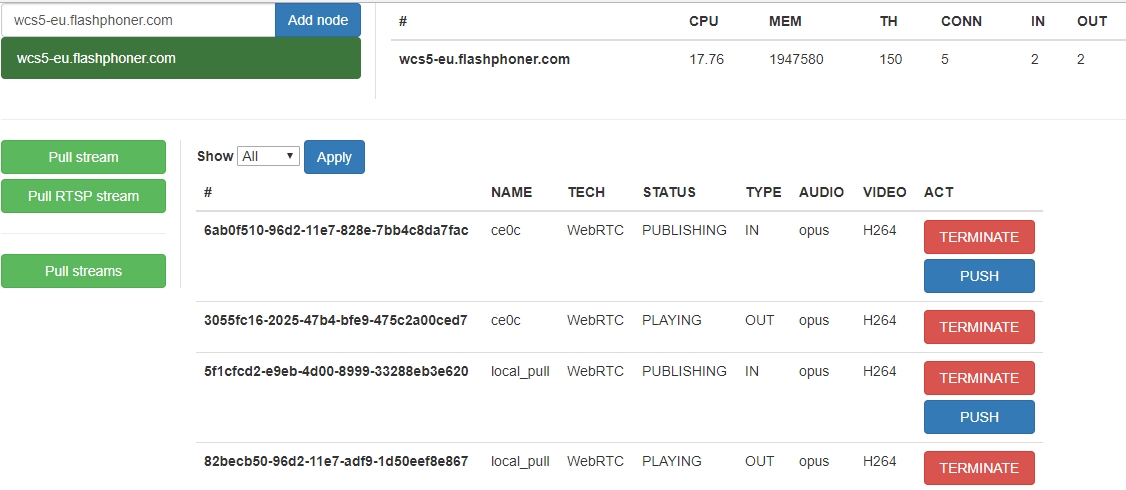

For regular stress-testing we needed a web interface that acts as a REST client and manages pull sessions. We called this interface Console. It looks as follows:

Using the Console you can fetch individual WebRTC streams using the current node as an Edge server.

Using the same interface you can add one or more nodes and run stress-testing for performance evaluation.

The work is not over yet: more testing and debugging remain to be done. In particular, we want to tinker with dynamic bitrates on the Server-to-Server WebRTC channel and compare the Server-to-Server flow with that of RTMP. But today we already have Origin-Edge on WebRTC and proper stress tests that produce a load close to the real one. And that’s excellent!

References

WCS5 – Web Call Server 5 mentioned in the article

Two Way Streaming – an example of video stream broadcast from a browser

WebRTC Player – an example of playing a video stream in a browser with ability to switch between technologies: WebRTC, MSE, Flash, WSPlayer