In this article we will try to summarize everything we know about the latency of online broadcasts from a browser's web camera: what causes it, how to avoid it, and how to make an online broadcast truly real-time.

Then, using a WebRTC implementation as an example, we will show what happens to latency in practice and how WebRTC keeps it comfortably low.

Latency

Even a delay of 1-3 seconds causes slight discomfort to both sides of a conversation. The lag is noticeable and has to be taken into account. Once you are aware of it, you talk as you would over a two-way radio: speak, then wait while the other person “rogers”. You can often see a conversation like this on television, when a field journalist reports to the studio:

– John, can you hear me? John?

– 3 seconds later…

– Loud and clear, Oprah.

– 3 seconds later…

– John, what the hell is going on? John?

Common myths about latency

Below are the three most common misconceptions about latency and video transmission quality.

I have 100 Mbps

1. I have 100 Mbps, so I should not experience any problems.

In fact, it does not matter what figures your Internet provider advertises. What matters is the real bandwidth between your device and the remote server or device, given every intermediate node the traffic passes through. The provider physically cannot guarantee 100 Mbps to an arbitrary node on the Web. Not even 1 Mbps is guaranteed. Suppose the person you are communicating with is somewhere in the Brazilian countryside, while you broadcast from a datacenter in the City of London. Whatever your own bandwidth is, the other side of the conversation does not have the same.

Therefore, the unpleasant truth is that you don’t really know the real bandwidth between you and the other person, and neither does your Internet provider: it is a floating value that changes from moment to moment and differs for every host you exchange data with.
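One way to picture this is that end-to-end bandwidth is bounded by the narrowest hop on the path, not by your access link. Below is a minimal JavaScript sketch of that idea; the per-hop figures are made-up numbers, and in reality you know neither the per-hop bandwidth nor how it changes over time.

```javascript
// Illustrative only: available bandwidth of each hop (Mbps) on one path
// at one moment. Real per-hop figures are unknown and change constantly.
function pathBandwidth(hopsMbps) {
  // End-to-end throughput cannot exceed the narrowest (bottleneck) hop.
  return Math.min(...hopsMbps);
}

// A 100 Mbps access link says little if a 3 Mbps hop sits on the path.
const londonToBrazil = [100, 1000, 400, 25, 3];
console.log(pathBandwidth(londonToBrazil)); // 3
```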

Aside from raw bandwidth, there is also jitter: variation in the timing between packets. You can smoothly download terabytes of data or score high on Speedtest, but for real-time playback with minimal latency it is critical that every packet arrives on time.
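Jitter can be quantified. RTP receivers, WebRTC ones included, estimate interarrival jitter with the smoothed formula from RFC 3550 (section 6.4.1). The JavaScript sketch below follows that formula but, as a simplifying assumption, takes send and arrival times in milliseconds rather than RTP timestamp units.

```javascript
// Interarrival jitter as defined in RFC 3550, section 6.4.1:
//   D(i-1, i) = (R_i - R_{i-1}) - (S_i - S_{i-1})
//   J(i) = J(i-1) + (|D(i-1, i)| - J(i-1)) / 16
// Simplification: send and arrival times are both in milliseconds here.
function interarrivalJitter(sendTimes, arrivalTimes) {
  let jitter = 0;
  for (let i = 1; i < sendTimes.length; i++) {
    const transitPrev = arrivalTimes[i - 1] - sendTimes[i - 1];
    const transitCurr = arrivalTimes[i] - sendTimes[i];
    const d = Math.abs(transitCurr - transitPrev);
    jitter += (d - jitter) / 16; // exponential smoothing, gain 1/16
  }
  return jitter;
}

const sent = [0, 33, 66, 99];
console.log(interarrivalJitter(sent, [50, 83, 116, 149])); // 0: evenly spaced arrivals
console.log(interarrivalJitter(sent, [50, 90, 116, 160])); // > 0: irregular arrivals
```

A high download speed and zero jitter are independent properties: the first example could be on a slow link and the second on a fast one.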

Ideally, packets would arrive exactly when they need to be decoded and displayed, with millisecond precision. But the network is not ideal; it has jitter. Packets arrive irregularly: some are late, others come in bursts, which calls for dynamic buffering to keep playback smooth. Skip too many packets, and quality drops. Buffer too many packets, and latency grows.
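The trade-off can be shown with a toy fixed playout buffer, a deliberate simplification of the adaptive jitter buffers real players use: each packet is due for playback at its send time plus a fixed playout delay, and anything arriving after that deadline is effectively lost. The packet timings below are invented for illustration.

```javascript
// Toy fixed playout buffer: packet i is played at sendTimes[i] + playoutDelay.
// A packet arriving after that moment is useless for real-time playback.
function lateRatio(sendTimes, arrivalTimes, playoutDelay) {
  let late = 0;
  for (let i = 0; i < sendTimes.length; i++) {
    if (arrivalTimes[i] > sendTimes[i] + playoutDelay) late++;
  }
  return late / sendTimes.length;
}

const sendMs = [0, 33, 66, 99, 132];
const arriveMs = [60, 95, 180, 150, 190]; // transit: 60, 62, 114, 51, 58 ms
for (const delay of [60, 80, 120]) {
  // More buffering (higher latency) means fewer late packets: 0.4, 0.2, 0
  console.log(delay, lateRatio(sendMs, arriveMs, delay));
}
```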

That is why, if someone boasts about their good, fast connection (in the context of real-time video transmission), don’t buy it. Any node on the network can, at any moment, throttle traffic or hold packets in queues, and you can do nothing about it. You can’t just tell every node along the packet's path, “Hey, don’t drop my packets, I need minimum latency now”. Well, strictly speaking, you can, by marking the packets accordingly. But there is still no guarantee that the nodes the packets pass through will honor that marking.

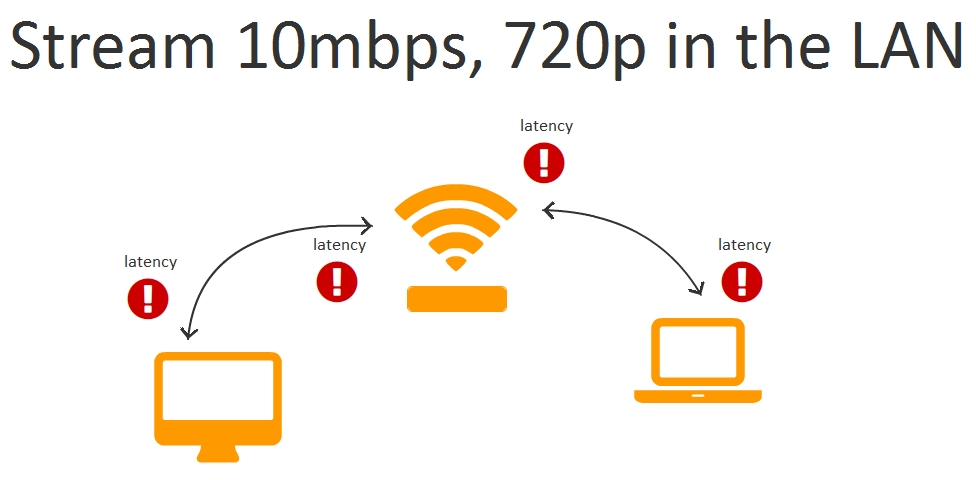

I have LAN

2. There should be no latency in my local network.

Latency is indeed less likely in a local network, simply because the traffic passes through fewer nodes. Typically there are at least three: the sending device, the router, and the receiving device.

Each of these three devices has its own operating system, buffers and network stack. What if the sending device is actively seeding torrents? Or the server's network stack or CPU is busy with other tasks? Or the office has a wireless network and several employees are watching YouTube in 720p?

If the video stream is heavy enough, say 10 Mbps, a router or another node can easily start dropping or holding packets.
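A toy model makes both behaviours visible: a growing queue is a node holding packets (adding delay), and an overflowing queue is a node dropping them. The sketch below is a simplified tail-drop queue counted in packets per tick; the capacity, queue limit and traffic figures are illustrative assumptions, not properties of any real router.

```javascript
// Toy tail-drop router queue, counted in packets per tick (all figures are
// illustrative). A growing queue means packets are held; overflow means drops.
function simulateTailDrop(arrivalsPerTick, capacity, queueLimit) {
  let queue = 0;
  let dropped = 0;
  let maxQueue = 0;
  for (const arrivals of arrivalsPerTick) {
    queue += arrivals;
    if (queue > queueLimit) {
      dropped += queue - queueLimit; // no room left: excess packets are discarded
      queue = queueLimit;
    }
    maxQueue = Math.max(maxQueue, queue);
    queue = Math.max(0, queue - capacity); // the link forwards up to `capacity`
  }
  return { dropped, maxQueue };
}

console.log(simulateTailDrop(new Array(10).fill(10), 8, 20)); // heavy stream: 8 dropped, queue peaks at 20
console.log(simulateTailDrop(new Array(10).fill(6), 8, 20)); // light stream: 0 dropped, queue peaks at 6
```

In the heavy case the queue holds up to 20 packets, that is up to 20 / 8 = 2.5 ticks of extra delay, before it starts dropping; the light stream passes through without building a backlog.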

Therefore, latency is possible in local networks too; it depends on the bitrate of the video stream and the capabilities of the network.

It is worth mentioning, however, that such problems are rare in an average local network compared to global networks and are often caused by network overloads or problems with hardware or software.

To sum up: no network, local ones included, is ideal. Any network can hold or drop video packets, and in general we have no way to influence how often, or how many, packets are dropped.

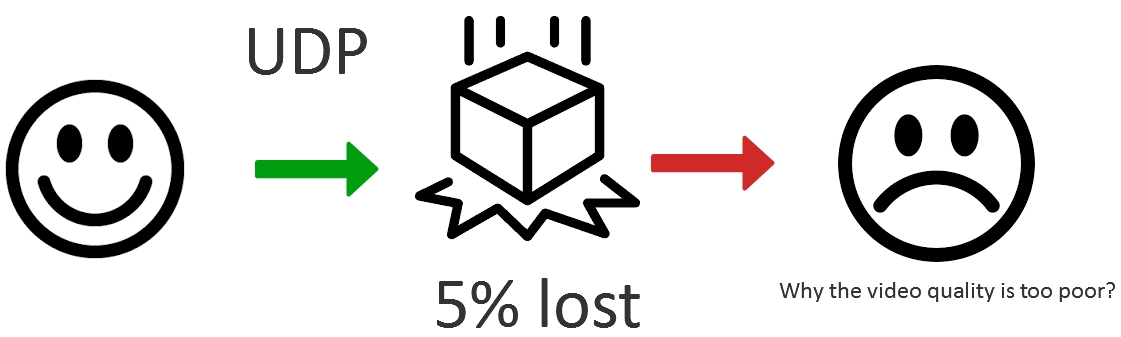

I have UDP

3. I use UDP, and UDP has no delays.

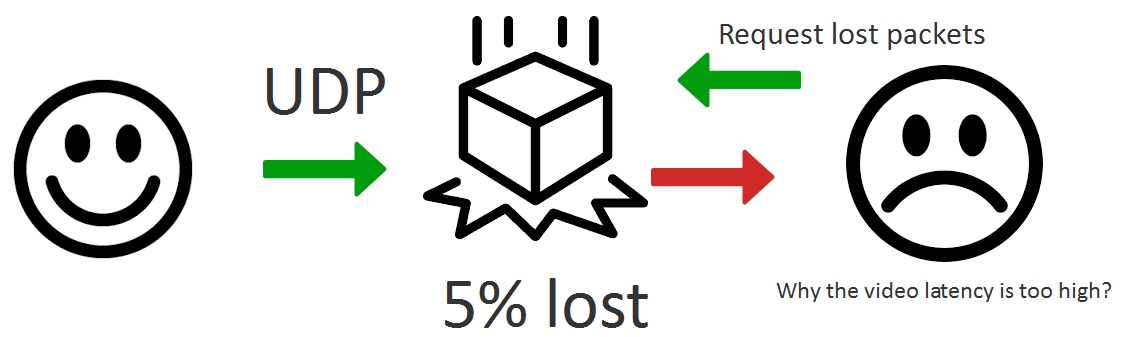

Packets sent over UDP can also be held or lost at network nodes. If the packets that do arrive are not enough to assemble and decode the video, the missing ones are requested again, which adds playback latency.

Protocols

Poetry aside, there are essentially two transport protocols browsers can work with: TCP and UDP.

TCP is a guaranteed-delivery protocol. This means that sending a packet to the network is an irreversible operation: once sent, the data stays in the network until it reaches the destination or the TCP connection times out. This is the main cause of latency when using TCP.

Indeed, if a packet is held or dropped, it is sent again and again until the recipient confirms its arrival and that confirmation reaches the sender.
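The effect is worse than a single late packet, because TCP hands data to the application strictly in order: one retransmitted packet stalls everything queued behind it (head-of-line blocking). The JavaScript sketch below contrasts this with UDP, where the receiver can use every packet that does arrive as soon as it arrives. The fixed retransmission delay is a simplifying assumption; real TCP timing depends on its retransmission timer and fast-retransmit logic.

```javascript
// Simplified model: packet i reaches the receiver at arrival[i]; a lost packet
// (index in `lost`) is recovered only after a fixed `retransmitDelay` (an
// assumption; real TCP timing depends on its retransmission timer).
function deliveryTimes(arrival, lost, retransmitDelay) {
  const tcp = [];
  const udp = [];
  let inOrderReady = 0;
  arrival.forEach((t, i) => {
    // TCP: data is released to the application only once all earlier data is in.
    const tcpArrival = lost.has(i) ? t + retransmitDelay : t;
    inOrderReady = Math.max(inOrderReady, tcpArrival);
    tcp.push(inOrderReady);
    // UDP: every packet that arrives is usable at once; a lost one is just missing.
    udp.push(lost.has(i) ? null : t);
  });
  return { tcp, udp };
}

const result = deliveryTimes([0, 33, 66, 99, 132], new Set([1]), 200);
console.log(JSON.stringify(result.tcp)); // [0,233,233,233,233]
console.log(JSON.stringify(result.udp)); // [0,null,66,99,132]
```

With TCP, one retransmission delays every packet after the lost one; with UDP, those packets are usable on time, and the application decides for itself whether the missing one is worth requesting again.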

The following protocols and higher-level technologies are based on TCP and are used to transmit live video on the web:

- RTSP (interleaved mode)

- RTMP

- HTTP (HLS)

- WebRTC over TLS

- DASH

All these protocols will inevitably show high latency when network problems occur. Note that the problems may be caused not by the sender or the recipient but by any intermediate node. That is why checking the network on both sides is often uninformative: a 5-second latency may be caused by something in between.

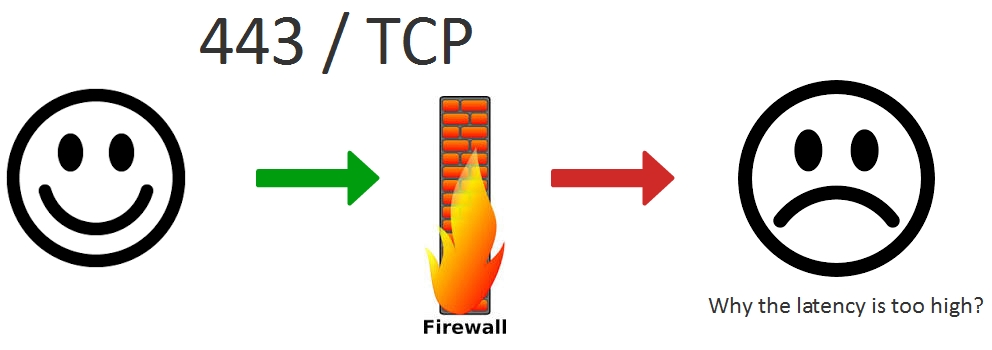

Given the above (no network is ideal, and any network can hold or drop video packets), we conclude that a minimum-latency requirement forces us to abandon TCP-based protocols.

In practice, however, abandoning TCP is not easy, and in many cases there is no alternative. For example, if a corporate firewall has closed all ports except 443 (HTTPS), the only way to get the video through is to tunnel it over HTTPS, which runs on top of TCP. In this case we have to live with unpredictable latency, but at least the video will be delivered.

UDP is a non-guaranteed delivery protocol. This means that if you send a packet to the network, it may be held or lost, but that prevents neither you from sending other packets nor the recipient from receiving and processing them.

The advantage of this approach is that a packet is not resent over and over while waiting for a guaranteed confirmation from the recipient, as happens with TCP. With UDP, the recipient decides whether to wait for all packets in the proper order or to work with what it already has. TCP gives the recipient no such freedom.

There are fewer UDP-based protocols for transferring video:

- WebRTC over UDP

- RTMFP

Here, by WebRTC we mean the entire UDP-based protocol stack of this technology: STUN, ICE, DTLS and SRTP. All of them run over UDP, and together they deliver the video via SRTP.

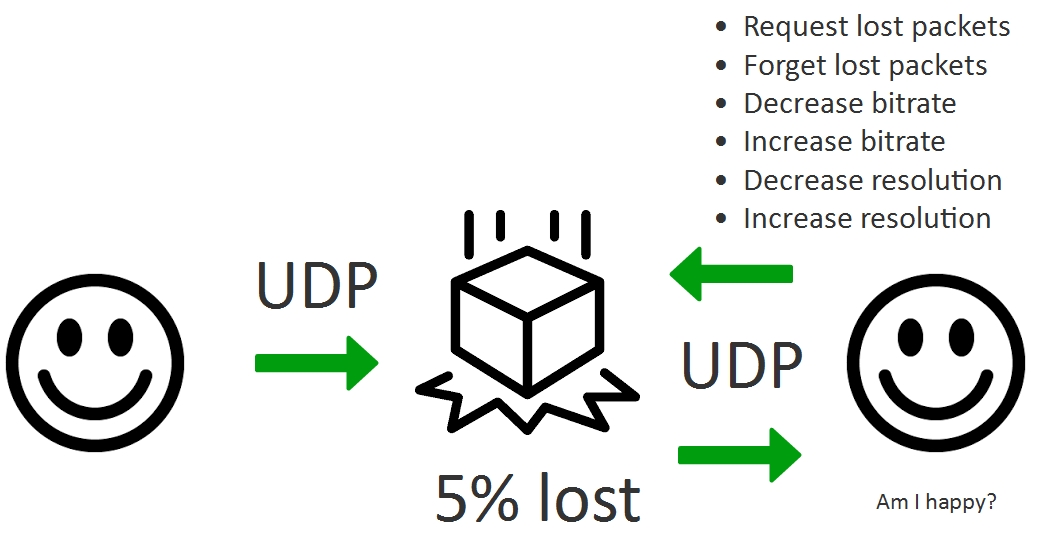

Therefore, with UDP we can deliver packets fast, with partial losses. For instance, 5% of the sent packets may be lost or temporarily held. The advantage is that we ourselves, at the application level, decide whether the remaining 95% of packets are enough to display the video correctly, or whether the missing ones should be requested again (also at the application level) as many times as needed to reach the desired video quality while keeping latency below the threshold.

As a result, UDP does not remove the need to resend packets, but it lets us organize resending more flexibly, balancing video quality (damaged by lost packets) against latency. This is impossible with TCP, because it guarantees delivery by design.
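A minimal sketch of such an application-level decision, assuming the receiver knows the current RTT and the playout deadline of the frame a lost packet belongs to: request the packet again only if the resent copy can still arrive in time. The function name and the safety margin are hypothetical; real WebRTC stacks apply their own, more elaborate rules.

```javascript
// Hypothetical resend policy for a UDP receiver: request a lost packet again
// only if the resent copy can still arrive before its playout deadline.
// Times in ms; safetyMarginMs is an illustrative tuning knob.
function shouldRequestResend(nowMs, playoutDeadlineMs, rttMs, safetyMarginMs = 10) {
  // The request travels to the sender and the packet travels back: about one RTT.
  return nowMs + rttMs + safetyMarginMs <= playoutDeadlineMs;
}

console.log(shouldRequestResend(1000, 1200, 90)); // true: worth requesting
console.log(shouldRequestResend(1000, 1050, 90)); // false: skip it, keep latency
```

With the 90 ms ping of the test server used later in this article, a packet whose frame is due in 200 ms is still worth requesting, while one due in 50 ms is not, and the player carries on without it.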

Congestion control

Above, we said that no network is ideal, so it can hold or drop packets and we cannot influence this.

Our short review of protocols was not very optimistic, and the smiley is sad, but at least we concluded that low latency requires UDP plus packet resending balanced against video quality.

Indeed, if our packets are dropped or held, this may mean we are sending too many of them per unit of time. And if we cannot order the network to give our packets higher priority, we can instead reduce the load on the intermediate nodes by sending them less traffic.

Therefore, an abstract application for low latency streaming pursues two main goals:

- Latency less than 500 ms

- Maximum possible quality for the given latency

We achieve these goals using the following methods:

| Latency less than 500 ms | Maximum possible quality |
| --- | --- |

The left column lists ways to reduce latency, and the right one lists ways to keep video quality high.

Hence, a video stream that needs both low latency and high quality cannot be static. It must react promptly to changes in the network, requesting packet resends and lowering or raising the bitrate according to network conditions at each moment.
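As an illustration of such a reaction, here is the loss-based rate rule from the IETF draft describing Google Congestion Control (draft-ietf-rmcat-gcc), the algorithm behind congestion control in Chrome's WebRTC stack: back off when more than 10% of packets are lost, probe upward by 5% when less than 2% are lost, and hold the rate in between. This JavaScript sketch covers that one rule only; real implementations combine it with a delay-based estimator, and the minimum and maximum limits here are hypothetical.

```javascript
// Loss-based rate rule of Google Congestion Control (draft-ietf-rmcat-gcc).
// Only this one rule is shown; minBps and maxBps are hypothetical limits.
function updateSendRate(rateBps, lossFraction, minBps = 100000, maxBps = 2500000) {
  let next = rateBps;
  if (lossFraction > 0.1) {
    next = rateBps * (1 - 0.5 * lossFraction); // heavy loss: back off
  } else if (lossFraction < 0.02) {
    next = rateBps * 1.05; // (almost) no loss: probe for more bandwidth
  } // between 2% and 10% loss: keep the current rate
  return Math.min(maxBps, Math.max(minBps, next));
}

// Feed it a few reporting intervals with varying loss.
let rate = 2000000;
for (const loss of [0.0, 0.2, 0.05, 0.01]) {
  rate = updateSendRate(rate, loss);
  console.log(loss, Math.round(rate));
}
```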

Here is a question people ask all over the Internet: ‘Can I stream 720p video with 500 ms latency?’ In general, this question makes no sense, because a 1280×720 picture looks completely different at 2 Mbps and at 0.5 Mbps. These are two pictures of very different quality: the first one is sharp and clear, while the second one is full of macroblocks.
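A quick way to see why is to compute how many bits the encoder can spend on each pixel of each frame. The JavaScript sketch below assumes 30 FPS, which the question does not specify; at that frame rate, 0.5 Mbps leaves a quarter of the bits per pixel that 2 Mbps does.

```javascript
// Bits the encoder can spend per pixel per frame (30 FPS is our assumption).
function bitsPerPixel(bitrateBps, width, height, fps) {
  return bitrateBps / (width * height * fps);
}

console.log(bitsPerPixel(2000000, 1280, 720, 30).toFixed(3)); // 0.072
console.log(bitsPerPixel(500000, 1280, 720, 30).toFixed(3)); // 0.018
```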

A more correct version of the question would be: ‘Can I stream good-quality 720p video with 500 ms latency at a 2 Mbps bitrate?’ The answer is yes, as long as there is a real, dedicated 2 Mbps of bandwidth between you and the destination node (not the bandwidth advertised by the provider). Without such guaranteed bandwidth, the bitrate and picture quality will vary, because every second the stream is re-adjusted to the available bandwidth to fit the target latency.

As you see, the smiley is now smiling, but it seems to be asking itself ‘Am I happy?’. Indeed, floating bitrate, resolution adjustment to the bandwidth and partial packet resending are all compromises that rule out near-zero latency and true Full HD quality in an arbitrary network. But this approach keeps quality close to the maximum possible at any given moment while keeping latency low.

WebRTC

The WebRTC technology is often criticized as “raw” and “redundant”. However, if you dig deeper into the specifics of its implementation, you find that it is designed rather well and does an adequate job of delivering audio and video in real time with low latency.

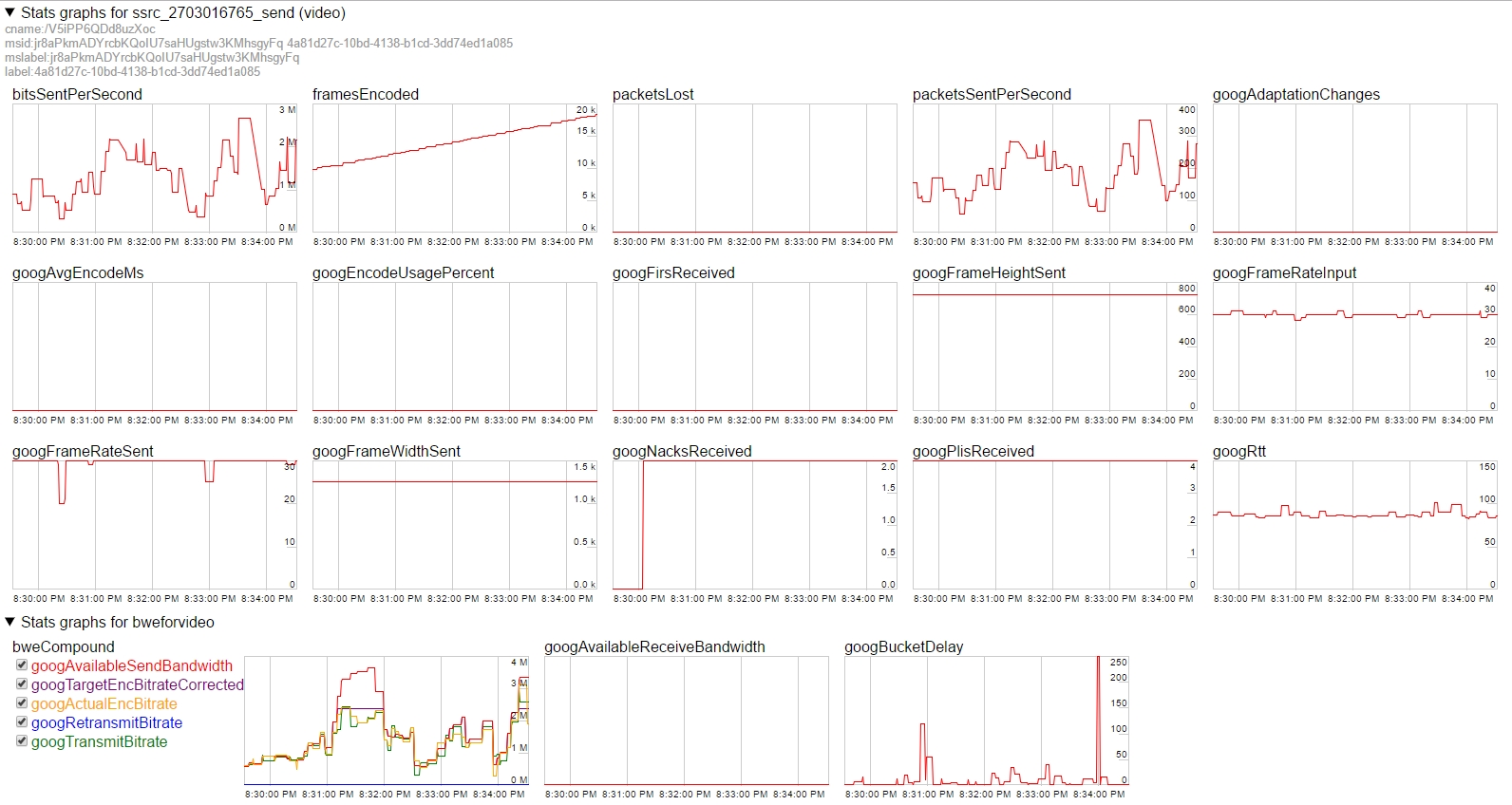

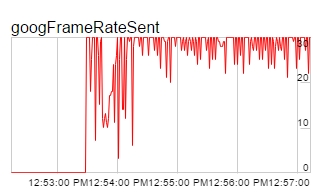

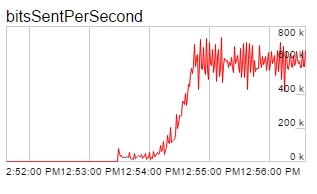

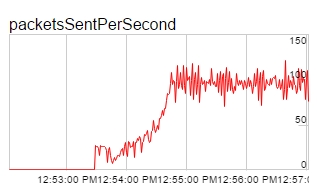

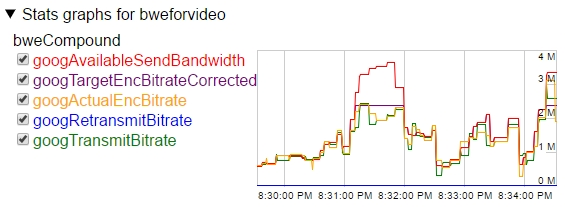

As mentioned above, since network performance can vary widely, keeping latency low requires constant correction of stream parameters such as bitrate, FPS and resolution. All of this can be observed in Chrome on the chrome://webrtc-internals page.
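The same numbers can also be read programmatically. In current browsers, RTCPeerConnection.getStats() returns standard webrtc-stats reports; the outgoing video appears as an "outbound-rtp" report with a cumulative bytesSent, a millisecond timestamp, framesPerSecond, frameWidth and frameHeight (Chrome 58, used in this article, exposed goog-prefixed equivalents instead). The JavaScript sketch below computes the sent bitrate from two such snapshots; the sample values are invented for illustration.

```javascript
// Returns the first "outbound-rtp" video report of a peer connection (browser only).
async function getOutboundVideoStats(pc) {
  const report = await pc.getStats();
  for (const stats of report.values()) {
    if (stats.type === "outbound-rtp" && stats.kind === "video") return stats;
  }
  return null;
}

// Sent bitrate between two snapshots: bytesSent is cumulative,
// timestamp is in milliseconds.
function sentBitrateBps(prev, curr) {
  const seconds = (curr.timestamp - prev.timestamp) / 1000;
  if (seconds <= 0) return 0;
  return ((curr.bytesSent - prev.bytesSent) * 8) / seconds;
}

// Invented sample values; in a browser, call getOutboundVideoStats(pc)
// about a second apart and pass the two results.
const first = { timestamp: 1000, bytesSent: 1000000, frameWidth: 1280, framesPerSecond: 30 };
const second = { timestamp: 2000, bytesSent: 1187500, frameWidth: 1280, framesPerSecond: 27 };
console.log(sentBitrateBps(first, second)); // 1500000
```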

It all starts with a web camera. Suppose the camera is good and can deliver a steady 30 FPS. Here is what happens to the real video stream:

As you can see from the diagram, even though the camera outputs video at 30 FPS, the real frame rate jumps up and down within roughly 25-31 FPS and can even hit local minima of 21-22 FPS.

At the same time, the bitrate graphs (bits per second) dip as well. This is expected: the fewer frames are encoded, the fewer packets have to be sent, and the lower the overall transfer rate of the video stream.

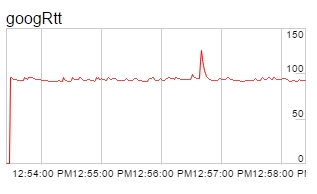

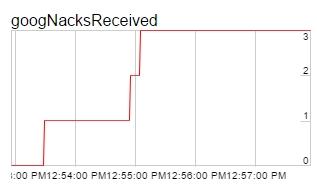

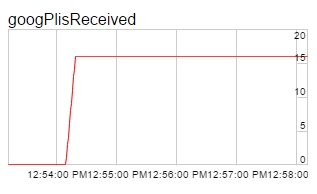

Auxiliary metrics include RTT, NACK and PLI, which influence the behaviour of the browser's WebRTC stack and thus the resulting bitrate and quality.

RTT (Round Trip Time) is essentially a “ping” to the recipient: the time it takes a packet to get there and a response to come back.
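In RTP/RTCP, on which WebRTC media transport is built, the sender derives RTT from RTCP receiver reports as described in RFC 3550, section 6.4.1: the report's arrival time minus the echoed "last SR" timestamp (LSR) minus the receiver's delay since that sender report (DLSR). The JavaScript sketch below implements that formula and reproduces the RFC's worked example; it assumes a report with a nonzero LSR, since LSR = 0 means no sender report has been received yet.

```javascript
// RTT from an RTCP receiver report, RFC 3550 section 6.4.1: RTT = A - LSR - DLSR.
// All values use the "middle 32 bits of NTP" format (16.16 fixed point, seconds).
// Assumes lsr !== 0; LSR = 0 means no sender report was received yet.
function rttSecondsFromRtcp(arrivalNtp32, lsr, dlsr) {
  const units = (arrivalNtp32 - lsr - dlsr) >>> 0; // unsigned 32-bit wraparound
  return units / 65536; // 1/65536-second units to seconds
}

// Worked example from RFC 3550: A = 46864.500 s, LSR = 46853.125 s, DLSR = 5.250 s
console.log(rttSecondsFromRtcp(0xb7108000, 0xb7052000, 0x00054000)); // 6.125
```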

NACK (negative acknowledgement) is a message the recipient of a stream sends to its sender to report lost packets.
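To decide what to NACK, the receiver looks for gaps in the 16-bit RTP sequence numbers, taking the wraparound from 65535 to 0 into account. The JavaScript sketch below shows only that gap detection; the function name is hypothetical, treating a backward jump as a reordered packet is a simplifying assumption, and packing the result into an RTCP Generic NACK (a packet ID plus a bitmask of the following 16 packets, per RFC 4585) is left out.

```javascript
// Hypothetical helper: RTP sequence numbers missing between the last received
// packet and a newly received one, i.e. the candidates to report in a NACK.
// Sequence numbers are 16-bit and wrap around from 65535 to 0.
function missingSequenceNumbers(lastSeq, newSeq) {
  const gap = (newSeq - lastSeq) & 0xffff; // forward distance modulo 2^16
  // gap 0 is a duplicate; a huge forward gap is treated as an older, reordered packet
  if (gap === 0 || gap >= 0x8000) return [];
  const missing = [];
  for (let i = 1; i < gap; i++) {
    missing.push((lastSeq + i) & 0xffff);
  }
  return missing;
}

console.log(JSON.stringify(missingSequenceNumbers(100, 104))); // [101,102,103]
console.log(JSON.stringify(missingSequenceNumbers(65534, 1))); // [65535,0]
```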

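PLI (Picture Loss Indication) is a message telling the sender that the recipient has lost data belonging to one or more pictures and cannot continue decoding; the sender typically responds by sending a new key frame.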

Based on the number of lost packets, resends and RTT, we can draw conclusions about the quality of the network at any given moment and dynamically adapt video quality to the capabilities of each particular network. WebRTC already does this, and it works.
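Packet loss reaches the sender through RTCP receiver reports as the "fraction lost" over the last reporting interval, an 8-bit fixed-point value. The JavaScript sketch below follows the computation in RFC 3550, appendix A.3, assuming the caller already tracks cumulative expected and received packet counts (with "expected" derived from extended, wraparound-aware sequence numbers).

```javascript
// "Fraction lost" field of an RTCP receiver report, per RFC 3550 appendix A.3.
// prev/curr hold cumulative counts at the start and end of the interval.
function fractionLost(prev, curr) {
  const expectedInterval = curr.expected - prev.expected;
  const receivedInterval = curr.received - prev.received;
  const lostInterval = expectedInterval - receivedInterval;
  // Duplicates can make lostInterval negative; the RFC reports 0 in that case.
  if (expectedInterval === 0 || lostInterval <= 0) return 0;
  return Math.floor((lostInterval * 256) / expectedInterval); // value / 256 = loss ratio
}

console.log(fractionLost({ expected: 1000, received: 990 }, { expected: 1500, received: 1460 })); // 15
```

Here 30 of 500 expected packets were lost, so the report carries 15, that is 15/256 ≈ 5.9% loss.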

Testing a 720p WebRTC video stream

Let’s first test a 720p WebRTC broadcast and measure its latency. We will use the WebRTC media server Web Call Server 5. The test server runs on a DigitalOcean host in the Frankfurt datacenter. Ping to the server is 90 ms. The bandwidth advertised by the Internet provider is 50 Mbps.

Parameters of the test:

| Parameter | Value |
| --- | --- |
| Server | Web Call Server 5, DigitalOcean, Frankfurt DC, ping 90 ms, 2 cores, 2 GB RAM |
| Video stream resolution | 1280×720 |
| System | Windows 8.1, Chrome 58 |
| Test | Echo test: video is sent to the server from one browser window and received in another |

For this test we used the standard media_devices.html example; the example and its source code are listed in the References section below.

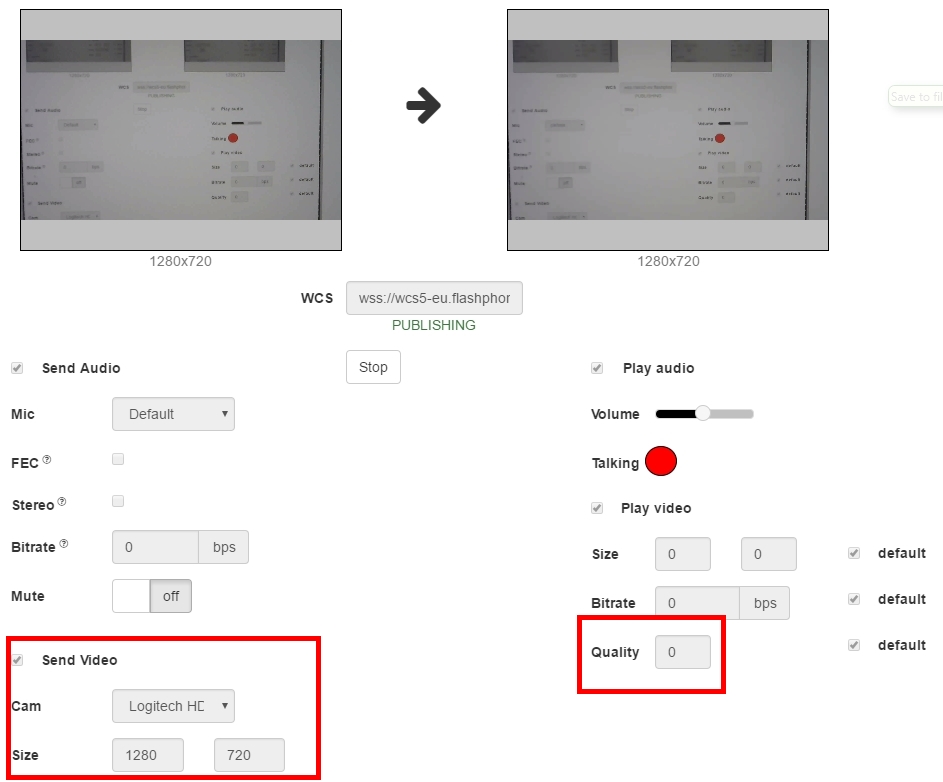

To set the stream resolution to 720p, select the camera and set 1280×720 in the Size settings. Also set the “Play Video” / “Quality” option to 0 to avoid transcoding.

As a result, we get a 720p video stream that is sent to the remote server and then played back in the right-hand window, while the page shows the PUBLISHING status.

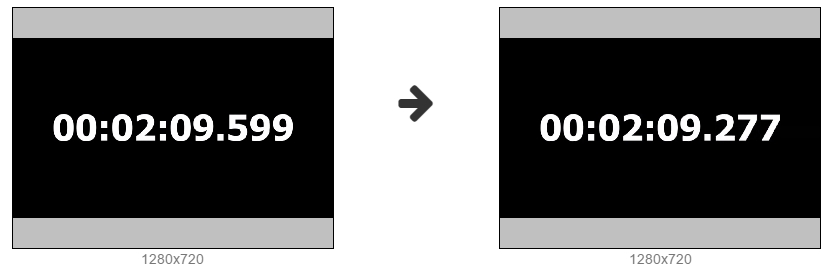

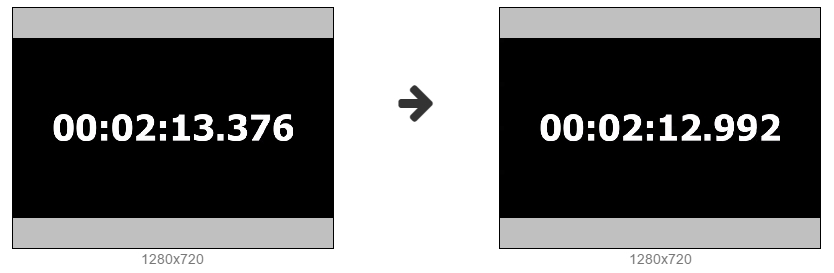

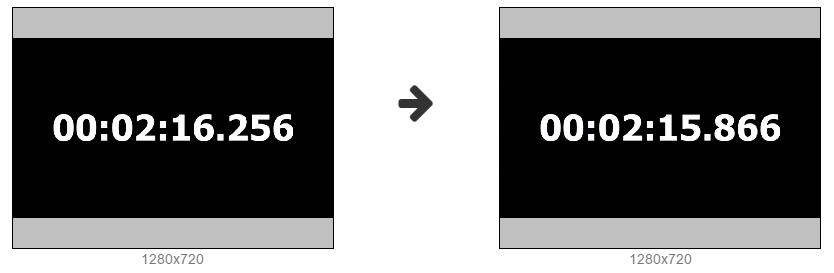

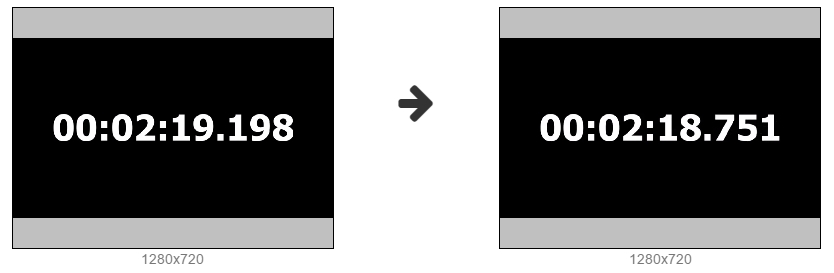

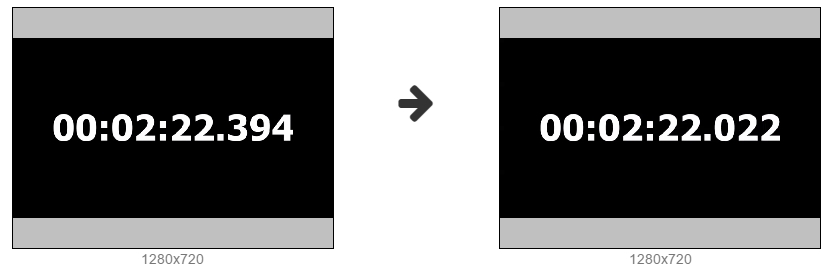

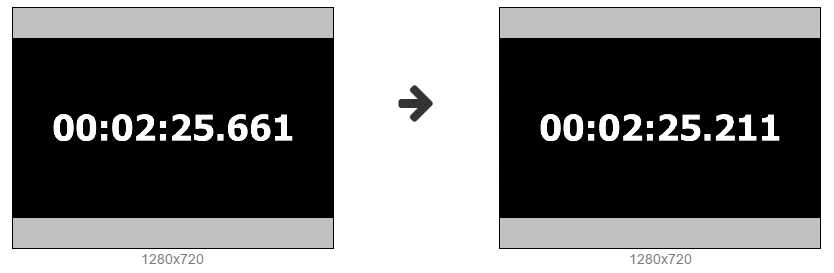

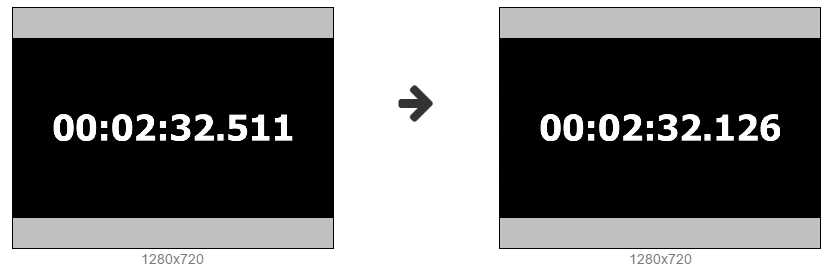

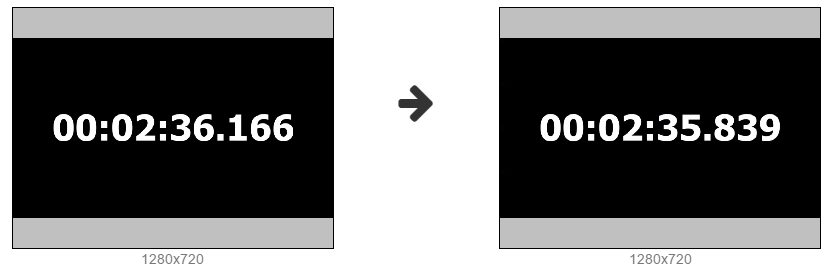

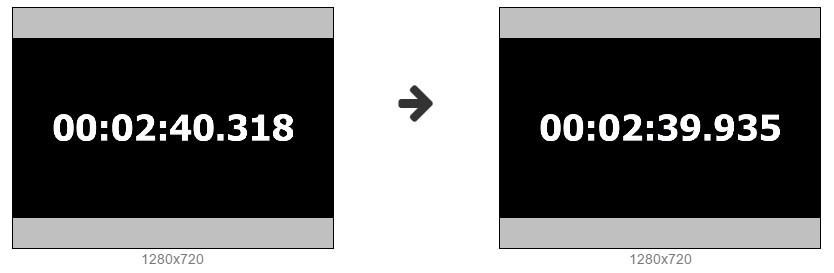

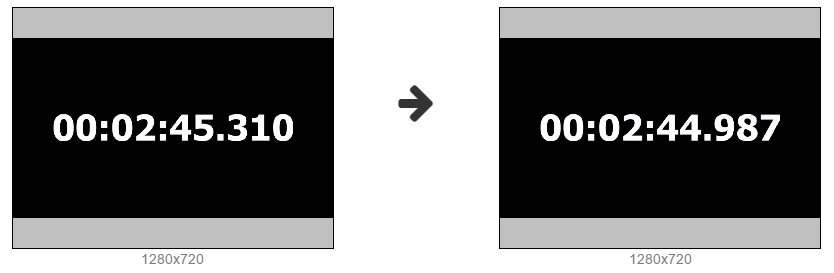

Then we start a millisecond timer from the virtual camera and take several screenshots to measure the real latency.

Screenshots 1-10

Results of the test and latency

Finally, we got the following measurements (timer readings and latency in milliseconds):

| # | Captured, ms | Displayed, ms | Latency, ms |
| --- | --- | --- | --- |
| 1 | 09599 | 09277 | 322 |
| 2 | 13376 | 12992 | 384 |
| 3 | 16256 | 15866 | 390 |
| 4 | 19198 | 18751 | 447 |
| 5 | 22394 | 22022 | 372 |
| 6 | 25661 | 25211 | 450 |
| 7 | 32511 | 32126 | 385 |
| 8 | 36166 | 35839 | 327 |
| 9 | 40318 | 39935 | 383 |
| 10 | 45310 | 44987 | 323 |
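The latency column is simply the captured timer value minus the displayed one. This short JavaScript sketch recomputes it from the table above and summarizes the ten samples; the mean, minimum and maximum are derived from those measurements, not from additional data.

```javascript
// Timer readings from the table above, in ms.
const captured = [9599, 13376, 16256, 19198, 22394, 25661, 32511, 36166, 40318, 45310];
const displayed = [9277, 12992, 15866, 18751, 22022, 25211, 32126, 35839, 39935, 44987];

// Latency = captured minus displayed timer value.
const latencies = captured.map((c, i) => c - displayed[i]);
const mean = latencies.reduce((sum, x) => sum + x, 0) / latencies.length;
const min = Math.min(...latencies);
const max = Math.max(...latencies);

console.log(latencies.join(", ")); // 322, 384, 390, 447, 372, 450, 385, 327, 383, 323
console.log(mean, min, max); // 378.3 322 450
```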

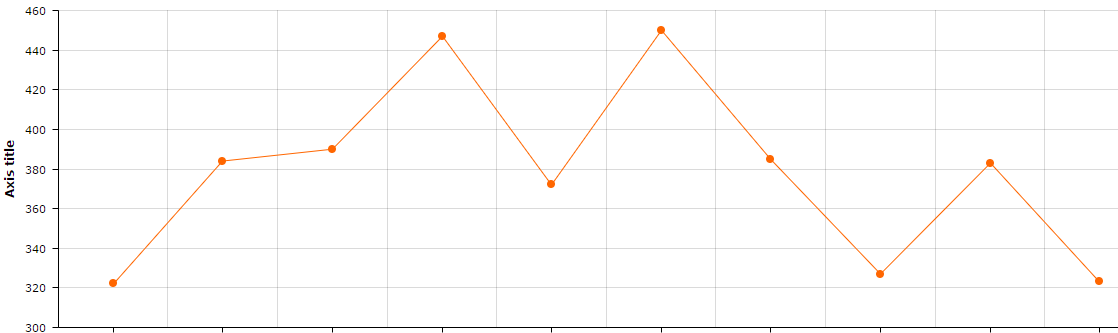

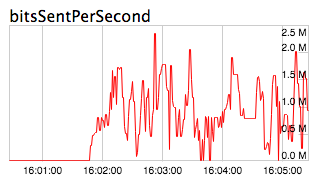

The latency graph for our 720p video stream in the one-minute test looks like this:

The test of 720p broadcasting demonstrated good results with visual latency of about 300-450 milliseconds.

Graphs of WebRTC bitrate

Now, let’s look at what happens to the video stream at the WebRTC level. To do this, we run a high-resolution animation instead of a timer to see how WebRTC handles higher bitrates.

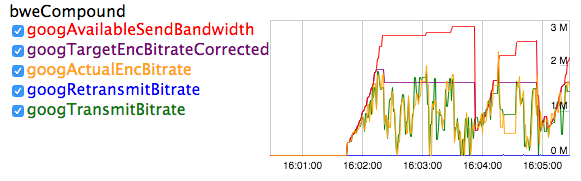

Below are graphs of this WebRTC broadcast.

The graphs show that the bitrate varies within 1-2 Mbps. The reason is that the server automatically detects insufficient channel bandwidth and periodically asks Chrome to reduce the bitrate. The red line is the bitrate cap, googAvailableSendBandwidth, which changes dynamically. The green line is the actual transmitted bitrate, googTransmitBitrate.

This is server-side congestion control at work: to avoid network congestion and packet loss, the server constantly corrects the bitrate, and the browser follows the server's commands.

At the same time, the width and height graphs remain stable: the sent width is 1280 and the height is 720. That is, the resolution does not change, and the stream is adjusted only by lowering the encoding bitrate.

CPU overuse detection

To keep the resolution constant during our tests, we turned off Chrome's CPU overuse detector (googCpuOveruseDetection).

The CPU detector monitors CPU load and, when a threshold is reached, triggers events that make Chrome lower the resolution. By disabling it, we allowed the CPU to be overused but locked the resolution.

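```javascript
// Constraints used in our tests. googCpuOveruseDetection is a
// Chrome-specific (goog-prefixed) legacy constraint; false disables
// the CPU overuse detector. The variable name is illustrative.
const streamOptions = {
  mediaConnectionConstraints: { mandatory: { googCpuOveruseDetection: false } }
};
```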

With the CPU detector enabled, the graphs look smoother, but the resolution of the video stream keeps going up and down.

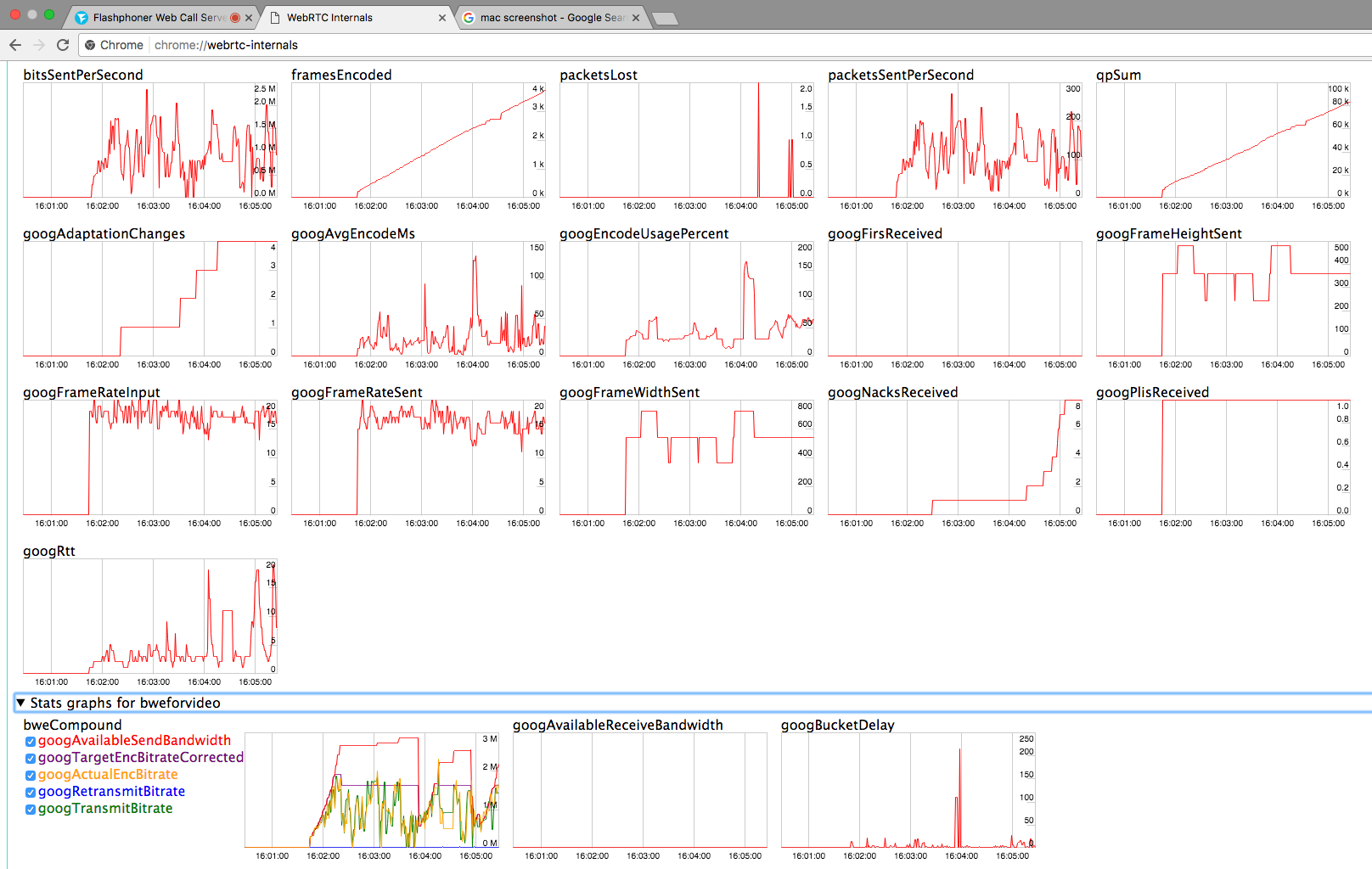

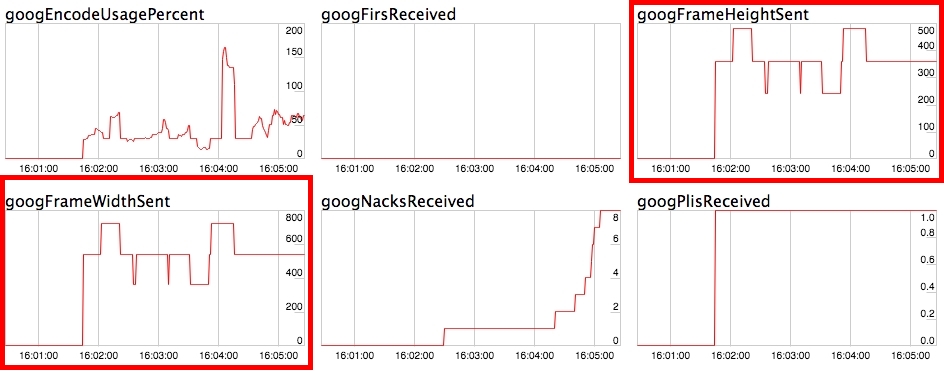

To test adaptation, we need an older computer: a 2011 Mac Mini with a 1.7 GHz Core i5 and Chrome 58. We use the same animation for the test.

Note how, at the very beginning of the stream, the video resolution dropped to 540×480.

The resulting graphs:

Here we can see how the width and height of the picture change, so the video resolution is not constant:

And these graphs show what accompanied the changes in width and height.

The googAdaptationChanges parameter shows the number of adaptations Chrome initiated during streaming. The more adaptations occur, the more often the resolution and bitrate change while the video is streamed.

As for the bitrate, its graph is more sawtooth-shaped, even though the server never lowered the cap.

Such aggressive changes in the bitrate are caused by two things:

- Adaptations (googAdaptationChanges) triggered by Google Chrome because of increased CPU load.

- Use of the H.264 codec, which encodes differently from VP8 and can sharply reduce the encoding bitrate depending on scene content.

Conclusion

Here is what we did:

- We measured the latency of a WebRTC broadcast through a remote server and determined its average value.

- We showed how the bitrate of a 720p VP8 video stream behaves with CPU adaptation disabled, and how it adapts to network conditions.

- We saw the bitrate cap set by the server.

- We tested a WebRTC broadcast on a less powerful client machine, with CPU adaptation enabled and the H.264 codec, and saw how the resolution of the video stream is corrected dynamically.

- We also identified the CPU metrics that influence resolution and the number of adaptations.

Now we can answer a few questions posed at the beginning of this article:

Question: How do I run a WebRTC broadcast with minimum latency?

Answer: Just run it. The resolution and bitrate of the stream are adjusted automatically to values that keep latency minimal. For example, if the resolution is set to 1280×720, the bitrate may drop to 1 Mbps and the resolution to 950×540.

Question: What should I do to run a WebRTC broadcast with minimum latency and the stable resolution of 720p?

Answer: The user’s channel must have at least 1 Mbps of real bandwidth, and CPU adaptation must be turned off. In this case the resolution stays stable, and only the bitrate is adjusted.

Question: What will happen to a 720p video stream with only 200 kbps of bandwidth?

Answer: You will get a picture smeared with macroblocks and a very low frame rate (around 10 FPS). Latency will remain low, but the video quality will be very poor.

References

Media Devices – the test example of a WebRTC broadcast that we used to test latency.

Source – the source code of the test broadcasting example.

Web Call Server – WebRTC server.

chrome://webrtc-internals – WebRTC graphs.