“Oh no, this HLS is killing me!” Igor said, pouring some hot tea into a thick-walled mug. “Customers are complaining about freezes again, and we released another round of fixes only yesterday.”

Browser as a streamer and player

Today, browsers can capture video from a webcam, compress it with codecs, and send it to the network, i.e. function as a video encoder. Simply put, a modern browser contains a media module (encoder + streamer + decoder + player) that has a JavaScript API. This subsystem, or library if you like, built into the browser is called WebRTC – Web Real-Time Communication.

Each browser may have nuances in implementing the WebRTC stack, which can lead to incompatibility with other browsers in some cases, but in general, everything works quite well.

Since the browser is both a streamer and a player, it can perform the following corresponding functions:

- send a video stream to the server and record it

- send a video stream and broadcast it

- send a p2p video stream

- send its own video stream and simultaneously play someone else’s (video chat or video conference)

- play a video stream from any source that is converted to WebRTC on the server side (for example, from an RTSP camera or RTMP stream)

This is all done using the JavaScript API. If you simplify the JavaScript code example as much as possible, it might look something like this:

//we capture and publish the stream to the server on one browser tab

session.createStream({name: "mystream"}).publish();

//we play the stream from the server on another browser tab

session.createStream({name: "mystream"}).play();

Where there’s no WebRTC, there is HLS

All seems to be well – we publish streams, play streams in browsers, including mobile, and even in iOS Safari. Everything works until there is a browser that does not support WebRTC, which is quite common today. As a rule, where WebRTC does not work well, HLS does. This is especially true on Apple devices like Apple TV consoles.

A completely natural need arises – to play streams in both HLS and WebRTC, i.e. provide the developer with a choice of how to play the user stream. And then it all depends on the source of the stream. The fact is that HLS was conceived many years ago mainly in order to play VOD and Live broadcasts. Back then, there were no serious requirements for real-time playing, delays, codecs, etc.

The HLS task was simple and clear – to deliver video to the device in good quality, and HLS copes with this function well to this day. The problem is that the HLS specification expects a strict and smooth video stream, while the encoder on the WebRTC side is designed to dynamically encode and change the bandwidth, FPS, and other parameters of the stream on the go for realtime needs.

If we depacketize a WebRTC stream and simply convert it to HLS, this will work, but not everywhere. In particular, it may not work in the native HLS players of iOS Safari, macOS Safari, and Apple TV. Therefore, if you see freezes on an iPhone or Apple TV when working with a WebRTC>HLS converter, do not be alarmed. This is most likely the cause.

Why it happens: the native Apple player for HLS has its own idea of what the incoming stream should be like. Apple’s specs mention the H.264 codec, AAC audio at 128 kbps, a GOP of 30, etc. Other requirements are implicit: they are not in the specifications but are embedded in the implementation of the native players, and they can only be determined empirically. For example, the server must start giving out HLS segments immediately after the m3u8 playlist is returned, the stream must keep the same H.264 bitstream configuration throughout, etc. If you miss something, you’ll get freezing.
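To get a feel for how far a stream is from the "strict and smooth" shape that native players expect, one can inspect frame metadata before packaging it into HLS. The following is only a minimal sketch and not WCS code: the frame object shape (`ts` in milliseconds, `key`, `width`, `height`) and the function name `analyzeStream` are assumptions made for illustration.

```javascript
// Hypothetical frame shape: { ts: milliseconds, key: boolean, width, height }.
// Reports properties that tend to matter for native HLS players:
// keyframe interval (GOP) stability and resolution changes mid-stream.
function analyzeStream(frames) {
  const gopLengths = [];
  const resolutions = new Set();
  let framesInGop = 0;
  let seenKey = false;

  for (const frame of frames) {
    resolutions.add(`${frame.width}x${frame.height}`);
    if (frame.key) {
      // A new keyframe closes the previous complete GOP, if there was one.
      if (seenKey) gopLengths.push(framesInGop);
      seenKey = true;
      framesInGop = 0;
    }
    // Frames before the first keyframe are not counted as part of any GOP.
    if (seenKey) framesInGop += 1;
  }

  const durationSec = frames.length > 1
    ? (frames[frames.length - 1].ts - frames[0].ts) / 1000
    : 0;

  return {
    gopLengths, // complete GOPs only; the trailing GOP is ignored
    constantGop: new Set(gopLengths).size <= 1,
    constantResolution: resolutions.size <= 1,
    averageFps: durationSec > 0 ? (frames.length - 1) / durationSec : 0,
  };
}
```

A stream for which `constantGop` or `constantResolution` is false is a likely candidate for freezing in a native player, although the exact tolerances can only be found empirically.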

Fighting freezing in native players

Thus, plain WebRTC depacketization followed by HLS packetization is generally not enough. In the Web Call Server (WCS) streaming video server, we solve the problem in two ways, and we offer a third as an alternative:

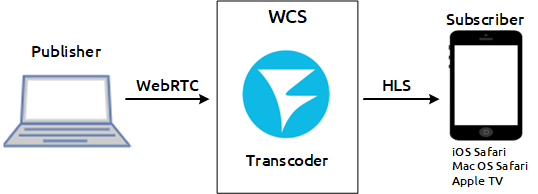

1) Transcoding.

This is the most reliable way to align a WebRTC stream to HLS requirements, set the desired GOP, FPS, etc. However, in some cases, transcoding is not a good solution; for example, transcoding 4k streams of VR video is indeed a bad idea. Such weighty streams are very expensive to transcode in terms of CPU time or GPU resources.

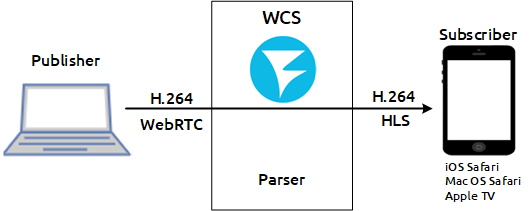

2) Adapting and aligning WebRTC flow on the go to match HLS requirements.

These are special parsers that analyze the H.264 bitstream and adjust it to match the features/bugs of Apple’s native HLS players. Admittedly, non-native players like video.js and hls.js are more tolerant of WebRTC-originated streams with a dynamic bitrate and FPS, and they keep playing where Apple’s reference HLS implementation freezes.

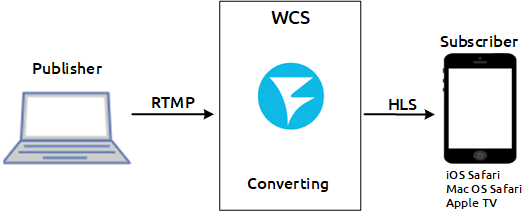

3) Using RTMP as the stream source instead of WebRTC.

Despite the fact that Flash Player is already obsolete, the RTMP protocol is still actively used for streaming; take OBS Studio, for example. We must acknowledge that RTMP encoders generally produce more even streams than WebRTC and therefore cause practically no freezing in HLS, i.e. RTMP>HLS conversion looks much more suitable in terms of freezing, including in native HLS players. Therefore, if streaming is done from a desktop with OBS, it is better to use RTMP as the source for conversion to HLS. If the source is the Chrome browser, RTMP cannot be used without installing plugins, and only WebRTC works in this case.

All three methods described above have been tested and work, so you can choose based on the task.

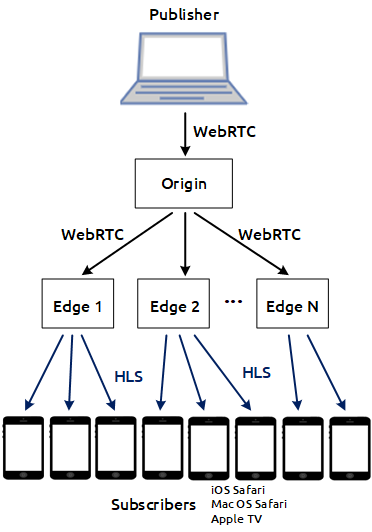

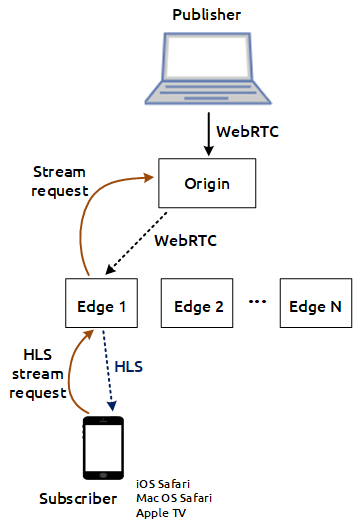

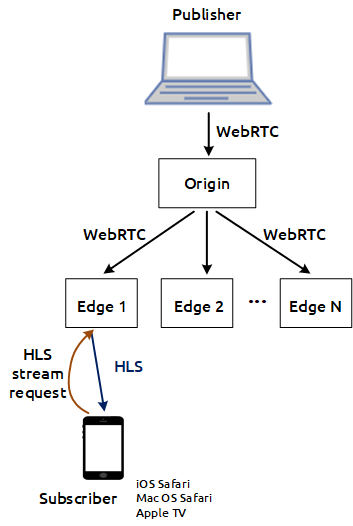

WebRTC to HLS on CDN

A distributed system brings some unpleasant surprises of its own. Here there are several WebRTC stream delivery servers between the WebRTC stream source and the HLS player, namely a CDN, in our case based on WCS servers. It looks like this: there is Origin, a server that accepts the WebRTC stream, and there are Edge servers that distribute this stream, including via HLS. There can be many servers, which enables horizontal scaling of the system. For example, 1000 HLS servers can be connected to one Origin server; in this case, system capacity scales 1000 times.

The problem has already been highlighted above; it usually arises in native players: iOS Safari, macOS Safari, and Apple TV. By native we mean a player that works with the playlist URL specified directly in the tag, for example <video src="https://host/test.m3u8"/>. As soon as the player requests a playlist (and this is actually the first step in playing an HLS stream), the server must immediately, without delay, begin to send out HLS video segments. If the server does not start to send segments immediately, the player will decide that it has been cheated and stop playing. This behavior is typical of Apple’s native HLS players, but we can’t just tell users “please do not use iPhone, Mac, and Apple TV to play HLS streams.”
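The difference between native and non-native playback can be sketched on the page side as follows. This is an illustration under assumptions rather than a recommended player setup: the function name `attachHls` is made up, `Hls` stands for the hls.js constructor (using its `Hls.isSupported()`, `loadSource()` and `attachMedia()` calls), and preferring hls.js over the native player is a design choice of this sketch, motivated by the fact that non-native players tolerate uneven streams better.

```javascript
// MIME type that browsers with a native HLS player report as playable.
const HLS_MIME = 'application/vnd.apple.mpegurl';

// `video` is an HTMLVideoElement; `Hls` is the hls.js constructor
// (pass undefined if the library is not loaded on the page).
// Returns a label for the player that ended up handling the playlist.
function attachHls(video, url, Hls) {
  if (Hls && Hls.isSupported()) {
    // Non-native, MSE-based player.
    const hls = new Hls();
    hls.loadSource(url);
    hls.attachMedia(video);
    return 'hls.js';
  }
  if (video.canPlayType(HLS_MIME)) {
    // Native player: same effect as <video src="https://host/test.m3u8"/>.
    video.src = url;
    return 'native';
  }
  return 'unsupported';
}
```

Where hls.js is not supported and only the native path is available, the server-side requirements described in this article apply in full.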

So, when you try to play an HLS stream on the Edge server, the server should immediately start returning segments, but how is it supposed to do that if it doesn’t have the stream? Indeed, at the moment you try to play it, there is no stream on this server. CDN logic works on the principle of Lazy Loading: it won’t load the stream to a server until someone requests this stream on that server. This creates the problem of the first connected user: the first one who requests the HLS stream from the Edge server, and has the imprudence to do so from the default Apple player, will get freezing, because it takes some time to order the stream from the Origin server, get it on Edge, and begin HLS slicing. Even if that takes only three seconds, it is still too long. It will freeze.

Here we have two possible solutions: one is OK, and the other is less so. One could abandon the Lazy Loading approach in the CDN and send traffic to all nodes, regardless of whether they have viewers or not. This solution may suit those who are not limited in traffic and computing resources. Origin will send traffic to all Edge servers, so all servers and the network between them will be constantly loaded. Perhaps this scheme would be suitable only for some specific solutions with a small number of incoming streams. When replicating a large number of streams, such a scheme is clearly inefficient in terms of resources. And if you recall that we are only solving the “problem of the first connected user from the native browser,” then it becomes clear that it is not worth it.

The second option is more elegant, but it is also merely a workaround. We give the first connected user a video picture, but this is still not the stream that they want to see – this is a preloader. Since we must give them something immediately, but we don’t have the source stream (it is still being ordered and delivered from Origin), we decide to ask the client to wait a bit and show them a preloader video with moving animation. The user waits a few seconds while the preloader spins, and when the real stream finally arrives, the user starts getting the real stream. As a result, the first user will see the preloader, and those who connect after that will see the regular HLS stream right away, coming from the CDN operating on the principle of Lazy Loading. Thus, the engineering problem has been solved.
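The Edge-side logic of this workaround can be sketched roughly as follows. This is a simplified model and not the WCS implementation: the class `EdgeHlsSource`, its method `segmentSourceFor`, and the `requestFromOrigin(name, onReady)` callback are all hypothetical names introduced for illustration.

```javascript
// Hypothetical Edge-side bookkeeping for lazily loaded streams.
class EdgeHlsSource {
  // requestFromOrigin(name, onReady) is assumed to start pulling the stream
  // and to call onReady() once it is on Edge and HLS slicing has begun.
  constructor(requestFromOrigin) {
    this.requestFromOrigin = requestFromOrigin;
    this.state = new Map(); // stream name -> 'pulling' | 'ready'
  }

  // Called for every HLS request. It never waits for Origin: the caller
  // always gets something to serve right away.
  segmentSourceFor(name) {
    if (!this.state.has(name)) {
      // First viewer on this Edge: order the stream exactly once.
      this.state.set(name, 'pulling');
      this.requestFromOrigin(name, () => this.state.set(name, 'ready'));
    }
    return this.state.get(name) === 'ready' ? 'stream' : 'preloader';
  }
}
```

The important property is that the request handler never blocks: while the stream is in the `pulling` state, every request is answered with preloader segments, and once the state becomes `ready`, the same call returns the real stream.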

But not yet fully solved

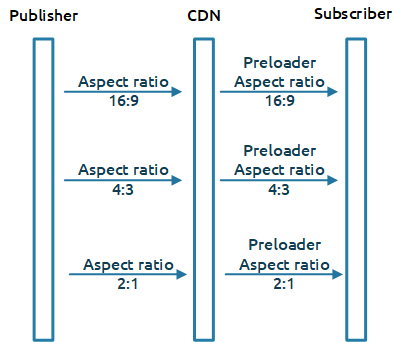

It would seem that everything works well. The CDN is functioning, the HLS streams are loaded from the Edge servers, and the issue of the first connected user is solved. But here is another pitfall: we give the preloader in a fixed aspect ratio of 16:9, while streams of any format can enter the CDN: 16:9, 4:3, 2:1 (VR video). And this is a problem, because if you send a preloader in 16:9 format to the player, and the ordered stream is 4:3, then the native player will once again freeze.

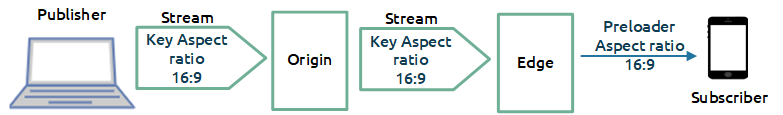

Therefore, a new task arises – you need to know with what aspect ratio the stream enters the CDN and give the same ratio to the preloader. A feature of WebRTC streams is the preservation of aspect ratio when changing resolution and transcoding – if the browser decides to lower the resolution, it lowers it in the same ratio. If the server decides to transcode the stream, it maintains the aspect ratio in the same proportion. Therefore, it makes sense that if we want to show the preloader for HLS, we show it in the same aspect ratio in which the stream enters.
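Matching a preloader to the incoming stream could look like the sketch below. It assumes a fixed set of pre-rendered preloader variants for the three ratios mentioned above and a small tolerance for resolutions that are not exact multiples (for example 854x480); the names `PRELOADERS` and `pickPreloader` and the 2% tolerance are assumptions of this example, and how much mismatch a native player actually tolerates has to be checked empirically.

```javascript
// Hypothetical pre-rendered preloader variants, keyed by aspect ratio.
const PRELOADERS = [
  { name: '16:9', ratio: 16 / 9 },
  { name: '4:3', ratio: 4 / 3 },
  { name: '2:1', ratio: 2 / 1 },
];

// Returns the name of the preloader whose aspect ratio is closest to the
// stream's, or null if none is within the relative tolerance.
function pickPreloader(width, height, tolerance = 0.02) {
  const actual = width / height;
  let best = null;
  for (const candidate of PRELOADERS) {
    const diff = Math.abs(actual - candidate.ratio) / candidate.ratio;
    if (diff <= tolerance && (best === null || diff < best.diff)) {
      best = { name: candidate.name, diff };
    }
  }
  return best ? best.name : null;
}
```

Because the aspect ratio survives resolution changes and transcoding, the choice made for the first key frame stays valid for the lifetime of the stream.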

The CDN works as follows: when traffic enters the Origin server, it informs other servers on the network, including Edge servers, about the new stream. The problem is that at this point, the resolution of the source stream may not yet be known. The resolution is carried by the H.264 bitstream configs that arrive along with the key frame. Therefore, an Edge server may receive information about a stream without knowing its resolution and aspect ratio, which prevents it from generating the preloader correctly. For this reason, the presence of the stream in the CDN should be signaled only once a key frame has arrived: this is guaranteed to give the Edge server the size information and allows the correct preloader to be generated, preventing the “first connected viewer” issue.
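The rule "announce only after a key frame" can be modeled with a few lines of code. This is a sketch under assumptions, not the actual CDN signaling: the class `OriginAnnouncer`, the `announce` callback, and the frame fields (`key`, `width`, `height`) are hypothetical.

```javascript
// Hypothetical Origin-side gate: a stream is announced to the CDN only
// after the first key frame, when its resolution is known.
class OriginAnnouncer {
  // announce(info) is assumed to notify Edge servers about a new stream.
  constructor(announce) {
    this.announce = announce;
    this.announced = new Set();
  }

  // Called for every incoming video frame. Returns true if this frame
  // triggered the announcement.
  onFrame(streamName, frame) {
    if (this.announced.has(streamName)) return false;
    // Delta frames carry no size information, so keep waiting.
    if (!frame.key || !frame.width || !frame.height) return false;
    this.announced.add(streamName);
    this.announce({ name: streamName, width: frame.width, height: frame.height });
    return true;
  }
}
```

With this gate in place, every stream an Edge server learns about already comes with a width and height, so a preloader of the right shape can be prepared before the first viewer arrives.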

Summary

Converting WebRTC to HLS generally results in freezing when played in default Apple players. The problem is solved by transcoding, by analyzing and adjusting the H.264 bitstream to meet Apple’s HLS requirements, or by migrating to the RTMP protocol and an RTMP encoder as the stream source. In a distributed network with Lazy Loading of streams, there is the problem of the first connected viewer, which is solved by using a preloader and determining the resolution on the Origin server side, the entry point of the stream into the CDN.