Media Source Extensions (MSE) is a browser API that lets JavaScript feed media data to the HTML5 audio and video elements for playback.

To play a chunk of audio or video, the page feeds this chunk to the corresponding element through the MSE API. JavaScript HLS players are built on MSE: they pass HLS fragments to MSE, and the browser plays them.
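The following minimal sketch shows the general MSE pattern in browser JavaScript: attach a `MediaSource` to a `<video>` element, create a `SourceBuffer` for a codec string, and append chunks one at a time. It assumes the chunks are fragmented MP4 segments (initialization segment first) and that `MIME_CODEC` matches the stream; `AppendQueue` and `attachMediaSource` are hypothetical helper names, not part of any player library.

```javascript
// Example codec string (assumption): H.264 Constrained Baseline 3.0 + AAC-LC in MP4.
const MIME_CODEC = 'video/mp4; codecs="avc1.42E01E, mp4a.40.2"';

// Serializes appends: a SourceBuffer rejects appendBuffer() while
// `updating` is true, so queued chunks wait for the "updateend" event.
class AppendQueue {
  constructor(sourceBuffer) {
    this.sourceBuffer = sourceBuffer;
    this.pending = [];
    sourceBuffer.addEventListener("updateend", () => this.flush());
  }

  // Queue a chunk (ArrayBuffer or typed array) and append it when possible.
  push(chunk) {
    this.pending.push(chunk);
    this.flush();
  }

  flush() {
    if (this.sourceBuffer.updating || this.pending.length === 0) return;
    this.sourceBuffer.appendBuffer(this.pending.shift());
  }
}

// Browser-only: attach a MediaSource to a <video> element and resolve with
// an AppendQueue once the source is open and the SourceBuffer exists.
function attachMediaSource(video, mimeCodec = MIME_CODEC) {
  return new Promise((resolve) => {
    const mediaSource = new MediaSource();
    const objectUrl = URL.createObjectURL(mediaSource);
    mediaSource.addEventListener(
      "sourceopen",
      () => {
        URL.revokeObjectURL(objectUrl); // the element has already opened the source
        resolve(new AppendQueue(mediaSource.addSourceBuffer(mimeCodec)));
      },
      { once: true },
    );
    video.src = objectUrl;
  });
}
```

In a page this might be used as `attachMediaSource(document.querySelector("video")).then((queue) => queue.push(initSegment));`, where `initSegment` is a hypothetical ArrayBuffer holding the stream's initialization segment. A `SourceBuffer` throws an `InvalidStateError` if `appendBuffer()` is called while a previous append is still in progress, which is why the sketch queues chunks until `updateend` fires.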

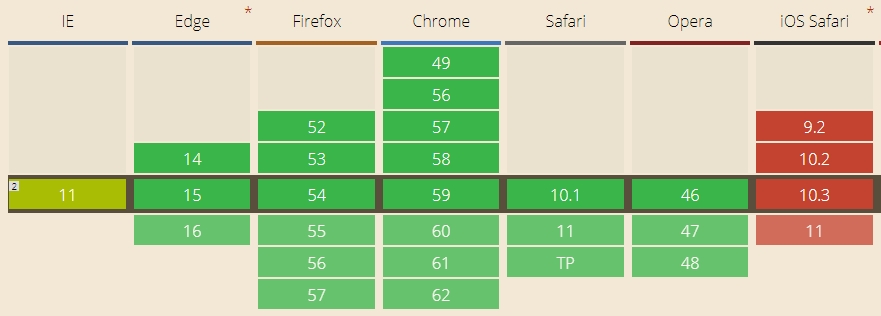

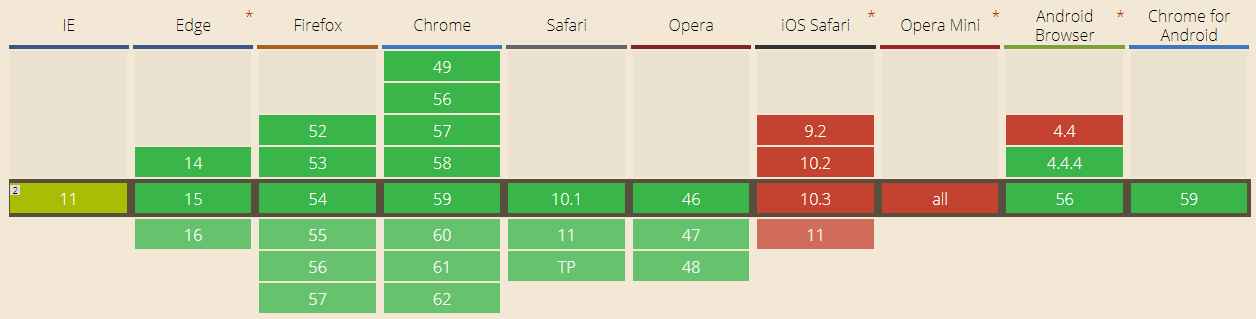

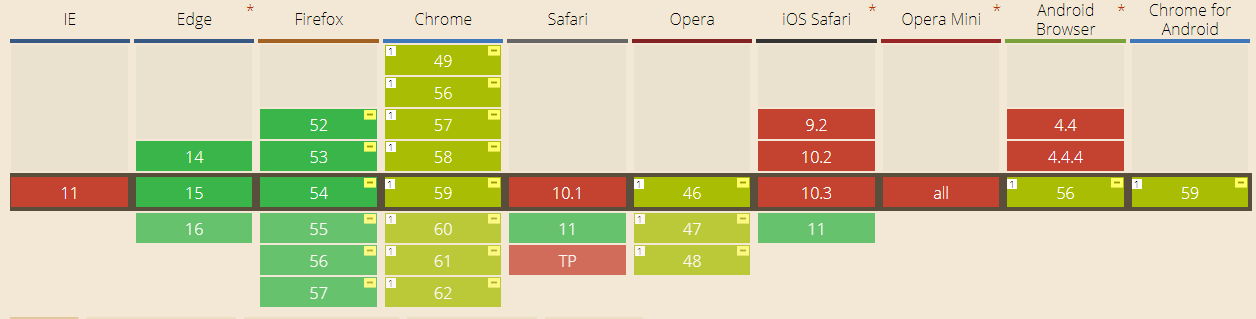

Let’s take a closer look at the Can I Use support table.

At the time of writing, MSE is supported in the latest versions of all major browsers except iOS Safari and Opera Mini. In this respect, it is very similar to WebRTC.
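Because support differs between browsers, a player usually checks for MSE before using it. This sketch relies only on the standard `MediaSource.isTypeSupported()` static method; the codec string (H.264 Constrained Baseline level 3.0 plus AAC-LC) is an example, and `supportsMse` is a hypothetical helper name.

```javascript
// Example codec string (assumption): H.264 Constrained Baseline 3.0 + AAC-LC.
const H264_AAC = 'video/mp4; codecs="avc1.42E01E, mp4a.40.2"';

// Hypothetical helper: true only if the MSE API exists and the browser
// reports that it can play the given MIME type and codecs through it.
function supportsMse(mimeCodec = H264_AAC) {
  // Browsers without MSE (such as iOS Safari at the time of writing)
  // do not define MediaSource, so the check returns false there.
  const MS = globalThis.MediaSource;
  return typeof MS === "function" && MS.isTypeSupported(mimeCodec);
}
```

A page could fall back to another technology, such as HLS, when `supportsMse()` returns false.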

MSE and WebRTC are technologies playing in totally different leagues. Roughly speaking, MSE is just a player, while WebRTC is a player, a streamer, and a calling technology (real-time, low-latency streaming).

Hence, if you need only a player and don’t require a real-time connection (less than one second of latency), MSE is a good choice for playing video streams. But if you need real time without plugins, WebRTC is your only option.

| Media Source Extensions | WebRTC |
|---|---|
| Player only | Player and streamer |
| Medium latency | Real time (less than 1 second latency) |

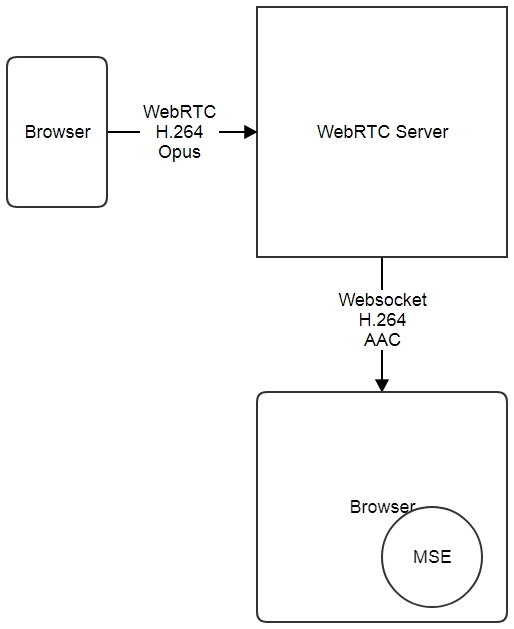

WebRTC to MSE

Suppose WebRTC is used as the streamer. This means a browser or a mobile application sends a video stream to the server.

The fastest way to get a video stream into MSE is to connect to the server via Websocket and deliver the stream to the browser over that connection. The player then demultiplexes the received stream and passes it to MSE for playback.
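As an illustration of the demultiplexing step, the sketch below splits binary Websocket messages into per-track frames. The framing (1-byte track id, 4-byte big-endian timestamp, then the payload) is entirely hypothetical and is not Web Call Server's real wire format. Before frames can be appended to MSE, they would still have to be packaged into fragmented MP4 segments; that remuxing step is omitted here.

```javascript
// HYPOTHETICAL framing, for illustration only (not Web Call Server's format):
//   byte 0     track id: 0 = video (H.264), 1 = audio (AAC)
//   bytes 1-4  timestamp in milliseconds, unsigned 32-bit big-endian
//   bytes 5-   codec payload
const TRACKS = ["video", "audio"];

// Split one binary message into its track, timestamp and payload.
function parseFrame(buffer) {
  const view = new DataView(buffer);
  if (view.byteLength < 5) throw new RangeError("frame too short");
  const track = TRACKS[view.getUint8(0)];
  if (track === undefined) throw new RangeError("unknown track id");
  return {
    track,
    timestampMs: view.getUint32(1), // DataView reads big-endian by default
    payload: new Uint8Array(buffer, 5), // view on the bytes after the header
  };
}

// Browser-only wiring: route each frame to a per-track handler, e.g.
// { video: (frame) => ..., audio: (frame) => ... }.
function openFrameSocket(url, handlers) {
  const socket = new WebSocket(url);
  socket.binaryType = "arraybuffer"; // receive binary messages as ArrayBuffer
  socket.onmessage = (event) => {
    const frame = parseFrame(event.data);
    handlers[frame.track](frame);
  };
  return socket;
}
```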

The broadcasting flowchart is:

- The broadcasting browser sends a WebRTC stream encoded as H.264+Opus to the server.

- The WebRTC server delivers the stream over Websocket as H.264+AAC.

- A viewer’s browser opens the stream and passes the H.264 and AAC frames to MSE for playback.

- The player plays audio and video.
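For the video to reach MSE without transcoding, the broadcasting browser has to send H.264 rather than another video codec. One way to express that preference in the browser is the standard `RTCRtpTransceiver.setCodecPreferences()` method, sketched below; signalling with the server is omitted, and `preferH264` and `startPublishing` are hypothetical names.

```javascript
// Hypothetical helper: stable reordering that moves H.264 entries first and
// keeps every other codec (VP8, RTX, ...) in its original relative order.
function preferH264(codecs) {
  const isH264 = (c) => c.mimeType.toLowerCase() === "video/h264";
  return [...codecs.filter(isH264), ...codecs.filter((c) => !isH264(c))];
}

// Browser-only: add camera and microphone tracks to an RTCPeerConnection
// `pc` and ask for H.264 on the video transceiver. Opus is the usual
// WebRTC audio codec, so audio needs no preference here.
async function startPublishing(pc) {
  const stream = await navigator.mediaDevices.getUserMedia({ video: true, audio: true });
  for (const track of stream.getTracks()) {
    const transceiver = pc.addTransceiver(track, { direction: "sendonly", streams: [stream] });
    if (track.kind === "video") {
      const { codecs } = RTCRtpReceiver.getCapabilities("video");
      transceiver.setCodecPreferences(preferH264(codecs));
    }
  }
  return stream;
}
```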

Therefore, when Media Source Extensions is used as the player, the H.264-encoded video part of the WebRTC stream reaches the player without transcoding, which lowers CPU usage on the server.

The Opus audio is transcoded to AAC so that MSE can play it easily, but audio transcoding takes far fewer resources than video transcoding.
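Because the H.264 video is passed through untouched, the player has to declare the stream's exact codec string when it creates the `SourceBuffer`. The sketch below derives that string from an H.264 SPS NAL unit using the RFC 6381 `avc1.PPCCLL` form. It assumes the SPS is available as a `Uint8Array` including its NAL header byte, and that the transcoded audio is AAC-LC (`mp4a.40.2`); both helper names are hypothetical.

```javascript
// RFC 6381 codec string for H.264: "avc1." + profile_idc, constraint flags
// and level_idc, two upper-case hex digits each, taken from the SPS.
function avcCodecFromSps(sps) {
  // NAL unit type 7 (low 5 bits of the header byte) marks an SPS.
  if (sps.length < 4 || (sps[0] & 0x1f) !== 7) {
    throw new Error("not an H.264 SPS NAL unit");
  }
  const hex = (b) => b.toString(16).padStart(2, "0").toUpperCase();
  return `avc1.${hex(sps[1])}${hex(sps[2])}${hex(sps[3])}`;
}

// Assumption: the server transcodes Opus to AAC-LC.
const AAC_LC = "mp4a.40.2";

// Full MIME type to pass to MediaSource.addSourceBuffer().
function mimeForH264Aac(sps) {
  return `video/mp4; codecs="${avcCodecFromSps(sps)}, ${AAC_LC}"`;
}
```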

Examples

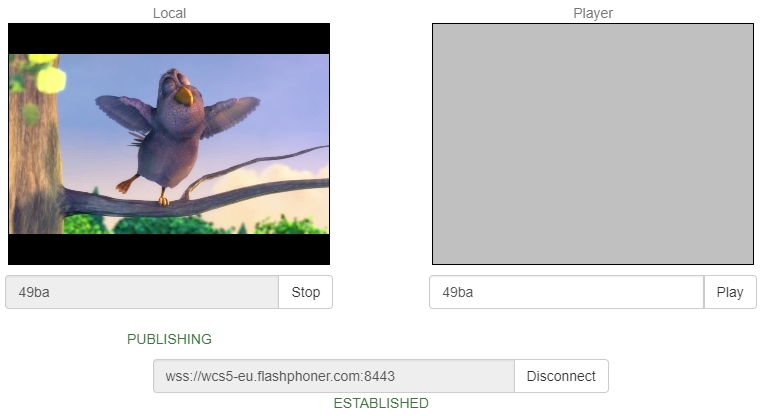

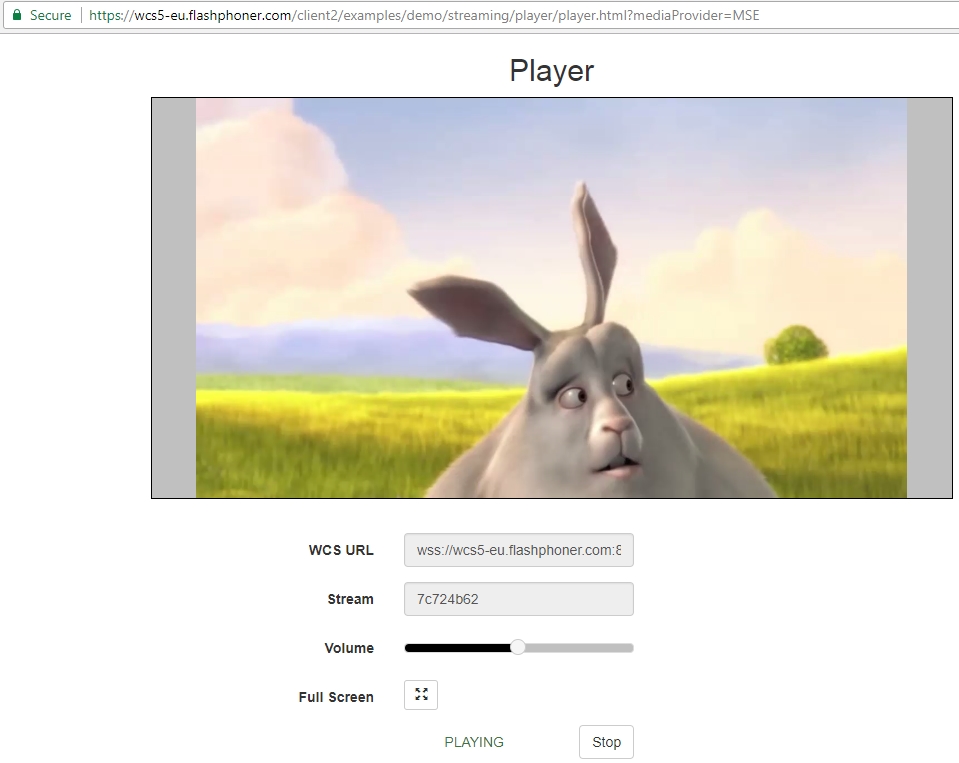

The streamer and MSE player examples below use Web Call Server 5 as the server that converts a WebRTC video stream into a format suitable for Media Source Extensions.

As the streamer, we use the Two Way Streaming web application, which sends the WebRTC video stream to the server.

As the player, we use a test player with Websocket and MSE support.

The MSE player code uses the high-level Web Call Server API.

Create a div element with the id `myMSE` on the HTML page: `<div id="myMSE"></div>`.

Then start playback of the video stream.

Pass the div element as the value of the display parameter, and the MSE player will be embedded into this div block.

```javascript
// `session` is an already-established Web Call Server session
session.createStream({name: "stream22", display: document.getElementById("myMSE")}).play();
```

Publishing a WebRTC stream from another page works the same way.

The stream is assigned a unique name, stream22, which is the name specified during playback.

```javascript
// "myWebCam" is the div used as the publisher's display element
session.createStream({name: "stream22", display: document.getElementById("myWebCam")}).publish();
```

The full source code of the streamer and the player can be found on GitHub.

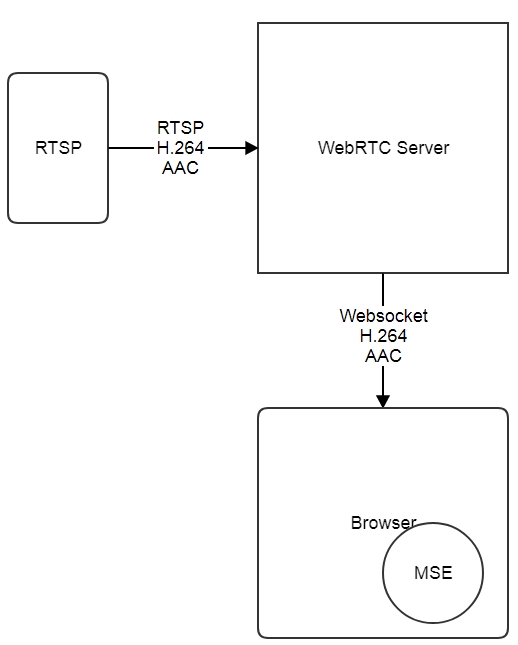

RTSP to MSE

One more case of using MSE over Websockets is playing video from an IP camera or any other system that publishes a video stream via RTSP.

An IP camera usually supports H.264 and AAC natively, which are exactly the codecs used with MSE. This makes it possible to avoid transcoding and save CPU resources.
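The passthrough decision can be read from the camera's SDP, which the server receives in response to an RTSP DESCRIBE request. This sketch parses `a=rtpmap` lines (format defined in RFC 4566) and flags any encoding other than H.264 or AAC for transcoding; the set of passthrough encodings and the `planTracks` name are assumptions for illustration.

```javascript
// Assumption: encodings MSE can take as-is (H.264 video, AAC audio as
// MPEG4-GENERIC per RFC 3640 or MP4A-LATM per RFC 3016).
const PASSTHROUGH = new Set(["H264", "MPEG4-GENERIC", "MP4A-LATM"]);

// Parse "a=rtpmap:<payload type> <encoding>/<clock rate>[/<channels>]" lines
// and mark each track as passthrough or transcode.
function planTracks(sdp) {
  const plan = [];
  for (const line of sdp.split(/\r?\n/)) {
    const match = /^a=rtpmap:(\d+) ([^\/\s]+)\//.exec(line.trim());
    if (!match) continue;
    const encoding = match[2].toUpperCase();
    plan.push({
      payloadType: Number(match[1]),
      encoding,
      transcode: !PASSTHROUGH.has(encoding),
    });
  }
  return plan;
}
```

For a WebRTC source the same check would flag Opus for transcoding, which matches the Opus-to-AAC step described in the WebRTC section.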

The broadcasting flowchart is as follows:

- The browser requests an RTSP stream.

- The server establishes a connection to the camera and requests this stream from the camera via RTSP.

- The camera sends the RTSP stream. Streaming starts.

- On the server side, the RTSP stream is converted for Websocket delivery and sent to the browser.

- The browser sends the stream to the MSE player for playback.

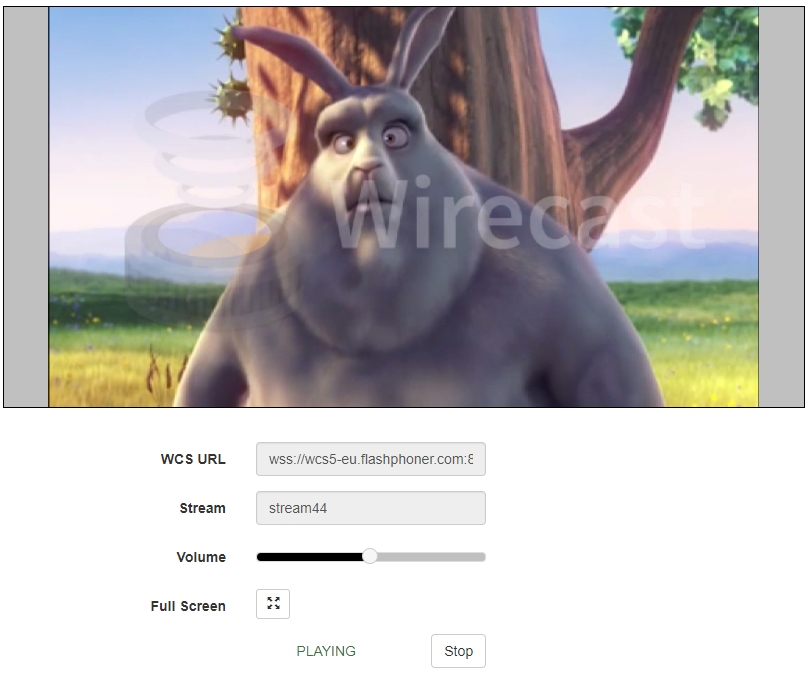

To play the RTSP stream, we use the same player shown above. We simply provide the full URL of the RTSP stream instead of a stream name.

At the time of writing, the MSE player has been tested in Chrome, Firefox, and Safari.
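A small hypothetical helper can validate the RTSP URL before it is passed as the `name` in the same `createStream()` call the examples above use. Only the idea of passing the URL as the name comes from the text; the helper and its check are illustrative.

```javascript
// Hypothetical helper: build createStream() options with a full RTSP URL
// as the stream name, rejecting anything that is not an rtsp:// URL.
function rtspPlayOptions(rtspUrl, display) {
  const url = new URL(rtspUrl); // throws TypeError on malformed input
  if (url.protocol !== "rtsp:") {
    throw new Error(`expected an rtsp:// URL, got ${url.protocol}`);
  }
  return { name: rtspUrl, display };
}
```

For example, assuming `session` is an established session as before and a camera at a hypothetical address: `session.createStream(rtspPlayOptions("rtsp://192.168.1.5:554/live.sdp", document.getElementById("myMSE"))).play();`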

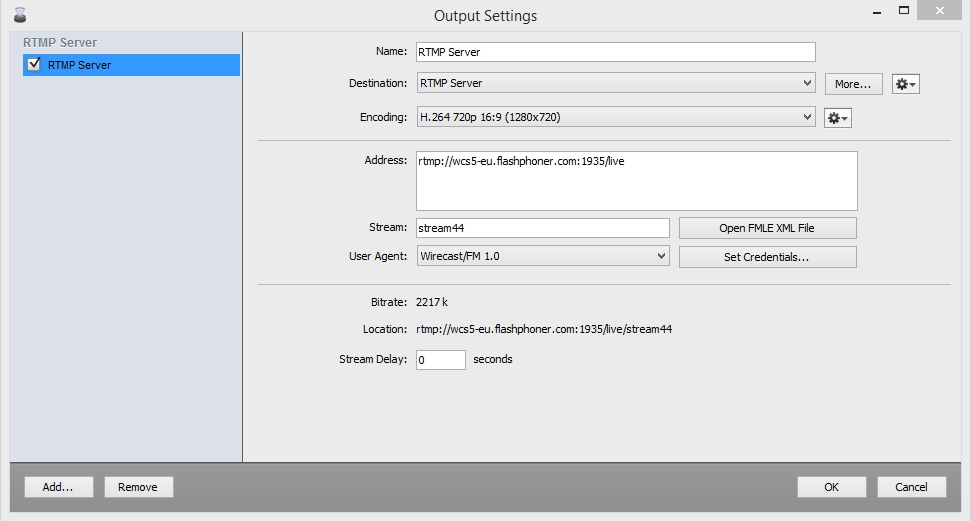

RTMP to MSE

RTMP is the de facto standard protocol for delivering live content from a user to a CDN.

Therefore, we need to make sure that a standard RTMP stream with H.264 and AAC codecs plays correctly in Media Source Extensions.

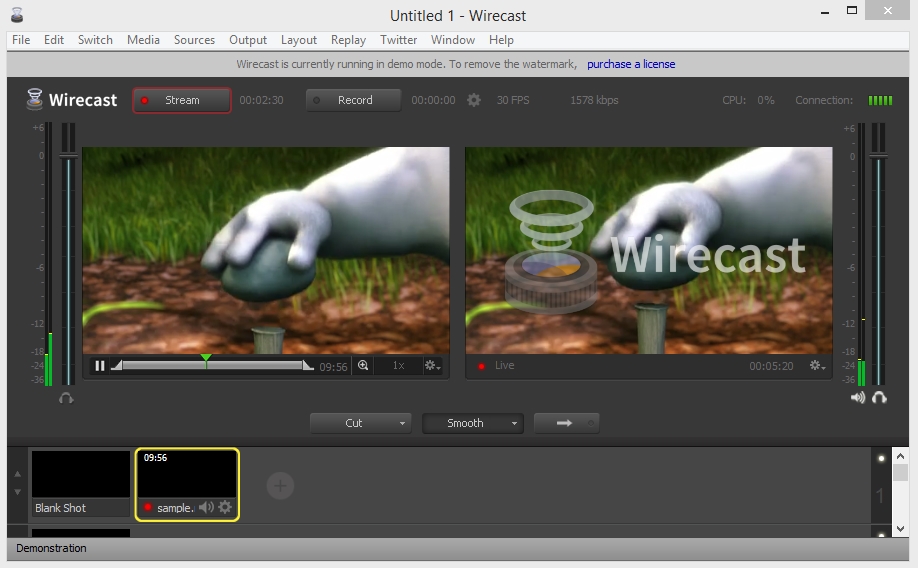

We can test this using an encoder such as Wirecast (you can also use FMLE, ffmpeg, OBS, or others).

- Open Wirecast and go to the Output menu. Specify the server address and the RTMP stream name, for example stream44.

- Start streaming to the server.

- Play the stream in the MSE player.
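If you use ffmpeg instead of Wirecast, the publishing command can be assembled as below. This is a sketch: it assumes ffmpeg with libx264 is installed, and the `rtmp://<host>:1935/live/<stream>` address (in particular the `live` application name) is a hypothetical placeholder for whatever your server expects.

```javascript
// Hypothetical helper: ffmpeg arguments that publish a file as a live
// H.264 + AAC RTMP stream.
function rtmpPublishArgs(inputFile, host, streamName) {
  return [
    "-re",             // read input at its native frame rate (live-like)
    "-i", inputFile,
    "-c:v", "libx264", // H.264 video
    "-c:a", "aac",     // AAC audio
    "-f", "flv",       // RTMP carries FLV-packaged media
    // Assumption: "live" is a placeholder application name.
    `rtmp://${host}:1935/live/${streamName}`,
  ];
}
```

In Node.js the array can be passed to `child_process.spawn("ffmpeg", args)`; `-re` makes ffmpeg read a file at its native rate so that it behaves like a live source.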

Comparison with other playback technologies

Now let’s compare MSE with other technologies for playing video in a browser.

In particular, we compare it with these four technologies:

- WebRTC

- Flash

- HLS

- Canvas + Web Audio

| | MSE | WebRTC | Flash | HLS | Canvas |
|---|---|---|---|---|---|
| Supports Chrome, Firefox | Yes | Yes | Yes | Yes | Yes |
| Supports iOS Safari | No | No | No | Yes | Yes |
| Supports Mac Safari | Yes | No | Yes | Yes | Yes |
| Latency, seconds | 3 | 0.5 | 3 | 20 | 3 |
| Full HD | Yes | Yes | Yes | Yes | No |

Hence, if you want to play a live stream in a browser with low latency, MSE is a good solution that replaces Flash in the latest browsers.

If latency is not critical at all, HLS is the solution of choice because of its versatility. However, if low latency is required, you should use a combination of WebRTC, Flash, Canvas, and MSE to cover the majority of browsers. Finally, if latency requirements are extremely strict, such as less than 1 second, the only remaining choices are WebRTC and Flash RTMFP.
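The comparison table and the conclusions above can be expressed as data plus a filter. This is an illustrative sketch: the values are the article's approximate figures (latency in seconds), and `candidates` is a hypothetical helper, not part of any library.

```javascript
// The comparison table as data (approximate latency in seconds).
const TECHNOLOGIES = {
  MSE:    { chromeFirefox: true, iosSafari: false, macSafari: true,  latency: 3,   fullHd: true },
  WebRTC: { chromeFirefox: true, iosSafari: false, macSafari: false, latency: 0.5, fullHd: true },
  Flash:  { chromeFirefox: true, iosSafari: false, macSafari: true,  latency: 3,   fullHd: true },
  HLS:    { chromeFirefox: true, iosSafari: true,  macSafari: true,  latency: 20,  fullHd: true },
  Canvas: { chromeFirefox: true, iosSafari: true,  macSafari: true,  latency: 3,   fullHd: false },
};

// Names of technologies that run on `platform` ("chromeFirefox",
// "iosSafari" or "macSafari") within the latency budget, in table order.
function candidates(platform, maxLatencySeconds, needFullHd = false) {
  return Object.entries(TECHNOLOGIES)
    .filter(([, t]) => t[platform] && t.latency <= maxLatencySeconds)
    .filter(([, t]) => !needFullHd || t.fullHd)
    .map(([name]) => name);
}
```

For instance, `candidates("chromeFirefox", 1)` yields only WebRTC, since the table lists no other sub-second option, while `candidates("iosSafari", 5)` leaves only Canvas.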

References

Two Way Streaming – a demo broadcast of a WebRTC stream to the server

Source – the source code of the WebRTC stream broadcast

Player – a demo player with Media Source Extensions support

Source – the source code of the player with MSE support

Web Call Server 5 – a server that supports WebRTC and RTSP broadcasts and converts streams for Media Source Extensions
