In April 2016, a surprising press release announced that Apple was bringing WebRTC support to Safari on Mac OS and iOS. A year has passed since that press release, and Apple is still rolling WebRTC out to Safari. Stay tuned.

However, some of us can’t wait. Some need real-time video in Safari right now, so this article explains how to get by without WebRTC in iOS Safari and Mac OS Safari, and what you can replace it with.

Today, we know of the following candidates:

- HLS

- Flash

- Websockets

- WebRTC Plugin

- iOS native app

Since we are looking for an alternative to RTC (Real Time Communication), we should compare the candidates not only by supported platform (iOS / Mac OS) but also by latency in seconds.

| | iOS | Mac OS | Latency, seconds |
| --- | --- | --- | --- |
| HLS, DASH | Yes | Yes | 15 |
| Flash RTMP | No | Yes | 3 |
| Flash RTMFP | No | Yes | 1 |
| Websockets | Yes | Yes | 3 |
| WebRTC Plugin | No | Yes | 0.5 |
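The comparison can also be applied mechanically. The sketch below encodes the table rows as plain data and filters them by platform and a latency budget. The name `pickTransports` and the field names are illustrative only and do not belong to any library.

```javascript
// Rows of the comparison table above; latency is in seconds.
var TRANSPORTS = [
  { name: "HLS, DASH",     ios: true,  macos: true, latency: 15 },
  { name: "Flash RTMP",    ios: false, macos: true, latency: 3 },
  { name: "Flash RTMFP",   ios: false, macos: true, latency: 1 },
  { name: "Websockets",    ios: true,  macos: true, latency: 3 },
  { name: "WebRTC Plugin", ios: false, macos: true, latency: 0.5 }
];

// Hypothetical helper: names of the transports that work on `platform`
// ("ios" or "macos") within `maxLatency` seconds, lowest latency first.
function pickTransports(platform, maxLatency) {
  return TRANSPORTS
    .filter(function (t) { return t[platform] === true && t.latency <= maxLatency; })
    .sort(function (a, b) { return a.latency - b.latency; })
    .map(function (t) { return t.name; });
}

console.log(pickTransports("ios", 5)); // [ 'Websockets' ]
```

With a budget of a few seconds, Websockets is the only row left for iOS, which is the conclusion the rest of the article builds on.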

HLS

As seen from the table, HLS is out of the question with its huge 15 seconds of latency even though it works on both platforms perfectly.

Flash RTMP

Despite being “dead”, Flash still works on Mac OS and shows acceptable latency. In Safari, however, Flash fares badly, and it may be turned off in the browser completely. It does not work on iOS.

Flash RTMFP

The same as Flash RTMP, except it works via UDP and can skip packets which is definitely better for real time playback. Good overall latency too. Does not work on iOS.

Websockets

An alternative to HLS, if the goal is low latency. Works on iOS and Mac OS.

In this case, the video stream is delivered over Websockets (RFC6455), decoded in JavaScript, and rendered on an HTML5 canvas with WebGL. This option is much faster than HLS, but it has drawbacks:

- One-way delivery. You can play a stream in real time, but cannot capture a video from the camera and send to the server.

- Limited resolution. At 800×400 and higher, smooth decoding of such a stream in JavaScript requires the powerful CPU of the latest iPhone or iPad. On iPhone 5 and iPhone 6, the resolution will probably not exceed 640×480 while remaining smooth.
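Given the resolution limit, a player may want to cap the size it requests before asking for a stream. Here is a minimal sketch, assuming a 640×480 ceiling taken from the estimate above. `fitResolution` is a hypothetical helper, not part of any SDK.

```javascript
// Hypothetical helper: scale (width, height) down to fit inside
// maxWidth x maxHeight while preserving the aspect ratio. Never scales up.
function fitResolution(width, height, maxWidth, maxHeight) {
  var scale = Math.min(1, maxWidth / width, maxHeight / height);
  var w = Math.round(width * scale);
  var h = Math.round(height * scale);
  // Make both dimensions even, since many video codecs expect that.
  return { width: w - (w % 2), height: h - (h % 2) };
}

var size = fitResolution(1280, 720, 640, 480);
console.log(size.width + "x" + size.height); // 640x360
```

The result could then be passed as the play width and height when a stream is requested.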

WebRTC Plugin

On Mac OS, you can install a WebRTC plugin that implements the WebRTC standard. This gives the best latency, but requires the user to download and install third-party software. In all fairness, Adobe Flash Player is a plugin too and may also require manual installation, but the “dead” Flash by Adobe is certainly more popular than no-name WebRTC plugins. Besides, WebRTC plugins do not work in iOS Safari.

Other alternatives to WebRTC for iOS

If we move beyond the Safari browser, there are other options as well:

iOS native app

We can implement an iOS application with WebRTC support and get low latency and all the power of WebRTC. However, it is not a browser, and it has to be installed from the App Store.

Bowser

A browser with WebRTC support for iOS. They say it does support WebRTC, but we didn’t test it. Not a very popular browser anyway. However, if you have means to make users install it, you can go with it.

Ericsson

Same as Bowser. It is not clear whether it works well with WebRTC. Not very popular on iOS.

Wait a little bit more

You can wait for Apple to embed WebRTC. One year has passed. Possibly the solution is already on the verge of release, who knows. Maybe you’ve got some inside information?

Websockets as a replacement for WebRTC on iOS

From the table above it is evident that only two options remain for iOS Safari: HLS and Websockets. The first has 15 seconds of latency. The second has limitations and about 3 seconds of latency. There is also MPEG DASH, but in terms of real time it is the same as HLS, since both deliver over HTTP.

Due to limitations:

- JavaScript decoding and support for low resolutions

- One-way streaming (playback only)

Websockets certainly cannot claim to be a fully functional replacement for WebRTC in the iOS Safari browser, but they at least make it possible to play real-time video streams right now.

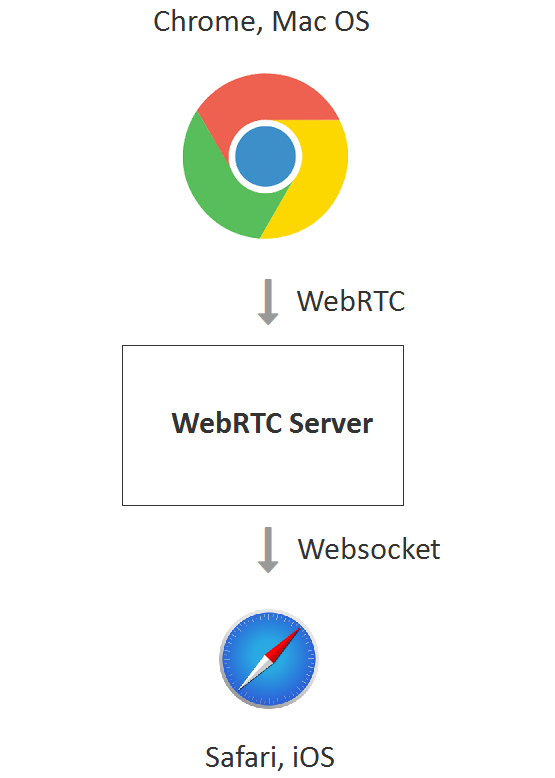

In this case, the real time broadcasting looks as follows:

- Send a WebRTC video stream, for example, from Mac OS or Win version of the Chrome browser to the WebRTC server that supports converting the stream to Websockets.

- The WebRTC server converts the stream to MPEG + G.711 and wraps it in the Websockets transport protocol.

- iOS Safari establishes a connection to the server via Websockets and fetches the video stream. Then it unpacks the stream and decodes audio and video. Audio is played using Web Audio API, and video is rendered to Canvas using WebGL.
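The last step, where the browser unpacks the stream and hands audio and video to separate decoders, can be sketched as below. The article does not describe the actual wire format, so the framing here is purely an assumption for illustration: the first byte marks the track type and the rest is the encoded payload. `routeFrame` and the handler names are hypothetical.

```javascript
// ASSUMPTION: illustrative framing only. Byte 0 is the track type
// (0 = audio, 1 = video); the remaining bytes are the encoded payload.
// This is NOT the actual Web Call Server wire format.
var TRACK_AUDIO = 0;
var TRACK_VIDEO = 1;

// Route one binary Websockets message to the matching decoder callback.
// Returns true if the frame was dispatched, false if it was dropped.
function routeFrame(arrayBuffer, handlers) {
  var bytes = new Uint8Array(arrayBuffer);
  if (bytes.length < 2) {
    return false; // no payload to decode
  }
  var payload = bytes.subarray(1);
  if (bytes[0] === TRACK_AUDIO) {
    handlers.onAudio(payload); // e.g. decode and play via Web Audio API
    return true;
  }
  if (bytes[0] === TRACK_VIDEO) {
    handlers.onVideo(payload); // e.g. decode and draw to a WebGL canvas
    return true;
  }
  return false; // unknown track type
}

// In a browser this would be wired to a binary WebSocket, for example:
//   var ws = new WebSocket(url);
//   ws.binaryType = "arraybuffer";
//   ws.onmessage = function (e) { routeFrame(e.data, handlers); };
```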

Testing playback via Websockets in iOS Safari

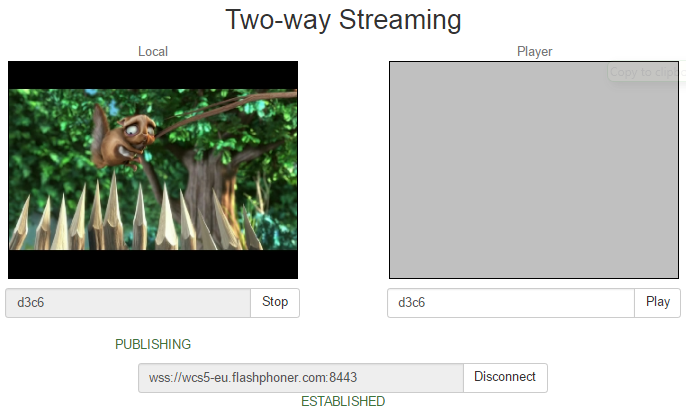

As a server for the test we use Web Call Server 5 that supports the required conversion and can publish the stream to iOS Safari via Websockets. The source for the real time video is a web camera that sends the video to the server or an IP camera that works via RTSP.

Here is how sending of a real time WebRTC video stream to the server looks in the desktop Google Chrome browser:

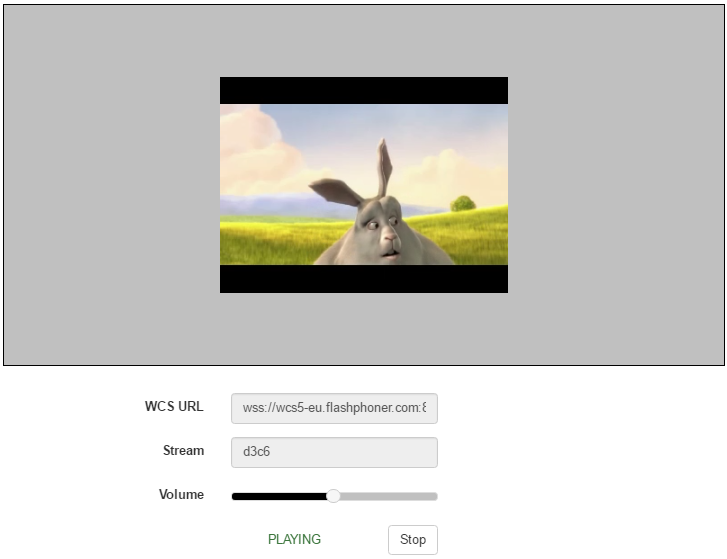

And here is how the same video stream is played in real time in the iOS Safari browser:

Here we specified d3c6 as a name for the video stream sent from Chrome via WebRTC.

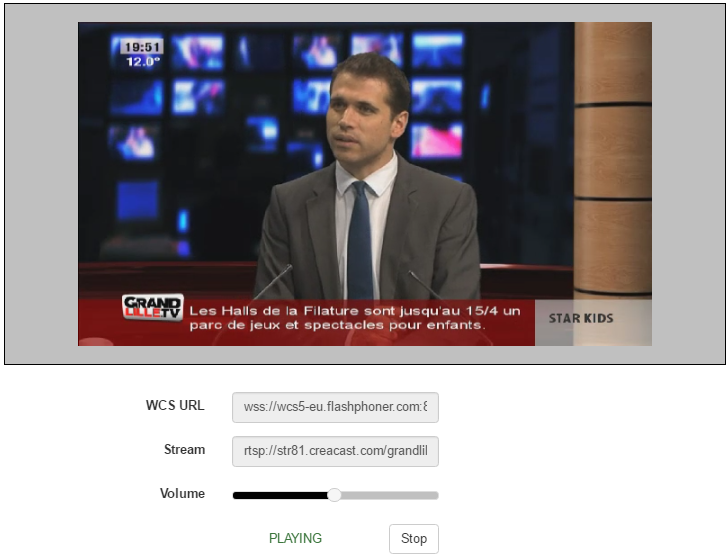

If the source of the video stream was an IP camera, this would look as follows in iOS Safari:

As you see from the screenshot, the name of the stream is now the RTSP address. The server fetched the RTSP stream and converted it to Websockets for iOS Safari.

Integrating a player for iOS Safari into the web page

The source code of the player is available here. However, the player at the link works not only in iOS Safari. It can switch between three technologies: WebRTC, Flash, Websockets in that exact priority, and contains a little bit more code than is required to just play the stream in iOS Safari.

Let’s try to minimize the code of the player to create a minimum viable configuration for playback in iOS Safari.

The minimum HTML code of the page looks like this: player-ios-safari.html

```html
<html>
<head>
    <script language="javascript" src="https://flashphoner.com/downloads/builds/flashphoner_client/wcs_api-2.0/current/flashphoner.js"></script>
    <script language="javascript" src="player-ios-safari.js"></script>
</head>
<body onLoad="init_page()">
    <h1>The player</h1>
    <div id="remoteVideo" style="width:320px;height:240px;border: 1px solid"></div>
    <input type="button" value="start" onClick="start()"/>
    <p id="status"></p>
</body>
</html>
```

From the code above it is evident that the main element on the page is the div block.

It is this block that runs the playback of the video after API scripts put HTML5 Canvas into it.

Then, there is a script of the player. Total length is 80 lines: player-ios-safari.js

```javascript
var SESSION_STATUS = Flashphoner.constants.SESSION_STATUS;
var STREAM_STATUS = Flashphoner.constants.STREAM_STATUS;
var remoteVideo;
var stream;

function init_page() {
    //init api
    try {
        Flashphoner.init({
            receiverLocation: '../../dependencies/websocket-player/WSReceiver2.js',
            decoderLocation: '../../dependencies/websocket-player/video-worker2.js'
        });
    } catch(e) {
        return;
    }
    //video display
    remoteVideo = document.getElementById("remoteVideo");
    onStopped();
}

function onStarted(stream) {
    //on playback start
}

function onStopped() {
    //on playback stop
}

function start() {
    Flashphoner.playFirstSound();
    var url = "wss://wcs5-eu.flashphoner.com:8443";
    //create session
    console.log("Create new session with url " + url);
    Flashphoner.createSession({urlServer: url}).on(SESSION_STATUS.ESTABLISHED, function(session){
        setStatus(session.status());
        //session connected, start playback
        playStream(session);
    }).on(SESSION_STATUS.DISCONNECTED, function(){
        setStatus(SESSION_STATUS.DISCONNECTED);
        onStopped();
    }).on(SESSION_STATUS.FAILED, function(){
        setStatus(SESSION_STATUS.FAILED);
        onStopped();
    });
}

function playStream(session) {
    var streamName = "12345";
    var options = {
        name: streamName,
        display: remoteVideo
    };
    options.playWidth = 640;
    options.playHeight = 480;
    stream = session.createStream(options).on(STREAM_STATUS.PLAYING, function(stream) {
        setStatus(stream.status());
        onStarted(stream);
    }).on(STREAM_STATUS.STOPPED, function() {
        setStatus(STREAM_STATUS.STOPPED);
        onStopped();
    }).on(STREAM_STATUS.FAILED, function() {
        setStatus(STREAM_STATUS.FAILED);
        onStopped();
    });
    stream.play();
}

//show connection or remote stream status
function setStatus(status) {
    //display stream status
}
```
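The setStatus() function in the script above is left as an empty stub. One possible way to fill it in, assuming the `<p id="status">` element from the HTML page, is sketched below. `createStatusWriter` is a hypothetical helper that takes the document object as a parameter so it can be tried outside a browser.

```javascript
// Hypothetical replacement for the empty setStatus() stub.
// `doc` is the page's document object, passed in for testability.
function createStatusWriter(doc) {
  return function setStatus(status) {
    // Look the element up on every call, so this also works when the
    // script is loaded in <head> before the body has been parsed.
    var el = doc.getElementById("status");
    if (el) {
      el.textContent = String(status);
    }
  };
}

// On the page, this would replace the stub:
//   var setStatus = createStatusWriter(document);
```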

One of the most important parts of this script is API initialization:

```javascript
Flashphoner.init({
    receiverLocation: '../../dependencies/websocket-player/WSReceiver2.js',
    decoderLocation: '../../dependencies/websocket-player/video-worker2.js'
});
```

Upon initialization, two more scripts are loaded:

- WSReceiver2.js

- video-worker2.js

These scripts are the core of the Websockets player. The first one is in charge of delivering the video stream, and the second one processes it. The flashphoner.js, WSReceiver2.js and video-worker2.js scripts are available for download in the Web SDK build for Web Call Server and must be included to play a video stream in iOS Safari.

Therefore, the following scripts are required:

- flashphoner.js

- WSReceiver2.js

- video-worker2.js

- player-ios-safari.js
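The two script locations passed to Flashphoner.init() are paths relative to the page, so they change with where the files are deployed. The sketch below builds the same options object from a single base directory. `buildInitOptions` is a hypothetical helper; only the `receiverLocation` and `decoderLocation` option names come from the script above.

```javascript
// Hypothetical helper: build the options object for Flashphoner.init()
// from the directory that holds the two Websockets player scripts.
function buildInitOptions(baseDir) {
  var dir = baseDir.replace(/\/+$/, ""); // drop trailing slashes
  return {
    receiverLocation: dir + "/WSReceiver2.js",
    decoderLocation: dir + "/video-worker2.js"
  };
}

var initOptions = buildInitOptions("../../dependencies/websocket-player/");
console.log(initOptions.receiverLocation);
// ../../dependencies/websocket-player/WSReceiver2.js
```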

Connection to the server is established with this code:

```javascript
Flashphoner.createSession({urlServer: url}).on(SESSION_STATUS.ESTABLISHED, function(session){
    setStatus(session.status());
    //session connected, start playback
    playStream(session);
}).on(SESSION_STATUS.DISCONNECTED, function(){
    setStatus(SESSION_STATUS.DISCONNECTED);
    onStopped();
}).on(SESSION_STATUS.FAILED, function(){
    setStatus(SESSION_STATUS.FAILED);
    onStopped();
});
```
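The chained `.on()` calls in this code work because each call returns an object that accepts the next one. The stand-in below illustrates that pattern in isolation. It is not Flashphoner's implementation, and the status strings are placeholders rather than the real SESSION_STATUS values.

```javascript
// Stand-in for a session object; NOT Flashphoner's implementation.
function createFakeSession() {
  var callbacks = {};
  var session = {
    // Register a callback for a status and return the session for chaining.
    on: function (status, callback) {
      callbacks[status] = callback;
      return session;
    },
    // Test hook: simulate the server reporting a status.
    emit: function (status) {
      if (callbacks[status]) {
        callbacks[status](session);
      }
    }
  };
  return session;
}

var log = [];
var fake = createFakeSession()
  .on("ESTABLISHED", function () { log.push("play"); })
  .on("FAILED", function () { log.push("stop"); });

fake.emit("ESTABLISHED");
console.log(log); // [ 'play' ]
```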

Playback of the video stream is started with the createStream().play() API call. Upon playback, the HTML Canvas element that renders the video is embedded into the remoteVideo div element.

```javascript
function playStream(session) {
    var streamName = "12345";
    var options = {
        name: streamName,
        display: remoteVideo
    };
    options.playWidth = 640;
    options.playHeight = 480;
    stream = session.createStream(options).on(STREAM_STATUS.PLAYING, function(stream) {
        setStatus(stream.status());
        onStarted(stream);
    }).on(STREAM_STATUS.STOPPED, function() {
        setStatus(STREAM_STATUS.STOPPED);
        onStopped();
    }).on(STREAM_STATUS.FAILED, function() {
        setStatus(STREAM_STATUS.FAILED);
        onStopped();
    });
    stream.play();
}
```

The following two things are hardcoded here:

1) URL of the server

```javascript
var url = "wss://wcs5-eu.flashphoner.com:8443";
```

This is a public demo server of Web Call Server 5. If something is wrong with it, you should install your own for testing.

2) The name of the stream to play

```javascript
var streamName = "12345";
```

This is the name of the video stream we want to play. If it’s a stream from an RTSP IP camera, we can set it as

```javascript
var streamName = "rtsp://host:554/stream.sdp";
```
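Instead of hardcoding these two values, a page could read them from its own query string. This is a minimal sketch. The parameter names `url` and `stream` and the helper `parsePlayerParams` are assumptions made for illustration, while `URLSearchParams` is a standard browser API.

```javascript
// Hypothetical helper: read the server URL and stream name from a query
// string such as "?url=wss://host:8443&stream=12345", falling back to
// the supplied defaults when a parameter is missing.
function parsePlayerParams(queryString, defaults) {
  var params = new URLSearchParams(queryString);
  var streamName = params.get("stream") || defaults.streamName;
  return {
    url: params.get("url") || defaults.url,
    streamName: streamName,
    // An RTSP source is addressed by its rtsp:// URL instead of a name.
    isRtsp: /^rtsp:\/\//i.test(streamName)
  };
}

// On the page, this might be called as:
//   parsePlayerParams(window.location.search,
//       { url: "wss://wcs5-eu.flashphoner.com:8443", streamName: "12345" });
```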

A less noticeable yet important call:

```javascript
Flashphoner.playFirstSound();
```

On mobile platforms, specifically on iOS Safari, there is a certain limitation of Web Audio API that prevents any audio playback until a user taps somewhere on the page. So when the Start button is clicked, we invoke the playFirstSound() method that plays a short piece of generated audio, so that the resulting video could play normally, with sound.
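For readers curious how such an unlock works in general, the sketch below shows a common Web Audio technique: playing a tiny silent buffer from inside a user gesture handler. It is not Flashphoner's actual playFirstSound() code. `playSilentSound` is a hypothetical name, and the audio context is passed in as a parameter.

```javascript
// General Web Audio "unlock" technique; NOT Flashphoner's actual code.
// Call it from a user gesture handler such as a button click.
// `audioContext` is an AudioContext (webkitAudioContext on older Safari).
function playSilentSound(audioContext) {
  // One sample of silence, mono, at 22050 Hz.
  var buffer = audioContext.createBuffer(1, 1, 22050);
  var source = audioContext.createBufferSource();
  source.buffer = buffer;
  source.connect(audioContext.destination);
  source.start(0);
  return source;
}

// In a browser, for example:
//   var Ctx = window.AudioContext || window.webkitAudioContext;
//   button.onclick = function () { playSilentSound(new Ctx()); };
```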

Finally, our custom minimalistic player, consisting of four scripts and one HTML file named player-ios-safari.html, looks as follows:

- flashphoner.js

- WSReceiver2.js

- video-worker2.js

- player-ios-safari.js

- player-ios-safari.html

The source code of the player can be downloaded here.

In conclusion, we reviewed the existing alternatives to WebRTC for iOS Safari and walked through an example of a real-time player that uses Websockets to deliver video. Perhaps this example will help someone hold out until WebRTC arrives in Safari.

References

Press-release – Apple is rolling WebRTC for Safari

Websockets – RFC6455

WebGL – specification

Web Call Server – WebRTC server that can convert a video stream to Websockets to play it on iOS Safari

Install WCS – download and install

Run on Amazon EC2 – run a ready to use instance of the server on Amazon AWS

Source code – example of a player: player-ios-safari.js player-ios-safari.html

Web SDK – web API for WCS server containing flashphoner.js, WSReceiver2.js, video-worker2.js scripts.
