In the first part, we deployed a simple dynamic CDN for broadcasting WebRTC streams to two continents and showed, using a countdown timer as an example, that the latency in this kind of CDN is indeed low.

However, besides low latency, it is important to provide good broadcast quality to users. After all, this is what they are paying for. In real life, the channels between Edge servers and users differ in bandwidth and quality. For example, we are publishing a 720p stream at 2 Mbps, while the user is playing it on an Android phone over a 3G connection in an area with unstable signal reception, and the maximum resolution that gives a smooth picture is 360p at 400 Kbps.

The devices and browsers the viewers use may differ a lot. For example, we are publishing a WebRTC stream using the VP8 codec in Chrome on a PC, and the viewer is playing the stream in Safari on an iPhone, which supports only the H264 codec. Or vice versa: we are publishing an RTMP stream from OBS Studio, encoding the video in H264 and the sound in AAC, and the client is using a Chromium-based browser which supports only VP8 or VP9 for the video and Opus for the sound.

We may also need to improve on the quality of the original publication. For example, we are sharing a stream from an IP camera in a nature park. Most of the time the picture is static, and the camera delivers it at 1 frame per second. However, we want to give viewers 24 frames per second. What can be done if there is no way to replace the camera or change its settings?

In all these cases we need to transcode the stream on the server, that is, decode each received frame and then encode it with new parameters. Moreover, the parameters to be changed are often known only on the client side. Let’s have a look at how to implement transcoding in a CDN while keeping a balance between broadcast quality and server load.

Transcoding: how, where and why?

Assume we know the stream parameters the client wants to receive. For example, the viewer has begun playing the stream, and the number of lost frames in the WebRTC statistics tells us that the resolution and bitrate need to be reduced before the viewer switches to another show. In this case, the stream will be transcoded by default on the Edge server the viewer is connected to.

If the client does not support the codec used to publish the stream, transcoding can be performed on either the Edge or the Origin server.

Both methods work only as a temporary solution, and only if the Origin and/or Edge servers are provisioned with spare capacity. Transcoding is always performed frame by frame, so it is very demanding on CPU resources. As a result, a single CPU core can transcode only a small number of streams:

| Resolution | Bitrate, Kbps | Number of streams per CPU core |
|---|---|---|
| 360p | 1300 | 5 |
| 480p | 1800 | 3 |
| 720p | 3000 | 2 |

Even if we run a single transcoding process for all the users who need the same media stream parameters, it is highly probable that a few viewers with different parameters will consume the server resources entirely.
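To make the numbers in the table more tangible, here is a rough sizing sketch. It assumes that the load adds up linearly across resolutions, which is a simplification and not something the measurements above guarantee. The function name `cores_needed` and the `demand` dictionary are illustrative only.

```python
from fractions import Fraction

# Streams one CPU core can transcode, taken from the table above.
STREAMS_PER_CORE = {"360p": 5, "480p": 3, "720p": 2}


def cores_needed(demand: dict[str, int]) -> Fraction:
    """Return the CPU cores consumed by the requested transcoded streams.

    Assumption: each stream of a given resolution costs 1/N of a core,
    where N is the per-core figure from the table, and costs simply add up.
    """
    return sum(
        (Fraction(count, STREAMS_PER_CORE[resolution])
         for resolution, count in demand.items()),
        Fraction(0),
    )


# Three viewers, each asking for a different variant of the same stream.
demand = {"360p": 1, "480p": 1, "720p": 1}
print(cores_needed(demand))  # 31/30, slightly more than one full core
```

Under this simplified model, just three viewers with three different sets of parameters already occupy more than one core.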

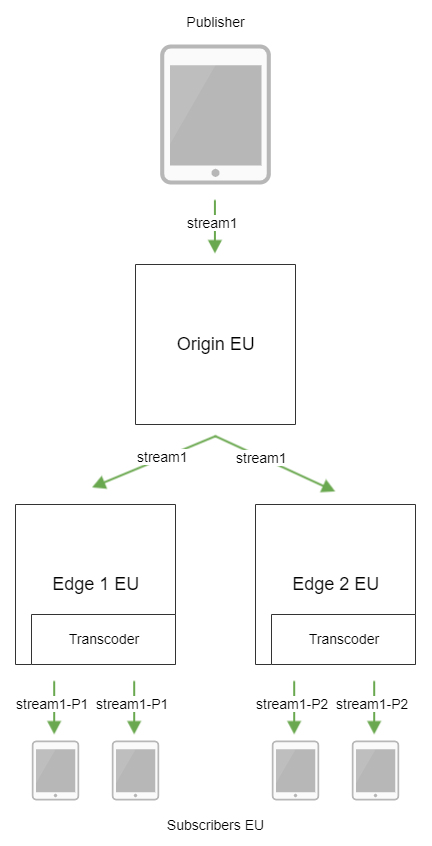

Therefore, the right decision is to set aside dedicated servers in the CDN for transcoding and to choose their configuration with this task in mind.

Adding Transcoder nodes into CDN

Now we are going to deploy two Transcoder servers in our CDN: one in the European data center and one in the American data center.

Setup of Transcoder servers:

- Transcoder 1 EU

cdn_enabled=true
cdn_ip=t-eu1.flashphoner.com
cdn_point_of_entry=o-eu1.flashphoner.com
cdn_nodes_resolve_ip=false
cdn_role=transcoder

- Transcoder 1 US

cdn_enabled=true
cdn_ip=t-us1.flashphoner.com
cdn_point_of_entry=o-eu1.flashphoner.com
cdn_nodes_resolve_ip=false
cdn_role=transcoder

Stream transcoding parameters should be described on Edge servers by means of special profiles in the cdn_profiles.yml file. As an example, look at the three profiles used by default:

- transcoding to 640×360 resolution, 30 frames per second, the keyframe will be sent every 90 frames, H264 video codec using OpenH264 encoder, Opus 48 kHz audio codec.

-640x360:
  audio:
    codec : opus
    rate : 48000
  video:
    width : 640
    height : 360
    gop : 90
    fps : 30
    codec : h264
    codecImpl : OPENH264

- transcoding to 1280×720 resolution, H264 video codec using OpenH264 encoder, with no audio transcoding.

-720p:
  video:
    height : 720
    codec : h264
    codecImpl : OPENH264

- transcoding to 1280×720 resolution, 30 frames per second, the keyframe will be sent every 90 frames, 2Mbps bitrate, H264 video codec using OpenH264 encoder, with no audio transcoding.

-720p-2Mbps:
  video:
    height : 720
    bitrate : 2000
    gop : 90
    fps : 30
    codec : h264
    codecImpl : OPENH264

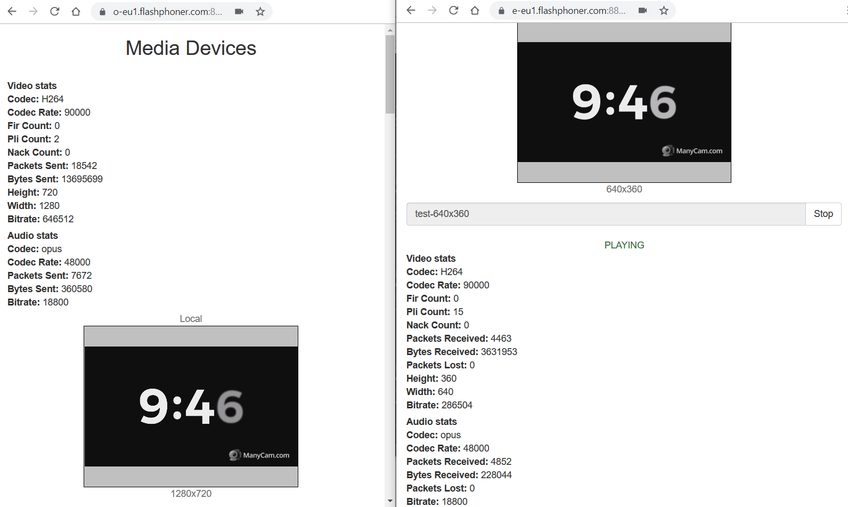

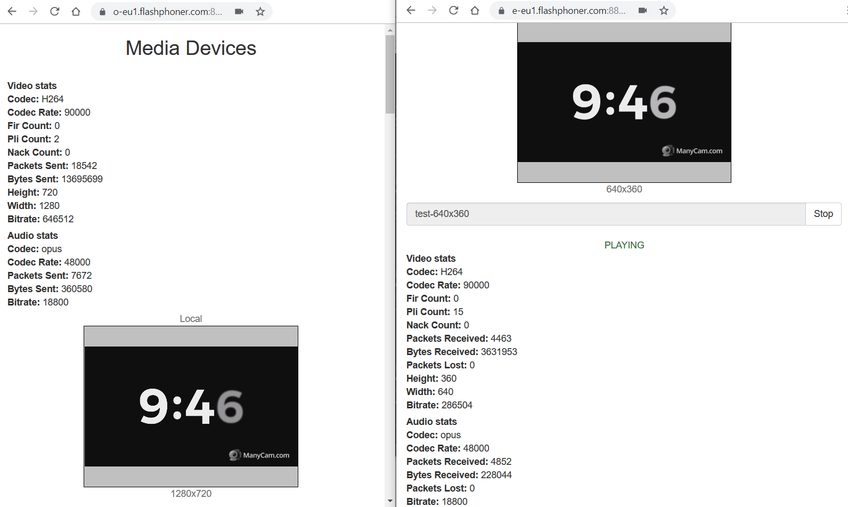

Let’s publish the 720p test stream on the o-eu1 server and play it on e-eu1, specifying the profile in the stream name, for example, test-640x360.

The stream is being transcoded!

Now we can describe a number of profiles on the Edge servers, for example, -240p, -360p and -480p. If the WebRTC statistics on the client side show a large number of lost frames, we can automatically repeat the request with a lower-resolution profile.
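The automatic fallback could be sketched roughly as follows. This is only an illustration of the decision logic, not the actual client code: the profile ladder, the 5% loss threshold and the function names are all assumptions, and the lost and received frame counters are presumed to have been read from the WebRTC statistics beforehand.

```python
# Hypothetical profile ladder, best quality first. The names follow the
# "-<profile>" convention; "" stands for the original, untranscoded stream.
PROFILE_LADDER = ["", "-480p", "-360p", "-240p"]

# Assumed threshold: step down when more than 5% of the frames are lost.
MAX_LOSS_RATIO = 0.05


def playback_name(stream: str, profile: str) -> str:
    """Build the name to request from the Edge: stream name plus profile."""
    return stream + profile


def next_profile(current: str, frames_lost: int, frames_received: int) -> str:
    """Return the profile to request next, one step lower if losses are high."""
    total = frames_lost + frames_received
    if total == 0 or frames_lost / total <= MAX_LOSS_RATIO:
        return current  # no data yet, or quality is acceptable
    index = PROFILE_LADDER.index(current)
    # Stay on the lowest profile if there is nothing below it.
    return PROFILE_LADDER[min(index + 1, len(PROFILE_LADDER) - 1)]


profile = next_profile("", frames_lost=10, frames_received=90)
print(playback_name("test", profile))  # test-480p
```

Stepping down one profile at a time keeps the picture as good as the channel allows. A real player would probably also wait a while between switches so that a short burst of losses does not trigger several downgrades in a row.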

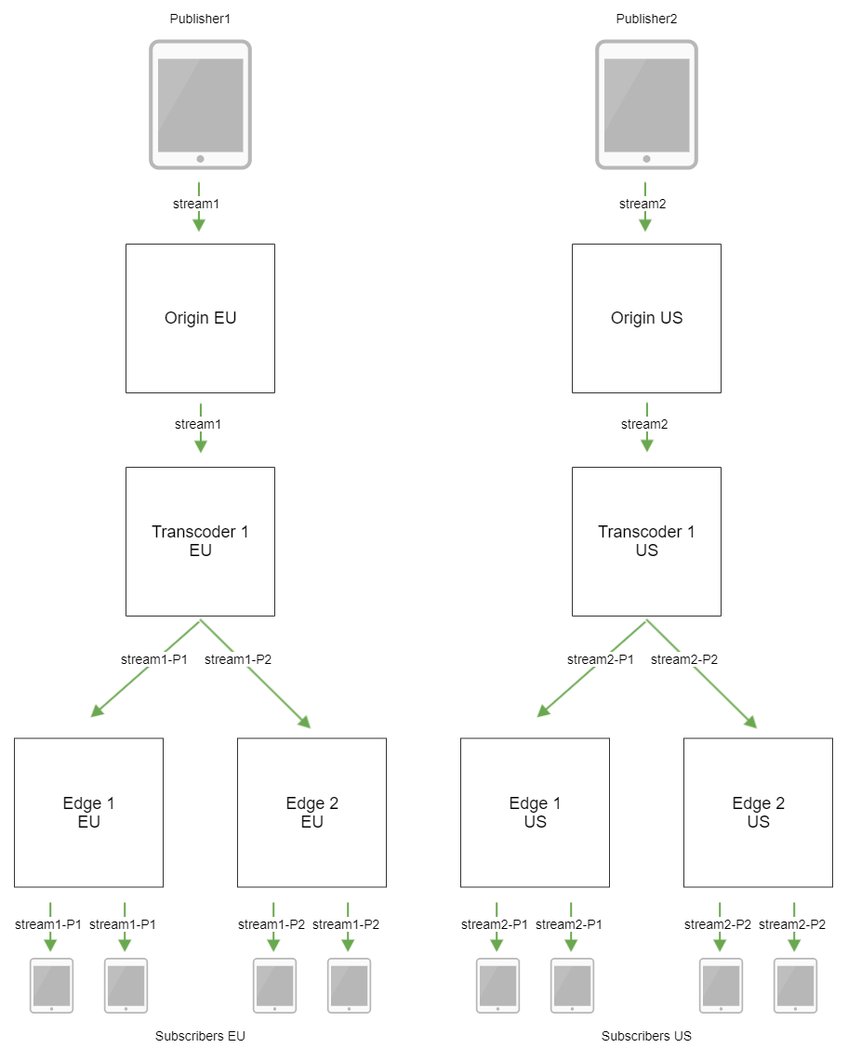

Grouping CDN nodes by continent

Currently our Transcoder servers are peers. But what if we want to transcode the streams according to geography: in America for American viewers, in Europe for European viewers? Incidentally, this will also reduce the load on the transatlantic channels, because only the original streams will travel between the Origin EU server and America, rather than every transcoded variant.

In this case, the required CDN group must be specified in the Transcoder node settings.

- Transcoder 1 EU

cdn_enabled=true
cdn_ip=t-eu1.flashphoner.com
cdn_point_of_entry=o-eu1.flashphoner.com
cdn_nodes_resolve_ip=false
cdn_role=transcoder
cdn_groups=EU

- Transcoder 1 US

cdn_enabled=true
cdn_ip=t-us1.flashphoner.com
cdn_point_of_entry=o-eu1.flashphoner.com
cdn_nodes_resolve_ip=false
cdn_role=transcoder
cdn_groups=US

The group also has to be added to the Edge server settings.

- Edge 1-2 EU

cdn_groups=EU

- Edge 1-2 US

cdn_groups=US
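The effect of these settings can be pictured with a small model. It is a sketch of the idea only: the `Node` class and the `transcoders_for` function are hypothetical, and the rule "an Edge uses the Transcoders that share at least one group with it" is an assumption about how the grouping behaves, not a description of the server internals.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical model of a CDN node: its name, cdn_role and cdn_groups."""
    name: str
    role: str
    groups: set[str] = field(default_factory=set)


def transcoders_for(edge: Node, nodes: list[Node]) -> list[Node]:
    """Return the Transcoder nodes that share at least one group with the Edge."""
    return [
        node for node in nodes
        if node.role == "transcoder" and node.groups & edge.groups
    ]


nodes = [
    Node("t-eu1", "transcoder", {"EU"}),
    Node("t-us1", "transcoder", {"US"}),
]
edge = Node("e-eu1", "edge", {"EU"})
print([node.name for node in transcoders_for(edge, nodes)])  # ['t-eu1']
```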

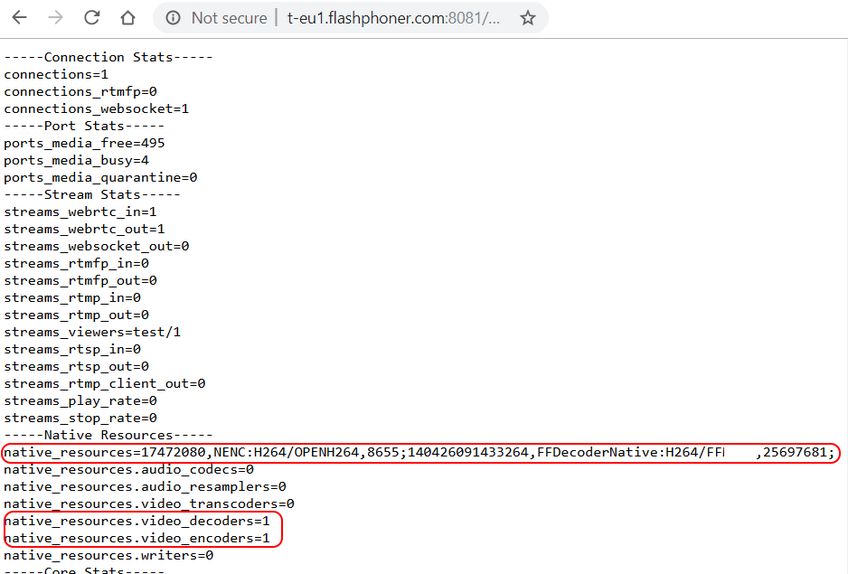

Let’s restart the nodes with the new settings. Then let’s publish the 720p test stream on the o-eu1 server and play it on e-eu1 with transcoding.

To make sure that the stream is being transcoded on t-eu1, we open the statistics page at http://t-eu1.flashphoner.com:8081/?action=stat and see the encoder and the decoder in the Native resources section.

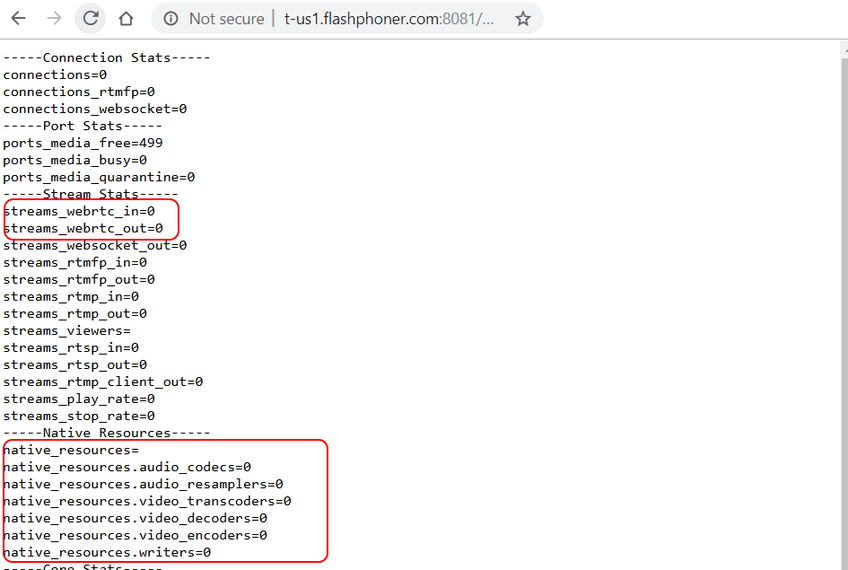

At the same time, the statistics on t-us1 show no video encoders.

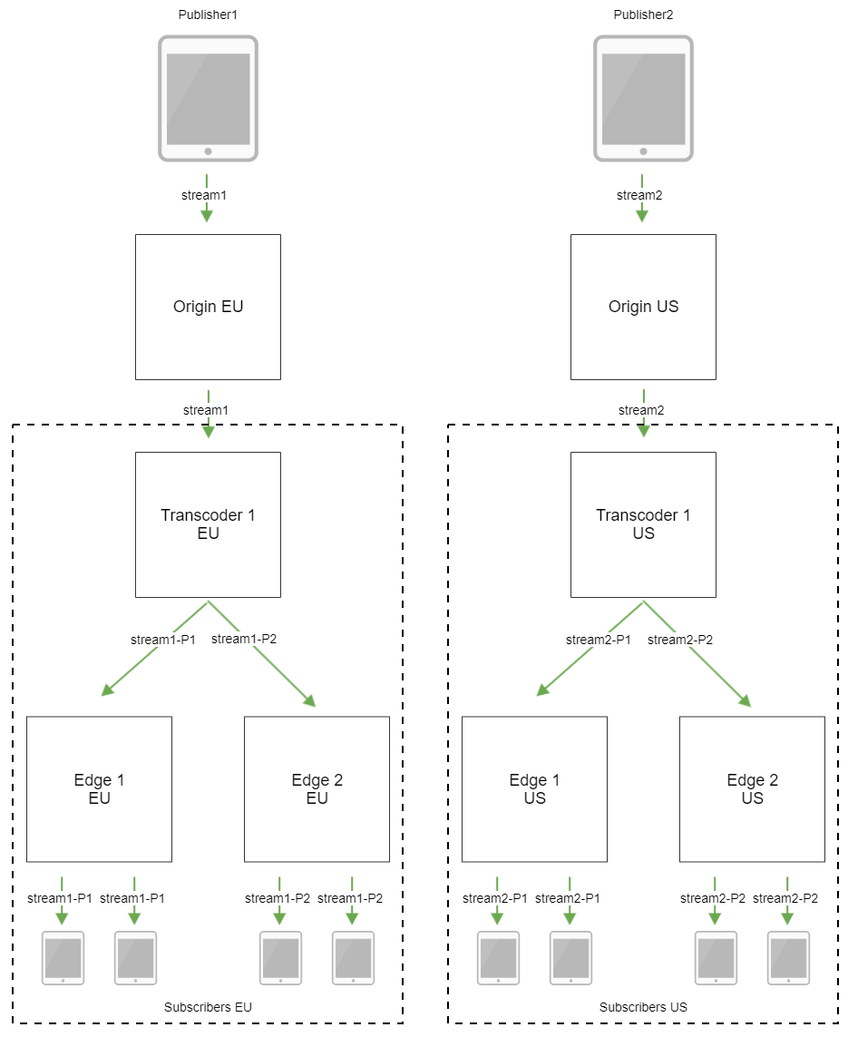

More transcoders: balancing the load

Assume the number of viewers keeps growing and one Transcoder server per continent is no longer enough. Fine, let’s add one more server on each continent.

- Transcoder 2 EU

cdn_enabled=true
cdn_ip=t-eu2.flashphoner.com
cdn_point_of_entry=o-eu1.flashphoner.com
cdn_nodes_resolve_ip=false
cdn_role=transcoder
cdn_groups=EU

- Transcoder 2 US

cdn_enabled=true
cdn_ip=t-us2.flashphoner.com
cdn_point_of_entry=o-eu1.flashphoner.com
cdn_nodes_resolve_ip=false
cdn_role=transcoder
cdn_groups=US

Now, however, we face the problem of balancing the load between the two transcoders. To avoid sending all the streams through one server, we set a threshold for the maximum allowable CPU load average on the Transcoder nodes.

cdn_node_load_average_threshold=0.95

When the CPU load average divided by the number of available cores reaches this threshold, the server stops accepting requests to transcode new streams.

We can also limit the maximum number of video encoders running simultaneously.

cdn_transcoder_video_encoders_threshold=10000

When this number is reached, the server will also stop accepting requests for stream transcoding, even if the CPU load still allows it.

In either case, the Transcoder server keeps delivering the streams that are already being transcoded on it to the Edge servers.
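The two limits can be combined into one admission check. The following is a minimal sketch of the rule as described above, not the server's actual code: the function names and the dictionary layout are hypothetical, and the default values simply mirror the settings shown earlier.

```python
from typing import Optional


def accepts_new_transcoding(
    load_average: float,
    cpu_cores: int,
    video_encoders: int,
    load_threshold: float = 0.95,      # cdn_node_load_average_threshold
    encoders_threshold: int = 10000,   # cdn_transcoder_video_encoders_threshold
) -> bool:
    """Return True if the node may take a request to transcode a new stream."""
    if load_average / cpu_cores >= load_threshold:
        return False  # per-core load average has reached the threshold
    if video_encoders >= encoders_threshold:
        return False  # too many encoders are already running
    return True


def pick_transcoder(candidates: list[dict]) -> Optional[str]:
    """Return the name of the first candidate that still accepts new streams."""
    for node in candidates:
        if accepts_new_transcoding(
            node["load_average"], node["cpu_cores"], node["video_encoders"]
        ):
            return node["name"]
    return None  # every transcoder in the group is saturated


candidates = [
    {"name": "t-eu1", "load_average": 4.0, "cpu_cores": 4, "video_encoders": 8},
    {"name": "t-eu2", "load_average": 1.0, "cpu_cores": 4, "video_encoders": 2},
]
print(pick_transcoder(candidates))  # t-eu2
```

Here t-eu1 is skipped because its load average per core is 1.0, above the 0.95 threshold, so the new stream goes to t-eu2.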

To be concluded

To sum up, we have deployed dedicated servers for transcoding media streams in our CDN and can now provide good broadcast quality to our viewers according to the capabilities of their devices and the quality of their channels. However, we have not yet touched on the subject of restricting access to streams. We will have a look at this in the final part.