VOD stands for video on demand, as in playing video on YouTube or any other streaming service. WebRTC is real-time, low-latency video. What do these two have in common, you may ask? Let's find out.

Support and a bugfix

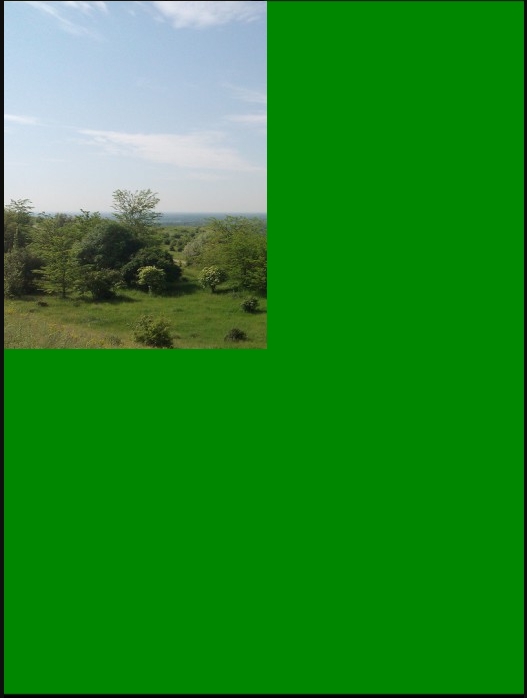

As always, it all started with support. We received a request from a programmer, apparently from an outsourcing company, who was working on a mobile telemedicine application. One of the client's requirements was to record a WebRTC video chat in an iOS application and then play the recording back in the same iOS application. Recording worked, but playback with the standard iOS components showed green artifacts. Well, not exactly artifacts: solid green rectangles covering up to ¾ of the screen. Obviously, this was unacceptable, so we started investigating the problem.

Playing the recorded WebRTC video via AVPlayerViewController

The mobile app used the standard playback components: MPMoviePlayerController or AVPlayerViewController. Both can play an mp4 video given its URL, for example http://host/sample.mp4. These components played ordinary MP4 files normally, but when it came to video recorded in the iOS application, the green area stayed on the screen and ruined everything.

WebRTC dynamically changes stream resolution

It turned out that the green artifacts were caused by WebRTC's ability to adjust video resolution on the fly. As a result, the mp4 file of the recorded chat contained frames of different dimensions: for example, a run of 640×480 frames, then a run of 320×240 frames, and so on. Such atypical videos play fine in VLC, without any artifacts, but the built-in iOS components for HTTP video playback display green artifacts whenever the resolution changes within the bitstream.
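To make the "run of 640×480, then a run of 320×240" idea concrete, here is a minimal sketch that collapses a per-frame list of (width, height) pairs into resolution runs. The frame list is hypothetical input: a real tool would have to extract frame dimensions from the H.264 bitstream itself (for example, from its sequence parameter sets), which is not shown here, and the function names are ours, not part of any SDK.

```python
from itertools import groupby
from typing import Iterable, List, Tuple

Resolution = Tuple[int, int]  # (width, height) of one decoded frame


def resolution_runs(frames: Iterable[Resolution]) -> List[Tuple[int, int, int]]:
    """Collapse consecutive frames of equal size into (width, height, frame_count) runs."""
    return [(w, h, sum(1 for _ in group)) for (w, h), group in groupby(frames)]


def resolution_switches(frames: Iterable[Resolution]) -> int:
    """Number of times the frame size changes within the sequence."""
    return max(len(resolution_runs(frames)) - 1, 0)


if __name__ == "__main__":
    # Hypothetical sequence resembling a recorded WebRTC chat.
    recorded = [(640, 480)] * 3 + [(320, 240)] * 2 + [(640, 480)]
    print(resolution_runs(recorded))
    print(resolution_switches(recorded))
```

A file that yields more than one run is exactly the kind of recording that produced the green rectangles in the built-in iOS player.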

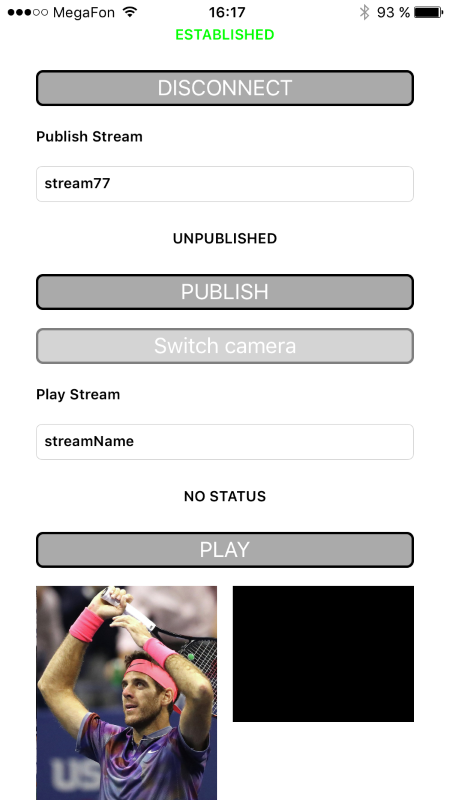

Now let's run a broadcast from an iOS application to make sure this is really the cause. For the test we will use our Two Way Streaming mobile app, built on the iOS SDK, and the WCS5-EU demo server.

Here is what the application that streams video to the server looks like:

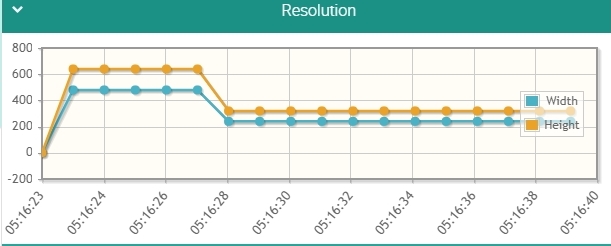

And here is how video resolution changes over time when sent from a mobile application:

The timeline shows that the picture resolution changes dynamically depending on the device's current ability to compress video, network conditions and so on. WebRTC adjusts resolution to keep latency low.

Transcoding and rescaling

One possible solution is transcoding with rescaling: decoding the frames, scaling them up or down to a single resolution, for instance 640×480, and recording the video at that resolution. But if we applied this method to every stream published on the server, CPU resources would run out after 10-20 video streams. So we had to look for a solution without transcoding.
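The reason transcoding does not scale is that its cost grows linearly with the number of streams. The sketch below only does the arithmetic for the encoding side, under assumptions of our own: every stream is normalized to 640×480 at an assumed 30 fps. It does not reproduce the 10-20 stream figure above, which comes from the server's actual behaviour, and decoding the source frames adds more work on top.

```python
# Assumptions for illustration only: every stream is normalized to 640x480
# at 30 fps. Real frame rates vary, and decoding the source frames adds
# further work on top of what is counted here.
TARGET_WIDTH, TARGET_HEIGHT = 640, 480
ASSUMED_FPS = 30


def encoded_pixels_per_second(streams: int, fps: int = ASSUMED_FPS) -> int:
    """Pixels per second the server must rescale and re-encode when every
    published stream is transcoded to TARGET_WIDTH x TARGET_HEIGHT."""
    if streams < 0:
        raise ValueError("streams must be non-negative")
    return TARGET_WIDTH * TARGET_HEIGHT * fps * streams


if __name__ == "__main__":
    for n in (1, 10, 20):
        print(f"{n:>2} streams: {encoded_pixels_per_second(n):,} px/s")
```

Under these assumptions one stream means 9,216,000 encoded pixels per second and 20 streams mean 184,320,000, whereas recording the stream as-is requires no decoding or encoding at all.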

WebRTC VOD as a solution

Then we thought: if WebRTC streams video with such resolution changes, it should also be able to play video recorded this way. So if we read the mp4 file and feed it to a browser or a mobile application via WebRTC, everything should be fine and the green rectangles on the iOS application screen should go away.

All that was left was to implement reading the recorded mp4 and passing it to the Web Call Server engine for conversion to WebRTC. The first tests confirmed the desired result: the green rectangles were gone.
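How Web Call Server reads mp4 files internally is not described here, so the following is only a sketch of the first step any mp4 reader takes: walking the top-level boxes of the ISO base media file format. The `moov` box holds the metadata and sample tables, and `mdat` holds the H.264 and AAC samples that can be handed on without re-encoding. The function name is our own.

```python
import io
import struct
from typing import BinaryIO, Iterator, Tuple


def iter_top_level_boxes(f: BinaryIO) -> Iterator[Tuple[str, int]]:
    """Yield (type, size) for each top-level box of an ISO BMFF (mp4) stream."""
    while True:
        header = f.read(8)
        if len(header) < 8:
            return  # end of file (or a truncated header)
        size, raw_type = struct.unpack(">I4s", header)
        box_type = raw_type.decode("latin-1")
        header_len = 8
        if size == 1:
            # A 64-bit "largesize" field follows the 4-character type.
            size = struct.unpack(">Q", f.read(8))[0]
            header_len = 16
        elif size == 0:
            # Size 0 means the box extends to the end of the file.
            payload_start = f.tell()
            end = f.seek(0, io.SEEK_END)
            yield box_type, end - payload_start + header_len
            return
        if size < header_len:
            raise ValueError(f"corrupt box {box_type!r} with size {size}")
        yield box_type, size
        # Skip the payload to reach the next top-level box.
        f.seek(size - header_len, io.SEEK_CUR)


if __name__ == "__main__":
    with open("sample.mp4", "rb") as mp4:  # any local mp4 file
        for name, length in iter_top_level_boxes(mp4):
            print(name, length)
```

A typical recording lists `ftyp`, `moov` and `mdat` (in some order); reading individual samples would continue from the sample tables inside `moov`.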

This way we got VOD that plays not only via WebRTC but via all supported protocols and technologies: RTMP, RTMFP, RTSP, HTML5 Canvas, Media Source, HLS and WebRTC.

WebRTC VOD Live broadcasting

Then we asked ourselves: “What if users want to broadcast the video as one stream to everyone simultaneously?”

As a result, we had to implement two types of VOD.

The first is personal VOD. For every user who wants to play the video, a new channel is opened and the video starts playing from the beginning.

The second is live VOD. If one user starts playing the video and another user connects later, the second user watches it as a live broadcast: the server sends only one stream of the video, and both users are connected to it. So they can watch a football game simultaneously and comment on it.
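The difference between the two modes can be modelled in a few lines. This is a hypothetical sketch, not the Web Call Server implementation: personal VOD opens a new channel per viewer starting at offset 0, while live VOD keeps one shared stream per file, so a late viewer joins at its current position. End of file, looping and viewers leaving are deliberately ignored.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class VodSessions:
    """Hypothetical model of personal vs live VOD; not the WCS implementation."""
    personal_channels: int = 0
    # file name -> server time (seconds) at which its shared live stream started
    live_started_at: Dict[str, float] = field(default_factory=dict)

    def join(self, file_name: str, live: bool, now: float) -> float:
        """Register a viewer and return the playback offset (seconds) they start at."""
        if not live:
            # Personal VOD: a new channel per viewer, always from the beginning.
            self.personal_channels += 1
            return 0.0
        # Live VOD: the first viewer starts the single shared stream;
        # later viewers join it at its current position.
        started = self.live_started_at.setdefault(file_name, now)
        return now - started

    def server_streams(self) -> int:
        """Streams opened so far (this sketch never closes them)."""
        return self.personal_channels + len(self.live_started_at)


if __name__ == "__main__":
    sessions = VodSessions()
    print(sessions.join("sample.mp4", live=True, now=100.0))  # first live viewer
    print(sessions.join("sample.mp4", live=True, now=130.0))  # joins 30 s in
    print(sessions.join("sample.mp4", live=False, now=130.0))  # personal, from start
    print(sessions.server_streams())
```

Two live viewers cost the server one stream, while every personal viewer adds another.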

In our player and in the API you need to know the name of the stream to play it.

For VOD, we introduced the following stream name formats:

To play the video personally, the user passes the stream name as:

vod://sample.mp4

To play the video as a live broadcast, the stream name is passed as follows:

vod-live://sample.mp4

The sample.mp4 file must be in the WCS_HOME/media folder on the server and must be an MP4 file with H.264 video and AAC audio.
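The naming rules above can be expressed as a small parser. `parse_vod_name` and `VodRequest` are hypothetical names for illustration; they are not part of the Web Call Server API. The sketch also rejects absolute and `..` paths so a name cannot point outside the media folder, which is our own assumption, and it checks only the file extension, since confirming H.264 + AAC would require parsing the file.

```python
from dataclasses import dataclass
from pathlib import PurePosixPath

# Prefixes from the article, mapped to a "live" flag (this mapping is the sketch's model).
PREFIXES = {"vod://": False, "vod-live://": True}


@dataclass(frozen=True)
class VodRequest:
    live: bool           # True for vod-live://, False for vod://
    path: PurePosixPath  # file location inside the media folder


def parse_vod_name(stream_name: str, media_dir: str) -> VodRequest:
    """Hypothetical helper: map a VOD stream name to a file under media_dir."""
    for prefix, live in PREFIXES.items():
        if stream_name.startswith(prefix):
            relative = PurePosixPath(stream_name[len(prefix):])
            break
    else:
        raise ValueError(f"not a VOD stream name: {stream_name!r}")
    # Refuse empty, absolute or '..' paths so a name cannot escape media_dir.
    if not relative.parts or relative.is_absolute() or ".." in relative.parts:
        raise ValueError(f"invalid file name in {stream_name!r}")
    # Only the extension is checked; verifying H.264 + AAC needs parsing the file.
    if relative.suffix.lower() != ".mp4":
        raise ValueError(f"expected an .mp4 file, got {relative.name!r}")
    return VodRequest(live=live, path=PurePosixPath(media_dir) / relative)


if __name__ == "__main__":
    media = "/usr/local/FlashphonerWebCallServer/media"  # WCS_HOME/media on a default install
    print(parse_vod_name("vod://sample.mp4", media))
    print(parse_vod_name("vod-live://sample.mp4", media))
```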

And what about the iOS application, you may ask?

It's fine. The iOS application now plays the VOD stream via WebRTC without any artifacts.

WebRTC VOD in a Web player

Now let's see how WebRTC VOD works in a web browser. To do this, copy the mp4 file to the /usr/local/FlashphonerWebCallServer/media folder on the server. We'll use the well-known Big Buck Bunny clip as sample.mp4.
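The copy step is usually a plain `cp`, but if you script it, a minimal sketch might look like this. `publish_for_vod` is a hypothetical helper; it assumes it runs on the server with write access to the media folder and that WCS_HOME is the default /usr/local/FlashphonerWebCallServer. It returns the stream names to enter in the player for both VOD modes.

```python
import shutil
from pathlib import Path
from typing import Tuple

# Default path from the article; adjust it if WCS_HOME differs on your server.
MEDIA_DIR = Path("/usr/local/FlashphonerWebCallServer/media")


def publish_for_vod(src: Path, media_dir: Path = MEDIA_DIR) -> Tuple[str, str]:
    """Copy an mp4 into the media folder and return its personal and live VOD names."""
    if src.suffix.lower() != ".mp4":
        raise ValueError(f"expected an .mp4 file, got {src.name!r}")
    # copy2 also preserves the file's timestamps.
    shutil.copy2(src, media_dir / src.name)
    return f"vod://{src.name}", f"vod-live://{src.name}"


if __name__ == "__main__":
    personal, live = publish_for_vod(Path("sample.mp4"))
    print(personal, live)
```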

Open the test player page, enter vod://sample.mp4 as the stream name and click Test Now.

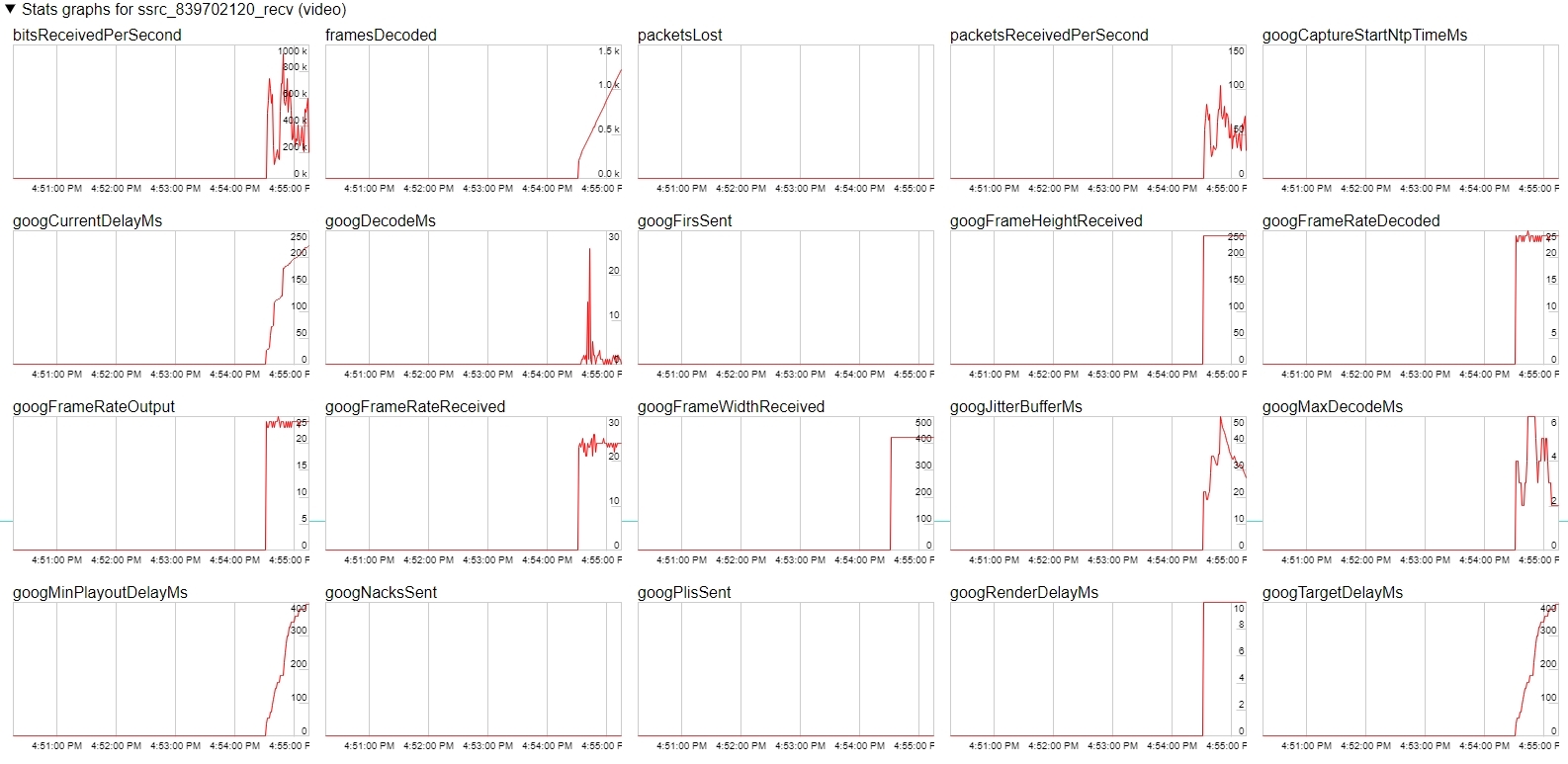

The player starts playing the stream via WebRTC. In chrome://webrtc-internals you can see playback graphs:

So the story ended well. We fixed the green screen bug that appeared when an mp4 recording of a broadcast was played in the iOS application, and made WebRTC VOD work in web browsers and on iOS and Android mobile devices.

References

1. Two Way Streaming, a test iOS application for broadcasts

2. Web player for playing WebRTC VOD streams

3. Web Call Server 5, which broadcasts mp4 videos via WebRTC