Let’s say we have 10 users who stream video from web cameras via WebRTC. We need to display thumbnails of their streams on one page.

Of course, we could simply display the videos themselves instead of still pictures, but let’s estimate the bandwidth first. Assuming each stream consumes 1 Mbps, ten streams playing at once add up to 10 Mbps. A little too much for previews, isn’t it?

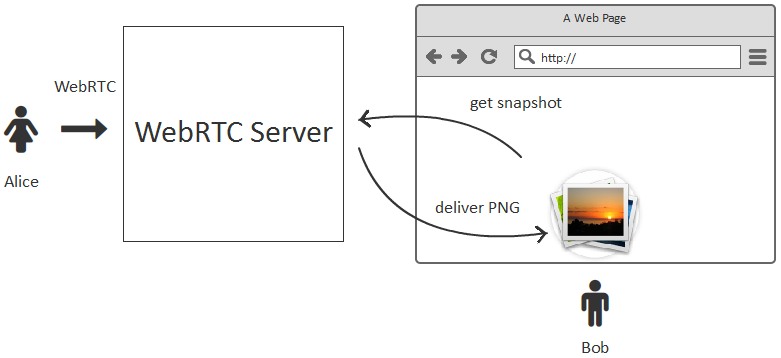

A better solution is to request a single frame of the video stream from the server, receive it as a PNG image, and display that image in the browser.
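To put rough numbers on the difference, here is a small back-of-the-envelope sketch. Only the ten streams at 1 Mbps come from the text; the snapshot size, the refresh interval and the function names are assumptions chosen for illustration, and the 4/3 factor accounts for Base64 text being about a third larger than the binary image.

```javascript
// Rough bandwidth comparison: live video previews vs. periodic snapshots.
// Everything except "10 streams at 1 Mbps" is an illustrative assumption.
const STREAMS = 10;
const VIDEO_BITRATE_MBPS = 1;      // from the text
const SNAPSHOT_SIZE_KB = 100;      // assumed size of one PNG thumbnail
const SNAPSHOT_INTERVAL_SEC = 60;  // assumed refresh period
const BASE64_OVERHEAD = 4 / 3;     // Base64 encodes 3 bytes as 4 characters

// Total bandwidth when every preview is a live video.
function videoBandwidthMbps(streams, bitrateMbps) {
  return streams * bitrateMbps;
}

// Average bandwidth when every preview is a periodically refreshed snapshot.
function snapshotBandwidthMbps(streams, sizeKB, intervalSec, overhead) {
  const bitsPerSnapshot = sizeKB * 1000 * 8 * overhead;
  return (streams * bitsPerSnapshot) / intervalSec / 1e6;
}

const videoMbps = videoBandwidthMbps(STREAMS, VIDEO_BITRATE_MBPS);
const snapshotMbps = snapshotBandwidthMbps(
  STREAMS, SNAPSHOT_SIZE_KB, SNAPSHOT_INTERVAL_SEC, BASE64_OVERHEAD);

console.log("video previews:    " + videoMbps.toFixed(2) + " Mbps");
console.log("snapshot previews: " + snapshotMbps.toFixed(2) + " Mbps");
```

Under these assumptions, the snapshots average well under 1 Mbps for all ten previews combined, although each refresh arrives as a short burst rather than a steady flow.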

Our first implementation downloaded the PNG image on request:

- The client makes an asynchronous request, stream.snapshot().

- The server saves the image in the file system.

- The user who requested the snapshot receives a link to the image and can load it over HTTP by embedding the link in the page with an <img> tag.

The solution worked but did not seem elegant, mainly because we would have to store thumbnails on the server or invent some way to upload them to Nginx or Apache so that clients could download them as static files.

As a result, we decided to encode the PNG as Base64 and send the snapshot to the client in that form. With this approach, files are not downloaded from the server via HTTP; the content of the file is sent to the client via WebSocket.
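The encoding step itself is simple. The following is a minimal sketch, assuming Node.js (for the global Buffer); the function names are hypothetical and the sample bytes are just the standard eight-byte PNG file signature, not a real snapshot.

```javascript
// Assumes Node.js, where Buffer is available globally.
// Sample data: the standard eight-byte PNG file signature.
const pngBytes = Buffer.from([0x89, 0x50, 0x4e, 0x47, 0x0d, 0x0a, 0x1a, 0x0a]);

// Sending side (sketch): binary image -> Base64 text that fits in a text message.
function encodeSnapshot(bytes) {
  return Buffer.from(bytes).toString("base64");
}

// Receiving side: Base64 text -> data URI usable as the src of an <img> element.
function toDataUri(base64Png) {
  return "data:image/png;base64," + base64Png;
}

const payload = encodeSnapshot(pngBytes);
const dataUri = toDataUri(payload);
console.log(dataUri); // data:image/png;base64,iVBORw0KGgo=
```

The trade-off is size: Base64 text is roughly a third larger than the binary image, which is usually acceptable for small thumbnails.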

Here is a step-by-step description of the process with some chunks of JavaScript code:

1. Alice sends a video stream from the web camera to the server via WebRTC and calls it stream1.

session.createStream({name:"stream1"}).publish()

2. Bob, knowing the name of the stream, can request a snapshot using the following method:

var stream = session.createStream({name:"stream1"});

stream.snapshot();

To receive the snapshot, Bob adds a listener that inserts the Base64 picture into an <img> element on the page:

stream.on(STREAM_STATUS.SNAPSHOT_COMPLETE, function(stream){

snapshotImg.src = "data:image/png;base64,"+stream.getInfo();

});

The full source code of this example is available here.
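Separately from the linked example, the request and the listener from step 2 can be wrapped into one helper. This is only a sketch: requestSnapshot is a hypothetical name, it uses only the calls shown above (createStream, on, snapshot, getInfo), and the fake session below is a stand-in so the snippet runs without a server. It is not the real SDK.

```javascript
// Hypothetical helper wrapping the request/listener pair from step 2.
// `session` and `STREAM_STATUS` are expected to come from the streaming SDK.
function requestSnapshot(session, STREAM_STATUS, streamName, onImage) {
  const stream = session.createStream({ name: streamName });
  // Register the listener before requesting, so the event cannot be missed.
  stream.on(STREAM_STATUS.SNAPSHOT_COMPLETE, function (s) {
    onImage("data:image/png;base64," + s.getInfo());
  });
  stream.snapshot();
  return stream;
}

// Stand-in objects for a dry run (assumptions, not the real SDK).
const FAKE_STATUS = { SNAPSHOT_COMPLETE: "SNAPSHOT_COMPLETE" };
const fakeSession = {
  createStream(options) {
    const handlers = {};
    const stream = {
      name: options.name,
      on(event, handler) { handlers[event] = handler; return stream; },
      // The fake completes immediately; a real server would answer later.
      snapshot() { handlers[FAKE_STATUS.SNAPSHOT_COMPLETE](stream); },
      getInfo() { return "iVBORw0KGgo="; }, // sample Base64 payload
    };
    return stream;
  },
};

let lastDataUri = null;
requestSnapshot(fakeSession, FAKE_STATUS, "stream1", (uri) => { lastDataUri = uri; });
console.log(lastDataUri);
```

In a page, the callback would typically assign the data URI to the image element, for example snapshotImg.src = uri.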

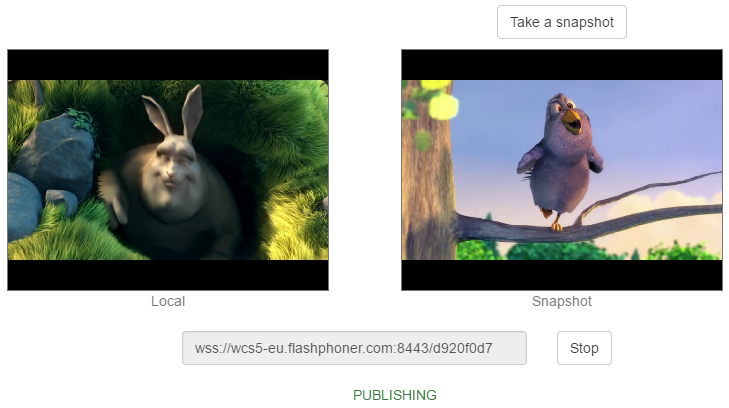

In this example you can send a video stream to the server and then receive snapshots of this video stream by clicking the Take button periodically. Of course, this can be automated with an appropriate script that will take snapshots of video streams, for example, once per minute.
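Such automation could look like the sketch below. The helper name startSnapshotPolling is hypothetical, and a counter stands in for the real stream.snapshot() call so the snippet runs on its own.

```javascript
// Hypothetical helper: calls takeSnapshot right away, then every intervalMs.
// Returns a function that stops the polling.
function startSnapshotPolling(takeSnapshot, intervalMs) {
  takeSnapshot();
  const timer = setInterval(takeSnapshot, intervalMs);
  return function stop() {
    clearInterval(timer);
  };
}

// Dry run: a counter stands in for stream.snapshot().
let requests = 0;
const stopPolling = startSnapshotPolling(() => { requests += 1; }, 60 * 1000);

// Stop immediately so this demo exits; a page would call it when the preview is closed.
stopPolling();
console.log("snapshot requests so far: " + requests);
```

With a real stream, the first argument would be something like function () { stream.snapshot(); }, and the SNAPSHOT_COMPLETE listener would update the image each time a snapshot arrives.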

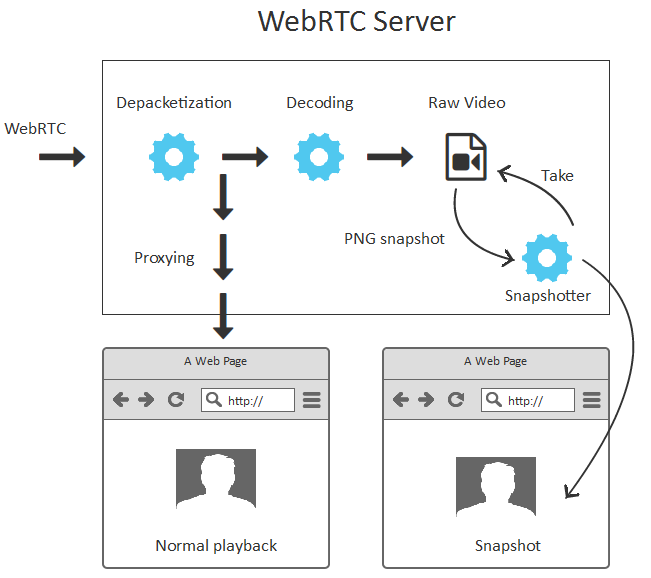

On the server side, to receive a snapshot we need to decode the video stream. The server depacketizes and decodes the inbound WebRTC video stream, and makes snapshots of the footage. The diagram illustrating both normal playback and decoding is shown below:

For normal playback, streams are not decoded and go to the client as is. If a snapshot is requested, it is taken from the decoded branch of the video stream.
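The two branches can be pictured with a toy model. This is a conceptual sketch only: the class, its methods and the string "decoder" are assumptions made for illustration and do not reflect the server’s actual code, and whether the server decodes continuously or only on demand is not stated here (the model decodes every packet for simplicity).

```javascript
// Conceptual model of the two branches; not the server's implementation.
class StreamRelay {
  constructor(decode) {
    this.decode = decode;   // hypothetical decoder: packet -> decoded frame
    this.subscribers = [];  // playback clients
    this.lastFrame = null;  // most recent frame from the decoding branch
  }

  subscribe(onPacket) {
    this.subscribers.push(onPacket);
  }

  onInboundPacket(packet) {
    // Playback branch: packets go to clients as is, without decoding.
    for (const deliver of this.subscribers) deliver(packet);
    // Decoding branch: keep the latest decoded frame for snapshots.
    this.lastFrame = this.decode(packet);
  }

  // A snapshot is taken from the decoded branch only.
  snapshot() {
    return this.lastFrame;
  }
}

// Dry run with strings standing in for packets and frames.
const relay = new StreamRelay((packet) => "decoded(" + packet + ")");
const received = [];
relay.subscribe((packet) => received.push(packet));
relay.onInboundPacket("p1");
relay.onInboundPacket("p2");
const frame = relay.snapshot();
console.log(received, frame);
```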

To work with snapshots, we use the WebRTC server Web Call Server 5.